You need gain and offset.

But if the output feeds an ADC with enough resolution, and the input range lies entirely within the output range (as in your example), you can simply connect directly and apply the required gain and offset in software.

Just connect directly.

There's really no need for anything more complex. Subtracting an offset in software is cheap and easy, and has the big advantage that you can use a value below that 4mA level to indicate a break in the current loop and report an error, shut down dangerous machines, etc.

The gain part of gain and offset is just multiplication. (Actually, it may not even be that: it may reduce to editing a constant somewhere else in the software.)

With both operations, of course, you must take care to handle any overflows or underflows that may occur (especially if you're using a language like C, where they can happen silently and produce unwanted results).
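As a rough sketch of what that software gain and offset might look like in C, assuming a 12-bit ADC, a 3.3V reference and a 160 ohm sense resistor (adjust for your hardware): the function names, the per-mille output scale and the 3.5mA loop-break threshold are all my own illustrative choices, not anything from the question.

```c
#include <stdbool.h>
#include <stdint.h>

/* All of these values are assumptions for illustration. */
#define ADC_FULL_SCALE  4095u      /* assumed 12-bit ADC */
#define VREF_UV         3300000u   /* assumed 3.3 V reference, in microvolts */
#define R_SENSE_OHM     160u       /* sense resistor value */
#define LOOP_ZERO_UA    4000       /* 4 mA corresponds to 0 % */
#define LOOP_SPAN_UA    16000      /* 20 mA - 4 mA */
#define LOOP_BREAK_UA   3500       /* hypothetical "loop broken" threshold */

/* Gain: convert raw ADC counts to loop current in microamps. */
static int32_t counts_to_microamps(uint16_t raw)
{
    if (raw > ADC_FULL_SCALE) {
        raw = ADC_FULL_SCALE;      /* guard against out-of-range input */
    }
    /* raw * VREF_UV can reach about 1.35e10, which does not fit in
       32 bits, so widen to 64 bits before multiplying. */
    uint64_t num = (uint64_t)raw * VREF_UV;
    return (int32_t)(num / ((uint64_t)ADC_FULL_SCALE * R_SENSE_OHM));
}

/* Offset: returns false if the loop looks broken; otherwise writes the
   reading as 0..1000 per mille of the 4-20 mA span. */
static bool loop_to_permille(uint16_t raw, int32_t *permille)
{
    int32_t ua = counts_to_microamps(raw);
    if (ua < LOOP_BREAK_UA) {
        return false;              /* well below 4 mA: report a fault */
    }
    int32_t p = (ua - LOOP_ZERO_UA) * 1000 / LOOP_SPAN_UA;
    if (p < 0)    p = 0;           /* clamp small undershoot near 4 mA */
    if (p > 1000) p = 1000;        /* clamp overshoot above 20 mA */
    *permille = p;
    return true;
}
```

The widening to 64 bits and the two clamps are the overflow and underflow handling; the early return is where you would hook in whatever error reporting or shutdown your system needs.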

From comments:

Now we're getting to the stuff that's missing in the question.

Adding expense and complexity when a simpler solution meets your error budget is not optimal. But only you know your error budget; I'm not going to attempt to guess it from the question. You'll also have to justify whether analog electronics built with (what? 1%?) resistors will give you a more accurate or less accurate solution than using a 4000 step (0.025% error) ADC as a 3000 step (0.03% error) one.

One way of looking at it is that we are losing 20% of the ADC range.

From another perspective, that is trivial. Each LSB is 0.03% of the remaining scale instead of 0.025%. How much would a couple of resistors that preserve 0.025% accuracy cost? (Say, 0.01% each.)
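For the record, here is roughly where those figures come from, assuming a 160 ohm sense resistor, a 12-bit ADC, and (as a simplification) full scale sitting at the 20mA point:

$$
\frac{V_{4\,\text{mA}}}{V_{20\,\text{mA}}} = \frac{4\,\text{mA} \times 160\,\Omega}{20\,\text{mA} \times 160\,\Omega} = \frac{0.64\,\text{V}}{3.2\,\text{V}} = 20\%
$$

$$
\text{LSB}_{\text{full}} = \frac{1}{4096} \approx 0.024\%, \qquad
\text{LSB}_{\text{used}} = \frac{1}{0.8 \times 4096} \approx \frac{1}{3277} \approx 0.031\%
$$

The "4000 step" and "3000 step" numbers are just these rounded off.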

- Start with your error budget. What accuracy do you need?

- Then list the error sources and apportion the error budget between them.

- Then minimise overall cost by allocating more of the error budget to the most expensive component to reduce its cost.

If you need better than 1% absolute accuracy you can't use a 160 ohm 1% resistor and a standard 5% 3.3V regulator as the reference voltage.

And if you don't, the loss of ADC range is the least of your concerns.
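To put rough numbers on that, a first-order worst-case sketch, assuming the measured current is computed as $I = \dfrac{\text{code} \cdot V_{ref}}{N \cdot R}$ and counting only the resistor tolerance, the reference tolerance and one LSB of quantisation (I'm ignoring ADC offset, gain and linearity errors, which depend on your part):

$$
\left|\frac{\Delta I}{I}\right| \lesssim \left|\frac{\Delta R}{R}\right| + \left|\frac{\Delta V_{ref}}{V_{ref}}\right| + \text{LSB}
\approx 1\% + 5\% + 0.03\% \approx 6\%
$$

The quantisation term is two orders of magnitude smaller than the other two, so recovering the lost 20% of ADC range barely changes the total.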

My suspicion is that you'll find a direct connection from a suitable 160 ohm resistor and NO VOLTAGE DIVIDER into a 12 bit ADC with a suitably accurate Vref is the best, simplest, most accurate and cheapest solution.

Without knowing your error budget, that's just my suspicion.

And finally, from the comments:

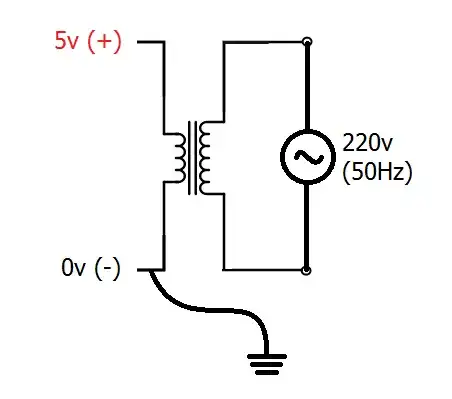

> I would be happy if I got 0.1V error. So when I have 4mA. Then I have 0.1V input to the ADC. When I got 20mA, then I got 3.2V input to the ADC

Distinguishing 3.2V (between 3.15 and 3.25) requires 50mV/3.2V or about 1.5% accuracy. You'll need to pay attention to the sense resistor accuracy and the Vref accuracy, and lip service to avoiding DC offsets.

The ADC range is practically irrelevant in comparison. In fact, adding complexity to use the full ADC range, without extraordinarily expensive close tolerance resistors, can only reduce accuracy.