Bit rate vs. baud rate has been a confusing topic for me lately. I feel like I understand the difference between the two.

But going through the definitions of baud rate, I find two different definitions in use:

- A baud rate is the number of times a signal in a communications channel changes state or varies. For example, a 2400 baud rate means that the channel can change states up to 2400 times per second. The term “change state” means that it can change from 0 to 1 or from 1 to 0 up to X (in this case, 2400) times per second. (This is the definition from my teacher's notes.)

- Baud rate is the number of symbols transmitted per second. Consider the symbol "A", which is encoded as "0100 0001" in ASCII. If I send this symbol within 1 second, I have a bit rate of 8 bits per second.
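To check the arithmetic in the second definition, here is a minimal Python sketch that encodes "A" as 8-bit ASCII and divides the number of bits by the transmission time. The 1-second duration comes from the example above. The variable names (`bits`, `duration_s`, `bit_rate_bps`) are just illustrative.

```python
# Encode the character "A" as 8-bit ASCII and compute the bit rate,
# assuming (as in the example) that the whole character is sent in 1 second.
char = "A"
bits = format(ord(char), "08b")  # ord("A") == 65 -> "01000001"

duration_s = 1.0  # assumed transmission time for the whole character
bit_rate_bps = len(bits) / duration_s  # 8 bits / 1 s

print(bits)          # 01000001
print(bit_rate_bps)  # 8.0
```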

For the baud rate: according to the first definition, the baud rate is 3 baud, since the signal changes its state three times (0 -> 1 -> 00000 -> 1).

But according to the second definition, the baud rate is 1 baud, since only one symbol ("A") is transmitted per second.
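The two counts can be reproduced with a short Python sketch. It assumes that each bit is sent as one signal level, so a "state change" is any position where a bit differs from the bit before it. Real line codes can behave differently. Following the post, it treats the whole character "A" as a single symbol. The variable names are hypothetical.

```python
# Bit pattern of "A" in 8-bit ASCII: "01000001"
bits = format(ord("A"), "08b")

# Definition 1 (state changes): count positions where a bit differs
# from the previous one, assuming one signal level per bit.
transitions = sum(1 for prev, cur in zip(bits, bits[1:]) if prev != cur)

# Definition 2 (symbols per second): the whole character counts as one symbol.
symbols = 1
duration_s = 1.0  # assumed: everything is sent within 1 second

print(transitions / duration_s)  # 3.0 state changes per second
print(symbols / duration_s)      # 1.0 symbol per second
```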

So how do these two definitions relate to each other? At "my" level of knowledge, I can't see how they match. Thank you!

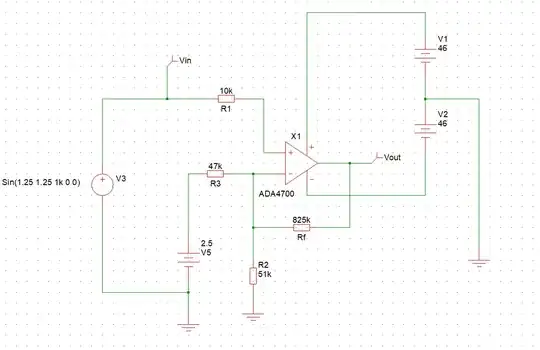

And how is the calculation in the image above done? I understand how the bit rate is calculated, but how is the baud rate calculated?