With reference to this question I asked the other day: How can I measure a voltage between two conductive surfaces that are completely isolated?

There was a good answer there, so I didn't want to continue in the comments, but it didn't solve the main issue I had, which is the 120 V potential between the isolated case and the battery terminal. To quickly reiterate, the battery pack is 350 V and there are multiple layers of insulation between the pack and the case.

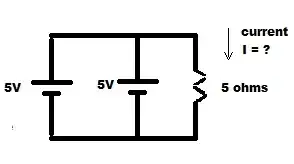

The multimeter used to measure the voltage was a Fluke 87V, which has a 10 MΩ input resistance. I've drawn the diagram below to illustrate this. I don't believe it's accurate, because this is not a pure voltage source; it is more likely a measured voltage caused by insulation leakage. Here, Rx is the unknown impedance between the terminal and the case, and R1 is the meter input impedance. In this scenario, I measure 120 V.

[Schematic created using CircuitLab]

Now, if I connect a 10 kΩ resistor across these points to see if there's any power behind this voltage, I get the following two circuits when I measure voltage and current.

The voltage reading is now 0.5 V, and with the meter in series with the 10 kΩ resistor I read 50 µA. My guess at what's happening is that the meter's high input impedance is comparable to the insulation impedance between the case and the terminal. In that case the meter itself forms a voltage divider with the insulation and has a large effect on the circuit, which produces the 120 V reading. When I connect the 10 kΩ resistor, the equivalent parallel resistance drops sharply and the voltage collapses.
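As a rough sanity check on that guess, here is a minimal sketch that treats the leakage path as a simple Thévenin source (an open-circuit voltage behind a single resistance Rx) and solves for both from the two voltage readings above. This is an assumption on my part: it takes the path to be purely resistive and linear, which may not hold for real insulation leakage or capacitive coupling. The function and variable names are only illustrative.

```python
def parallel(a, b):
    """Equivalent resistance of two resistors in parallel."""
    return a * b / (a + b)


def thevenin_from_two_loads(v1, r_load1, v2, r_load2):
    """Estimate (v_th, r_th) from voltages measured across two different loads.

    Assumes an ideal source v_th in series with r_th, so each reading obeys
    v = v_th - i * r_th, where i = v / r_load is the load current.
    """
    i1 = v1 / r_load1
    i2 = v2 / r_load2
    r_th = (v1 - v2) / (i2 - i1)
    v_th = v1 + i1 * r_th
    return v_th, r_th


R_METER = 10e6  # Fluke 87V input resistance, ohms
R_TEST = 10e3   # test resistor, ohms

# Reading 1: meter alone (120 V). Reading 2: meter in parallel with 10 kΩ (0.5 V).
v_th, r_x = thevenin_from_two_loads(120.0, R_METER, 0.5, parallel(R_TEST, R_METER))
i_short = v_th / r_x  # current the modelled source could push into a dead short

print(f"Rx ≈ {r_x / 1e6:.2f} MΩ, open-circuit voltage ≈ {v_th:.1f} V")
print(f"short-circuit current ≈ {i_short * 1e6:.1f} µA")
```

Under this model the numbers work out to an Rx of roughly 3.1 MΩ and an open-circuit voltage of about 158 V, with a short-circuit current of about 50 µA, which agrees with the current I measured through the 10 kΩ resistor. That is at least consistent with a high-impedance leakage path rather than a stiff source, but it rests entirely on the single-resistor model being valid.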

So my question is: what is actually happening here, and is the 120 V I'm reading dangerous?