I've learned that for a first-order low-pass filter (LPF), the 3 dB frequency is the one where the filter's gain is (surprise) 3 dB below the DC gain. However, an underdamped second-order LPF shows a "peak" around the cutoff frequency, which seems to throw that simple definition off...
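For reference, here is where the "3 dB" comes from in the first-order case. This assumes the usual single-pole form with unity DC gain and corner frequency $\omega_c$; the symbol $\omega_c$ is introduced here for illustration:

$$ H_1(j\omega) = \frac{1}{1 + j\omega/\omega_c}, \qquad |H_1(j\omega_c)| = \frac{1}{\sqrt{2}}, \qquad 20\log_{10}\frac{1}{\sqrt{2}} \approx -3.01\ \text{dB} $$

In other words, "3 dB below DC" is the half-power point, where the amplitude has dropped by a factor of $1/\sqrt{2}$.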

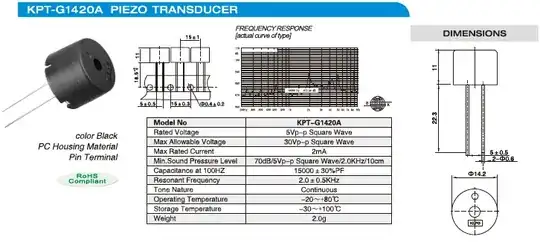

For example, take the following filter:

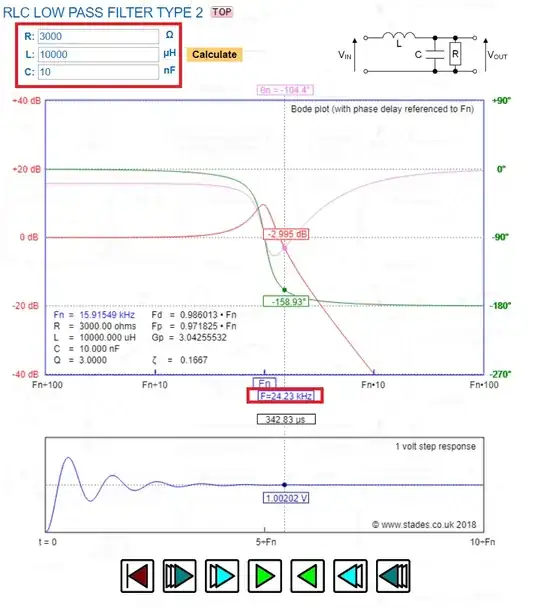

Its frequency response is:

$$ H(j\omega) = \frac{1}{1 + j\omega \frac{L}{R} -\omega ^2LC} $$
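A worked restatement may help here. Matching the denominator term by term against the standard second-order low-pass form gives the natural frequency and damping ratio. The symbols $\omega_0$, $\zeta$ and $x = \omega/\omega_0$ are introduced here and are not part of the original question:

$$ H(j\omega) = \frac{1}{1 + 2\zeta\,\dfrac{j\omega}{\omega_0} - \left(\dfrac{\omega}{\omega_0}\right)^2}, \qquad \omega_0 = \frac{1}{\sqrt{LC}}, \qquad \zeta = \frac{1}{2R}\sqrt{\frac{L}{C}} $$

$$ |H(j\omega)|^2 = \frac{1}{(1 - x^2)^2 + (2\zeta x)^2} $$

Critical damping ($\zeta = 1$) corresponds to $R = \tfrac{1}{2}\sqrt{L/C}$. For $\zeta < 1/\sqrt{2}$ the magnitude peaks above the DC gain at

$$ x_{\text{peak}} = \sqrt{1 - 2\zeta^2}, \qquad |H|_{\max} = \frac{1}{2\zeta\sqrt{1 - \zeta^2}} $$

Applying the "3 dB below the DC gain" definition ($|H|^2 = 1/2$) gives a quadratic in $x^2$ whose positive root is

$$ x_{3\,\text{dB}}^2 = 1 - 2\zeta^2 + \sqrt{(1 - 2\zeta^2)^2 + 1} $$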

Running a PSpice simulation to find the cutoff frequency gives the following result:

The gain at that frequency is a curious 6.6 dB. In contrast, with a 500 Ω resistor the system is critically damped, and the cutoff frequency found by the simulation agrees with the simple definition mentioned above.
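To compare candidate definitions numerically, here is a minimal sketch that evaluates the transfer function above and locates the frequency where the gain falls 3 dB below the DC gain. For comparison, it also locates where the gain falls 3 dB below its peak; whether either of these is what the simulator reports is not established here. The question does not give L and C, so the values below (1 mH, 1 nF) are hypothetical. They were picked only so that $\sqrt{L/C} = 1000\ \Omega$, which makes 500 Ω critically damped as described. The 2 kΩ case is likewise an arbitrary underdamped example.

```python
import math

# Hypothetical component values (not from the question): sqrt(L/C) = 1000 ohm,
# so R = 500 ohm gives zeta = 1 (critical damping).
L_H = 1e-3  # 1 mH, assumed
C_F = 1e-9  # 1 nF, assumed

# "3 dB" is really 10*log10(2) = 3.0103 dB, i.e. |H| drops by a factor of 1/sqrt(2).
HALF_POWER_DB = 10 * math.log10(2)


def gain_db(w, r, l=L_H, c=C_F):
    """Magnitude of H(jw) = 1 / (1 + jw*L/R - w^2*L*C), in dB."""
    h = 1 / complex(1 - w * w * l * c, w * l / r)
    return 20 * math.log10(abs(h))


def falling_crossing(target_db, w_lo, w_hi, r, l=L_H, c=C_F):
    """Bisect for the angular frequency where the gain falls through target_db.

    Assumes gain_db(w_lo) >= target_db >= gain_db(w_hi) and that the gain is
    monotonically decreasing on [w_lo, w_hi] (true above the resonant peak).
    """
    for _ in range(200):
        w_mid = 0.5 * (w_lo + w_hi)
        if gain_db(w_mid, r, l, c) > target_db:
            w_lo = w_mid
        else:
            w_hi = w_mid
    return 0.5 * (w_lo + w_hi)


def analyse(r, l=L_H, c=C_F):
    w0 = 1 / math.sqrt(l * c)
    zeta = math.sqrt(l / c) / (2 * r)
    # |H| peaks at w0*sqrt(1 - 2*zeta^2) if zeta < 1/sqrt(2); otherwise its maximum is at DC (w = 0).
    w_pk = w0 * math.sqrt(max(0.0, 1 - 2 * zeta ** 2))
    peak_db = gain_db(w_pk, r, l, c)
    w_hi = 100 * w0  # far above w0, where the gain is roughly -80 dB
    w_dc = falling_crossing(-HALF_POWER_DB, w_pk, w_hi, r, l, c)              # 3 dB below DC gain (0 dB)
    w_pk3 = falling_crossing(peak_db - HALF_POWER_DB, w_pk, w_hi, r, l, c)    # 3 dB below the peak
    a = 1 - 2 * zeta ** 2
    w_dc_closed = w0 * math.sqrt(a + math.sqrt(a * a + 1))                    # closed-form |H|^2 = 1/2 point
    to_hz = 1 / (2 * math.pi)
    return {
        "zeta": zeta,
        "f0_hz": w0 * to_hz,
        "f_peak_hz": w_pk * to_hz,
        "peak_db": peak_db,
        "f3db_below_dc_hz": w_dc * to_hz,
        "f3db_below_dc_closed_hz": w_dc_closed * to_hz,
        "f3db_below_peak_hz": w_pk3 * to_hz,
    }


for r in (500.0, 2000.0):
    res = analyse(r)
    print(f"R = {r:.0f} ohm: zeta = {res['zeta']:.3f}, peak = {res['peak_db']:.2f} dB, "
          f"3 dB below DC at {res['f3db_below_dc_hz'] / 1e3:.1f} kHz, "
          f"3 dB below peak at {res['f3db_below_peak_hz'] / 1e3:.1f} kHz")
```

At critical damping there is no peak, so both definitions land on the same frequency. In the underdamped case they separate, and the numeric crossing can be checked against the closed-form expression.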

How is the cutoff frequency defined in cases like this? And how does one justify this simulation result, i.e. calculate the above cutoff frequency analytically?