I’m attempting to build a spectrometer, and I’m having difficulty assessing the performance of a particular sensor. I am a novice, and other posts on this forum have helped me greatly, but I still haven’t been able to arrive at an answer that makes sense. I’d appreciate any help in identifying where I am going wrong. My goal is to assess my photon budget by determining:

- the sensor sensitivity in units of [V/((W∙s)/m^2)],

- the pixel well depth (full well depth) in [e^-], and

- ultimately the sensitivity in [photons/count].

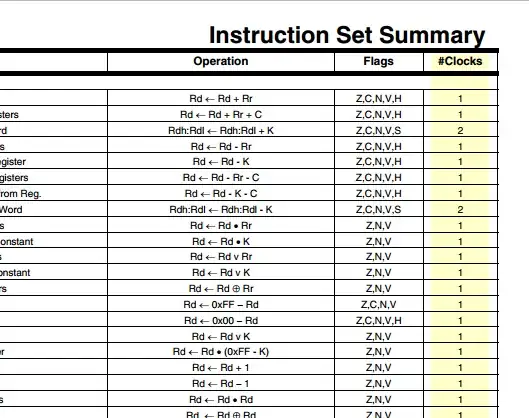

Most sensors I’ve been looking at make it easy enough to calculate these values (if I’m doing it right), but for various reasons I’m trying to make the Thorlabs CCS200 work for this application. The datasheet for its sensor, the Toshiba TCD1304DG, does not give the chip’s pixel well depth and only gives the sensor sensitivity in [V/(lx∙s)]. Another vendor, Avantes, reports the full well depth [e^-] and the sensitivity [photons/count] for this chip, but when I try to calculate these values myself I end up with wildly different answers that I have little confidence in.

Here is the path I’ve been taking (assuming the incoming photon has a wavelength λ = 600 [nm]):

Knowns for the TCD1304DG chip (from the datasheet) and the CCS200 system:

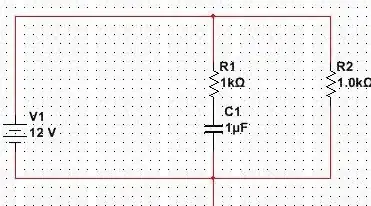

- Sensitivity: 160 [V/(lx∙s)] (page 3)

- Pixel Saturation: 0.600 [V] (page 3)

- Pixel Size: 8 × 200 [μm^2] = 1.6E-9 [m^2] (page 1)

- Quantum Efficiency: 0.96 [e^-/photon] (at 600 [nm], graph on page 10) (EDIT: as user1850479 pointed out, this is not actually the Q.E.)

- ADC Resolution: 16 [bit] (CCS200 webpage)

Attempted Conversions:

Starting with sensitivity, the conversion from lux to luminous flux per area is straightforward: [lx] = [lm/m^2]. Converting lumens to watts is much more frustrating for a physics application. Assuming a perfectly narrow band of light at λ = 600 [nm], the conversion factor is either η = 431 [lm/W] for a photopic conversion or η = 56 [lm/W] for a scotopic conversion (Luminous Efficacy Tables). I don’t know which type of conversion to use for this application (neither results in an answer consistent with the values given on the Avantes site). I’ll assume the photopic conversion for this post.

So the equivalent sensitivity is 160 [V/(lx∙s)] ∙ 431 [lm/W] = 68,960 [(V∙m^2)/J] (which is equivalent to [V/((W∙s)/m^2)]).
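As a sketch of the lux-to-radiometric step, assuming monochromatic 600 nm light and the two efficacy values quoted above (the function and constant names are hypothetical), the conversion is a single multiplication: if 1 W of this light equals η lumens, then 1 J/m^2 equals η lx∙s.

```python
# Convert a datasheet sensitivity in V/(lx*s) to V/(J/m^2) for monochromatic light.
# Assumption: 1 lx = 1 lm/m^2, and eff_lm_per_w is the luminous efficacy at the
# wavelength of interest (values below are the ones quoted in the post for 600 nm).

def sensitivity_v_per_fluence(sens_v_per_lx_s: float, eff_lm_per_w: float) -> float:
    """Return sensitivity in V/(J/m^2), i.e. V/((W*s)/m^2).

    1 J/m^2 of this light = eff_lm_per_w lm*s/m^2 = eff_lm_per_w lx*s,
    so V/(J/m^2) = V/(lx*s) * eff_lm_per_w.
    """
    return sens_v_per_lx_s * eff_lm_per_w


SENS_DATASHEET = 160.0  # V/(lx*s), TCD1304DG value used in the post
PHOTOPIC_600NM = 431.0  # lm/W, photopic efficacy at 600 nm as quoted in the post
SCOTOPIC_600NM = 56.0   # lm/W, scotopic efficacy at 600 nm as quoted in the post

for label, eff in (("photopic", PHOTOPIC_600NM), ("scotopic", SCOTOPIC_600NM)):
    print(f"{label}: {sensitivity_v_per_fluence(SENS_DATASHEET, eff):,.0f} V/(J/m^2)")
```

The photopic case reproduces the 68,960 figure; the scotopic case gives 8,960, which is why the choice of efficacy curve changes every downstream number by the same factor.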

The energy of a photon at λ = 600 [nm] is 3.31E-19 [J/photon]. Given the pixel size, the sensitivity becomes:
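The photon energy above follows from E = hc/λ. A minimal check, using the exact SI values of h and c (the helper name is hypothetical):

```python
# Photon energy E = h*c/lambda, with the exact SI (2019) values of h and c.
PLANCK_H = 6.62607015e-34       # J*s, exact by definition
SPEED_OF_LIGHT = 299_792_458.0  # m/s, exact by definition


def photon_energy_j(wavelength_m: float) -> float:
    """Energy of one photon of the given vacuum wavelength, in joules."""
    return PLANCK_H * SPEED_OF_LIGHT / wavelength_m


print(f"{photon_energy_j(600e-9):.3e} J/photon")
```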

68,960 [(V∙m^2)/J] ∙ 3.31E-19 [J/photon] ∙ 1/0.95 [photon/e^-] ∙ 1/1.6E-9 [pixel/m^2] = 1.502E-5 [(V∙pixel)/e^-]

With a saturation voltage of 0.600 [V], the full well depth becomes:

0.600 [V] ∙ 1/1.502E-5 [e^-/(V∙pixel)] = 39,947 [e^-/pixel]
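The two steps above (volts per electron, then full well) can be sketched as follows, assuming the same inputs as the hand calculation. The function names are hypothetical. Note that the hand calculation uses 0.95 [e^-/photon], while the knowns list gives 0.96; the sketch uses 0.95 so that it matches the arithmetic.

```python
# Reproduce the chain V/(J/m^2) -> V per electron on one pixel -> full-well electrons.
# Assumption: the per-pixel signal is uniform over the 8 um x 200 um pixel area.

def volts_per_electron(sens_v_per_j_m2: float, photon_energy_j: float,
                       e_per_photon: float, pixel_area_m2: float) -> float:
    """V per electron on one pixel.

    A fluence F (J/m^2) puts F*A joules on a pixel of area A, i.e. F*A/E photons
    and F*A*eta/E electrons, while producing S*F volts. Hence V/e- = S*E/(eta*A).
    """
    return sens_v_per_j_m2 * photon_energy_j / (e_per_photon * pixel_area_m2)


def full_well_electrons(saturation_v: float, v_per_e: float) -> float:
    """Electrons needed to reach the saturation voltage."""
    return saturation_v / v_per_e


pixel_area = 8e-6 * 200e-6  # m^2, 8 um x 200 um
v_per_e = volts_per_electron(68_960.0, 3.31e-19, 0.95, pixel_area)
full_well = full_well_electrons(0.600, v_per_e)
print(f"{v_per_e:.3e} V/e-, full well ~ {full_well:,.0f} e-")
```

This agrees with the hand calculation to rounding (about 1.50E-5 V/e^- and about 40,000 e^-); the small difference in the full-well figure comes from rounding the intermediate value to 1.502E-5 before dividing.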

For comparison, Avantes states the full well depth as 120,000 [e^-/pixel], so I’m off by a factor of 3.

Given an ADC with 16-bit resolution whose full scale I take to be the 0.600 [V] saturation voltage, the change in voltage per count on a given pixel is: 0.600/2^16 = 9.155E-6 [(V∙pixel)/count]

That leads me to a photon-per-count value of: 1/1.502E-5 [e^-/(V∙pixel)] ∙ 1/0.95 [photon/e^-] ∙ 9.155E-6 [(V∙pixel)/count] = 0.642 [photon/count], which doesn’t make sense. Again for comparison, Avantes states the sensitivity as 60 [photon/count], so I’m off by two orders of magnitude.
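The ADC step and the photon-per-count result can be sketched the same way. This assumes, as the calculation above does, that the 16-bit full scale maps exactly onto the 0.600 V saturation voltage; the function names are hypothetical.

```python
# Volts per ADC count, then photons per count, following the post's arithmetic.
# Assumption: ADC full scale == pixel saturation voltage (0.600 V).

def volts_per_count(full_scale_v: float, bits: int) -> float:
    """Voltage step represented by one ADC count."""
    return full_scale_v / 2**bits


def photons_per_count(v_per_count: float, v_per_e: float, e_per_photon: float) -> float:
    """photons/count = (V/count) / (V/e-) / (e-/photon)."""
    return v_per_count / v_per_e / e_per_photon


vpc = volts_per_count(0.600, 16)
ppc = photons_per_count(vpc, 1.502e-5, 0.95)
print(f"{vpc:.3e} V/count, {ppc:.3f} photons/count")
```

Keeping each conversion as its own small function makes it easy to swap in a different efficacy, ADC full-scale voltage, or e^-/photon factor and see how far the result moves toward the 60 [photon/count] figure.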

There is something fundamental here that I don’t understand. Any guidance would be greatly appreciated and could help others attempting to analyze similar photon budgets.


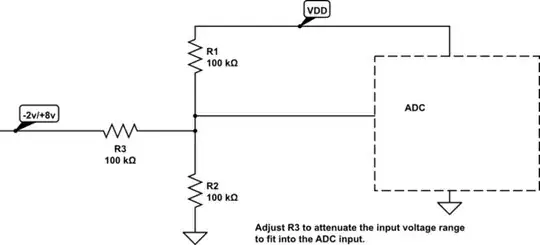

Compact CCD Spectrometer Summary Specs

Avantes Assessment of Detector Specs

EDITED: To improve readability and to include screenshots of highlights in certain links.