I am using a current-output DAC connected to a voltage reference and a transimpedance amplifier.

I know the noise characteristics of the voltage reference and the amplifier. The DAC simply lists "13 nV/rtHz output voltage noise density" in its datasheet.

How do I combine these three values to estimate the output voltage noise density of the entire system? I know the DAC must convert the voltage noise of the reference into current noise, which is then gained up by the transimpedance amplifier, but how to actually carry out this calculation is a mystery to me.

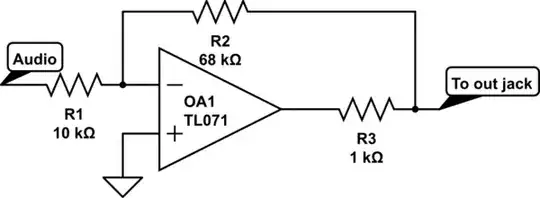

The DAC is an LTC2757. I want to use it in the typical application configuration shown here: