I saw that the Surface table (now called PixelSense) detects objects. The strange thing is that it's basically an IR screen with an LCD underneath. How does it "scan" them, then?

Here's a video http://www.youtube.com/watch?v=I0QBivma6j8

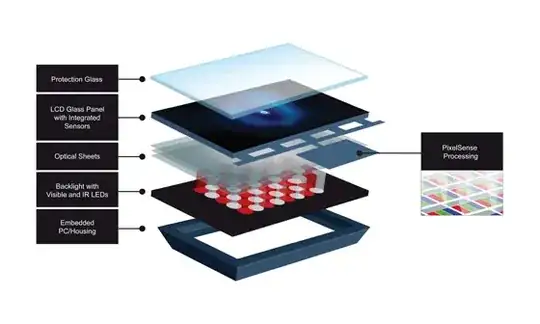

A step-by-step look at how PixelSense works:

- A contact (finger/blob/tag/object) is placed on the display

- The IR backlight unit emits light (through the optical sheets, LCD, and protection glass) that hits the contact.

- Light reflected back from the contact is seen by the integrated sensors

- Sensors convert the light signal into an electrical signal/value

- Values reported from all of the sensors are used to create a picture of what is on the display

- The picture is analyzed using image processing techniques

- The output is sent to the PC. It includes the corrected sensor image and the various contact types (fingers/blobs/tags).

Source: Microsoft PixelSense
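To make the "picture of what is on the display" and "image processing" steps more concrete, here is a minimal sketch in Python of one way a grid of sensor values could be turned into contacts. Everything here is an assumption for illustration, not Microsoft's actual pipeline: sensor values are assumed normalized to 0..255, the names `REFLECTION_THRESHOLD`, `MAX_FINGER_AREA`, `find_contacts`, `classify`, and `process_frame` are hypothetical, the image "correction" step is skipped, and tag decoding is not attempted.

```python
from collections import deque

# Hypothetical values: sensor readings assumed normalized to 0..255, and the
# finger/blob area cut-off is made up purely for illustration.
REFLECTION_THRESHOLD = 128
MAX_FINGER_AREA = 4


def find_contacts(image, threshold=REFLECTION_THRESHOLD):
    """Group bright (IR-reflecting) sensor pixels into 4-connected regions."""
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    contacts = []
    for r in range(rows):
        for c in range(cols):
            if image[r][c] < threshold or seen[r][c]:
                continue
            # Breadth-first flood fill collects one connected bright region.
            pixels = []
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] >= threshold):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            contacts.append(pixels)
    return contacts


def classify(contact, max_finger_area=MAX_FINGER_AREA):
    """Label a region by its size and report its centroid as (row, col)."""
    area = len(contact)
    cy = sum(p[0] for p in contact) / area
    cx = sum(p[1] for p in contact) / area
    kind = "finger" if area <= max_finger_area else "blob"
    return {"type": kind, "center": (cy, cx), "area": area}


def process_frame(image):
    """Sensor image in, list of classified contacts out (tags not handled)."""
    return [classify(c) for c in find_contacts(image)]


# A tiny made-up 5x6 "sensor image": one fingertip and one larger object.
sensor_image = [
    [0,   0, 0,   0,   0, 0],
    [0, 200, 0,   0,   0, 0],
    [0,   0, 0, 180, 190, 0],
    [0,   0, 0, 170, 210, 0],
    [0,   0, 0, 160,   0, 0],
]

if __name__ == "__main__":
    for contact in process_frame(sensor_image):
        print(contact)
```

Classifying by area alone is the simplest possible rule; a real system would also look at shape and intensity, and would decode tags from the pattern inside a region.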

Also, take a look at a conceptually similar (i.e., IR-camera-based) implementation by Johnny Lee (who, AFAIK, is now on the Microsoft team): Tracking Fingers with the Wii Remote

The Surface is a vision-based system: the UI you see is actually projected from below, and it uses infrared cameras below the glass to detect touches, objects, and the scanned documents you see.

http://msdn.microsoft.com/en-us/library/ee804823(v=surface.10).aspx
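One detail that camera-based tables have to deal with is that the IR camera also sees light that has nothing to do with touches (ambient IR, hotspots from the illuminators). A common technique in DIY camera-based multitouch setups is to record a reference frame of the empty table and subtract it from each live frame before thresholding. The sketch below shows that idea in Python; it is an assumption for illustration, not something the linked documentation says Surface does, and `capture_background`, `touch_mask`, and `min_delta` are hypothetical names with made-up values.

```python
def capture_background(frames):
    """Average several frames of the empty table into a per-pixel reference."""
    n = len(frames)
    rows, cols = len(frames[0]), len(frames[0][0])
    return [[sum(f[r][c] for f in frames) / n for c in range(cols)]
            for r in range(rows)]


def touch_mask(frame, background, min_delta=40):
    """Flag pixels that are noticeably brighter than the empty-table reference."""
    return [[frame[r][c] - background[r][c] >= min_delta
             for c in range(len(frame[0]))]
            for r in range(len(frame))]


# Made-up 3x3 IR frames; the center pixel is a constant hotspot, not a touch.
empty_frames = [
    [[10, 12, 10], [11, 60, 11], [10, 12, 10]],
    [[12, 10, 12], [11, 62, 11], [12, 10, 12]],
]
live_frame = [[95, 14, 11], [12, 63, 10], [11, 12, 13]]

background = capture_background(empty_frames)
mask = touch_mask(live_frame, background)
```

Only the top-left pixel (the reflection from a finger) ends up in `mask`; a fixed brightness threshold on the raw frame (say 50) would also have flagged the hotspot in the center, which is why the empty-table reference is subtracted first.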