Think about it. What exactly do you envision a "256 bit" processor being? What makes the bit-ness of a processor in the first place?

I think if no further qualifications are made, the bit-ness of a processor refers to its ALU width. This is the width of the binary number that it can handle natively in a single operation. A "32 bit" processor can therefore operate directly on values up to 32 bits wide in single instructions. Your 256 bit processor would therefore contain a very large ALU capable of adding, subtracting, ORing, ANDing, etc., 256 bit numbers in single operations. Why do you want that? What problem makes the large and expensive ALU worth having and paying for, even in those cases where the processor is only counting 100 iterations of a loop and the like?

The point is, you have to pay for the wide ALU whether you use it a lot or only a small fraction of its capabilities. To justify a 256 bit ALU, you'd have to find an important enough problem that can really benefit from manipulating 256 bit words in single instructions. While you can probably contrive a few examples, there aren't enough such problems for manufacturers to feel they will ever get a return on the significant investment required to produce such a chip. If there are niche but important (well-funded) problems that can really benefit from a wide ALU, then we would see very expensive, highly targeted processors for those applications. Their price, however, would prevent wide usage outside the narrow application they were designed for. For example, if 256 bits made certain cryptography applications possible for the military, specialized 256 bit processors costing hundreds to thousands of dollars each would probably emerge. You wouldn't put one of these in a toaster, a power supply, or even a car though.

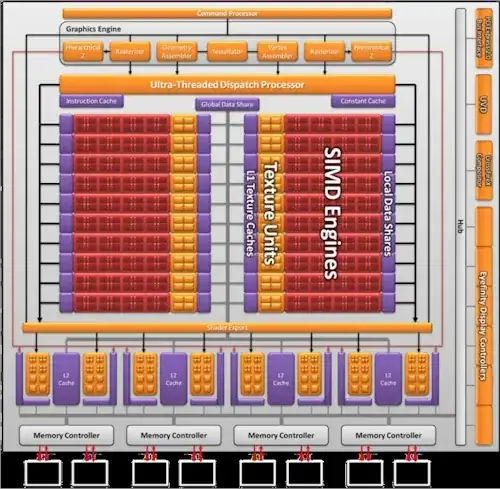

I should also be clear that the wide ALU doesn't just make the ALU itself more expensive, but other parts of the chip too. A 256 bit wide ALU also means there have to be 256 bit wide data paths. That alone would take a lot of silicon area. That data has to come from somewhere and go somewhere, so there would need to be registers, cache, other memory, etc., wide enough for the ALU to be used effectively.

Another point is that you can do any width arithmetic on any width processor. You can add a 32 bit memory word into another 32 bit memory word on a PIC 18 in 8 instructions, whereas you could do it on the same architecture scaled to 32 bits in only 2 instructions. A narrow ALU doesn't keep you from performing wide computations; it only means the wide computations take longer. It is therefore a question of speed, not capability. If you look at the spectrum of applications that need to use particular width numbers, you will see that very few require 256 bit words. The expense of accelerating just those few applications with hardware that won't help the others just isn't worth it and doesn't make a good investment for product development.
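To make the "any width on any width" point concrete, here is a minimal sketch in C of adding wide numbers one byte at a time, the way an 8 bit ALU has to. The function name `add_limbs8` and the little-endian byte layout are my own assumptions for illustration; this is not PIC 18 code, it only mimics the add, then add-with-carry pattern such a processor would use.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: add src into dst, where both are numbers stored as
 * n bytes, least significant byte first. Each loop pass does what an
 * 8 bit ALU does in one add-with-carry step. Returns the final carry (0 or 1). */
static uint8_t add_limbs8(uint8_t *dst, const uint8_t *src, size_t n)
{
    unsigned carry = 0;
    for (size_t i = 0; i < n; i++) {
        /* 8 bit + 8 bit + carry fits easily in an unsigned int */
        unsigned sum = (unsigned)dst[i] + (unsigned)src[i] + carry;
        dst[i] = (uint8_t)sum;   /* keep the low 8 bits */
        carry = sum >> 8;        /* bit 8 is the carry into the next byte */
    }
    return (uint8_t)carry;
}
```

Calling it with `n = 4` gives a 32 bit add in four byte-wide steps, and `n = 32` gives a 256 bit add in thirty-two. Nothing about the narrow ALU prevents the wide result, it just costs more steps.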