Over the years, I've come across websites and people with different opinions on the "correct" way to charge rechargeable batteries (I'm more concerned with laptop, tablet and phone batteries than with rechargeable AA or AAA batteries, if that makes a difference).

- Some (e.g. this one and some of my family members) claim that one should discharge all the way to 0% (or close to that) and charge all the way to 100% to prevent the battery from developing a "memory" (whatever that means; do they mean hysteresis?) and losing charge-storing capacity.

- Others (mainly Apple representatives and some of my family members) claim this was the case with older batteries only and newer batteries are "smarter" (whatever that means) than that and it's battery cycles that count now.

- Still others (e.g. this one, this one and this one) claim that the best way to charge a battery is to let it oscillate only between X% and Y% (with different values of X and Y depending on whom you ask, but all agree that X>0 and Y<100) because that somehow increases battery life by preventing overloading (no mention of what the lower limit is for).

None of those websites or people have ever explained why their claims are actually true, so I thought I'd ask here.

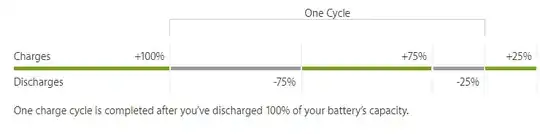

I'm aware of how battery cycles work:
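Below is a minimal sketch of my understanding of cycle counting. It assumes Apple's published definition: one charge cycle is completed once a total of 100% of the battery's capacity has been discharged, whether that happens in one go or is spread over several partial discharges. The function `count_cycles` is hypothetical and only illustrates the bookkeeping. It is not how any particular device's firmware actually tracks cycles.

```python
from typing import Iterable, Tuple


def count_cycles(discharges: Iterable[float]) -> Tuple[int, float]:
    """Hypothetical helper: add up partial discharges into charge cycles.

    Each entry is one discharge, expressed as a percentage of full capacity.
    Assumes one cycle = 100% of capacity discharged in total.
    Returns (completed cycles, percent accumulated toward the next cycle).
    """
    total = 0.0
    for d in discharges:
        if not 0 <= d <= 100:
            raise ValueError(f"discharge must be between 0 and 100%, got {d}")
        total += d
    full = int(total // 100)       # whole cycles completed so far
    return full, total - 100 * full  # leftover partial cycle


# 75% used one day, recharged, then 25% used the next day: one full cycle.
print(count_cycles([75, 25]))
# Three 60% discharges: one full cycle plus 80% toward the second.
print(count_cycles([60, 60, 60]))
```

Under this definition, topping up often does not by itself "use up" extra cycles. Only the cumulative amount discharged counts.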

I'm also aware that battery life/health decreases over time and it's just a process that can't be helped because entropy.

My own experience with battery charging and health is varied (and I haven't performed nearly enough experiments to come up with a reasonable conclusion because I only have so much money to spend on electronics and so much time to spend draining and recharging batteries):

- My laptop (which is from mid-2014) spends most of its time permanently plugged in and at 100% battery. If I ask it what its health is, it says it's at 82% health, it's completed 609 cycles and its actual charge is 96%. This probably means health is not simply a linear rescaling of the original 0%–100% range but some more complicated quantity. When I do unplug it, the battery lasts a good while (I haven't measured it and certainly haven't compared it to how long it originally lasted).

- My old phone (an iPhone 6, which came out in late 2014, so it's slightly newer than the laptop) was charged whenever I thought the battery level was lower than I wanted it to be and I knew I wouldn't be able to charge it for several hours. It often (but not always) got all the way to 100% and then remained plugged in for hours. If I ask it about its battery health, it says the maximum capacity is 73% and "[the] battery is significantly degraded". The battery now lasts very little and drains very quickly; the phone will turn off (and claim it's run out of battery) when the indicator is anywhere from 1% to 53%. However, this may be due to either age or charging habits, so it's inconclusive without more evidence.

- If necessary, I can also look up the battery health of my old tablet and of my wife's tablet and phone, and describe how they were charged.

I'd like to settle this once and for all, please, preferably with an answer that includes physics. What's the best way to charge a battery, and why is it the best way?