You seem to be mixing different presentations and different domains.

"MAC" in this context means Multiply-Accumulate; the closely related Fused Multiply-Add (FMA) is the same operation performed with a single rounding step. It's indeed the core operation for densely connected neural networks, including fully connected networks and CNNs. The other main operation is the non-linear activation applied to the result of all those MACs. But since that's often a ReLU these days, that operation is practically free (essentially one gate delay).
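To make the roles of the two operations concrete, here is a minimal sketch of a single neuron as a chain of MACs followed by a ReLU. The names `mac`, `relu` and `neuron` are illustrative only and do not come from the presentations. Note that plain Python rounds after the multiply and again after the add, so this models a MAC rather than a true fused operation.

```python
def mac(acc, a, b):
    """One multiply-accumulate step: returns acc + a * b.

    Hardware does this in a single unit; Python performs a separate
    multiply and add, so the result is rounded twice (not fused).
    """
    return acc + a * b


def relu(x):
    """ReLU activation: negative values are clamped to zero."""
    return x if x > 0 else 0.0


def neuron(inputs, weights, bias=0.0):
    """Hypothetical single neuron: one MAC per input, then one ReLU."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    acc = bias
    for x, w in zip(inputs, weights):
        acc = mac(acc, x, w)  # the bulk of the work: one MAC per weight
    return relu(acc)          # one cheap comparison per neuron
```

A neuron with N inputs needs N MACs but only one ReLU, which is why the MAC count dominates the cost of such a layer.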

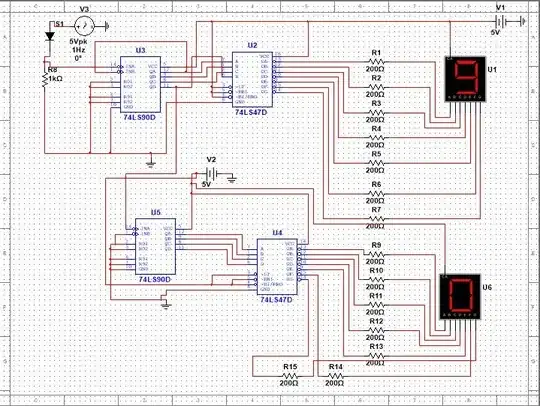

ALU (Arithmetic Logic Unit) is an outdated CPU concept. Modern CPU architectures don't have them anymore; the logical equivalent would be the FP execution units. Those are definitely not connected to memory as in the first picture; they operate on registers. Memory wouldn't be able to keep up, which would eliminate the benefit of a dedicated MAC operation.

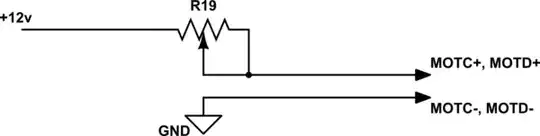

The second picture is a high-level overview of a new non-CPU architecture. This makes sense; you were discussing AI hardware and not general-purpose hardware such as CPUs.