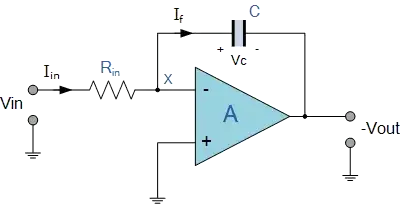

This is regarding an op-amp integrator design. The input voltage charges the integrator's feedback capacitor so that the output ramps at 150 V/s. I am trying to justify the minimum feedback capacitor value before the bias current (or anything else) becomes significant compared with the minimum input current. This is my understanding so far. The op amp I am using has a bias current of 30 pA.

$$\left|\frac{dV_{\text{out}}}{dt}\right| = \frac{i_B}{C_f} = \frac{30\,\text{pA}}{1\,\text{nF}} = 30\,\text{mV/s}$$

The maximum output voltage of the integrator is 5 V, so I have two rates to compare: 150 V/s from the signal and 30 mV/s from the bias current. How can I determine how much the bias current is influencing the output?

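The way I think of it: at 150 V/s the output reaches 5 V in 5/150 = 1/30 s, and over that same 1/30 s the bias current contributes 30 mV/s × 1/30 s = 1 mV. So the bias current causes a drift of 1 mV by the time the output reaches full scale. Is this correct? I expected it to cause worse errors than this.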
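To make my arithmetic easier to check, here is a minimal Python sketch of the same estimate. It assumes the bias current is constant and flows entirely into the feedback capacitor, and it ignores offset voltage and capacitor leakage. The variable and function names are just my own labels.

```python
# Values taken from the question; a constant bias current is an assumption.
I_BIAS = 30e-12       # op-amp input bias current, A
C_F = 1e-9            # feedback capacitor, F
SIGNAL_SLEW = 150.0   # output ramp rate due to the signal, V/s
V_OUT_MAX = 5.0       # full-scale integrator output, V


def bias_drift_rate(i_bias: float, c_f: float) -> float:
    """Output drift rate (V/s) if only the bias current charges C_f."""
    return i_bias / c_f


def ramp_time(v_max: float, slew: float) -> float:
    """Time (s) for the signal alone to ramp the output to v_max."""
    return v_max / slew


drift_rate = bias_drift_rate(I_BIAS, C_F)    # V/s
t_ramp = ramp_time(V_OUT_MAX, SIGNAL_SLEW)   # s
drift_error = drift_rate * t_ramp            # V accumulated over one ramp
relative_error = drift_error / V_OUT_MAX     # fraction of full scale

print(f"drift rate     : {drift_rate * 1e3:.1f} mV/s")
print(f"ramp time      : {t_ramp * 1e3:.1f} ms")
print(f"drift per ramp : {drift_error * 1e3:.2f} mV")
print(f"relative error : {relative_error * 100:.3f} %")
```

Since both rates scale as 1/C_f, the relative error in this simplified model is just the ratio of bias current to signal current, so changing `C_F` alone would not change it.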

The resistor at the input is 230 kΩ and the input current is about 120 nA, but I am not sure if these are factored into the error.

I found another equation for the error online, which includes the resistor.

$$\text{Error} = \text{bias current} \times R$$

Is this factored in as well?
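For scale, here is a small Python sketch that plugs my values into that second equation and also compares the bias current with the input current. It assumes the whole 30 pA flows through the 230 kΩ input resistor, which may not match how the equation is meant to be applied to my circuit. The names are my own.

```python
# Values taken from the question; applying all of I_BIAS to R_IN is an assumption.
I_BIAS = 30e-12   # op-amp input bias current, A
R_IN = 230e3      # input resistor, ohms
I_IN = 120e-9     # approximate input (signal) current, A

# Error voltage from the "bias current x R" equation.
v_error = I_BIAS * R_IN

# Bias current as a fraction of the signal current.
current_ratio = I_BIAS / I_IN

print(f"bias current x R     : {v_error * 1e6:.2f} uV")
print(f"bias / input current : {current_ratio * 100:.3f} %")
```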