I’m new to radio communication and completely ignorant of the topic. I spent some time writing software that calculates the channel capacity (in bits per second, or as a symbol rate in baud) of a line-of-sight radio link. There were several methods, and in all of them the result depended linearly on the frequency bandwidth. Bandwidth and energy (SNR) were always the main constraints on bit rate: all else being equal, if you need more speed, get more energy or more bandwidth. I can intuitively understand why more energy helps, but why more bandwidth?
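One standard formula behind such capacity calculations is the Shannon-Hartley theorem, $C = B \log_2(1 + S/N)$. The minimal Python sketch below assumes an additive white Gaussian noise channel and an SNR held fixed while $B$ changes; under that assumption capacity scales linearly with bandwidth. (In a real link the noise power grows with bandwidth, so the SNR usually drops as $B$ widens.) The function names are hypothetical.

```python
import math

def db_to_linear(snr_db: float) -> float:
    """Convert an SNR in decibels to a linear power ratio."""
    return 10.0 ** (snr_db / 10.0)

def shannon_capacity(bandwidth_hz: float, snr_linear: float) -> float:
    """Shannon-Hartley capacity in bits/s, assuming an AWGN channel."""
    return bandwidth_hz * math.log2(1.0 + snr_linear)

if __name__ == "__main__":
    snr = db_to_linear(20.0)  # assumed SNR of 20 dB, held constant
    for b in (1e6, 10e6, 20e6):
        c = shannon_capacity(b, snr)
        print(f"B = {b / 1e6:4.0f} MHz -> C = {c / 1e6:.2f} Mbit/s")
```

Doubling $B$ at constant SNR doubles $C$, while doubling the SNR only adds roughly one bit per second per hertz, which is why bandwidth appears as the linear factor.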

What I understand about digital modulation is that we change some parameter (or parameters) once per fixed time interval (a timeframe) and thus transmit one symbol per timeframe. The parameters that vary are usually amplitude, phase, and frequency (ASK, PSK, FSK, APSK, and so on). More distinguishable states give more bits per symbol; this part is clear too. I picture it as Morse code or signalling with a flashlight. In both, the faster you change the parameter, the faster the information flows: I can click my flashlight more vigorously and thus transmit faster, provided the receiver can keep up with me. I would expect the constraints to come from the technical characteristics of the equipment, for example the receiver's response speed (how fast it can register the signal's state) and how fast the transmitter can switch between those states.
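The relation between the number of states and the bit rate can be sketched in a few lines of Python: a symbol drawn from $M$ distinguishable states carries $\log_2 M$ bits, so the bit rate is the symbol rate times $\log_2 M$. The function names and the 1 Mbaud symbol rate are illustrative assumptions.

```python
import math

def bits_per_symbol(num_states: int) -> float:
    """Bits carried by one symbol chosen from num_states distinguishable states."""
    if num_states < 2:
        raise ValueError("need at least two distinguishable states")
    return math.log2(num_states)

def bit_rate(symbol_rate_baud: float, num_states: int) -> float:
    """Bit rate in bits/s = symbol rate (baud) x bits per symbol."""
    return symbol_rate_baud * bits_per_symbol(num_states)

if __name__ == "__main__":
    # M = 2 is the flashlight case (on/off); larger M stands in for
    # multi-level schemes such as 4-PSK or 16-QAM.
    for m in (2, 4, 16, 64):
        print(f"M = {m:2d}: {bits_per_symbol(m):.0f} bits/symbol, "
              f"{bit_rate(1e6, m) / 1e6:.0f} Mbit/s at 1 Mbaud")
```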

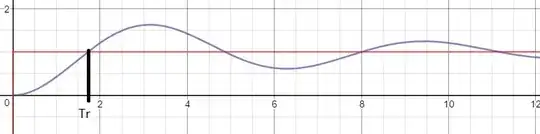

But here it states that “Nyquist determined that the number of independent pulses that could be put through a telegraph channel per unit time is limited to twice the bandwidth of the channel. In symbols, $$f_p \le 2B$$ where $f_p$ is the pulse frequency (in pulses per second) and $B$ is the bandwidth (in hertz)”. So, according to the Nyquist rate, we can't pulse faster than 20,000,000 times per second with a 10 MHz bandwidth. How come? What's the physics behind this?
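The arithmetic in the question can be checked with a short Python sketch. Combining the pulse limit $f_p \le 2B$ with $\log_2 M$ bits per pulse gives $2B \log_2 M$, the noiseless-channel bit rate often called the Nyquist bit rate; it says nothing about noise, which is what the Shannon-Hartley limit accounts for. The function names are hypothetical.

```python
import math

def max_pulse_rate(bandwidth_hz: float) -> float:
    """Nyquist signalling limit: at most 2B independent pulses per second."""
    return 2.0 * bandwidth_hz

def nyquist_bit_rate(bandwidth_hz: float, num_levels: int) -> float:
    """Noiseless bit-rate bound: 2B pulses/s, each carrying log2(M) bits."""
    return max_pulse_rate(bandwidth_hz) * math.log2(num_levels)

if __name__ == "__main__":
    b = 10e6  # the 10 MHz bandwidth from the question
    print(f"max pulse rate: {max_pulse_rate(b):,.0f} pulses/s")
    for m in (2, 4, 16):
        print(f"M = {m:2d}: up to {nyquist_bit_rate(b, m) / 1e6:.0f} Mbit/s (noiseless)")
```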

Thank you very much in advance! Pointers to relevant literature would also be welcome.