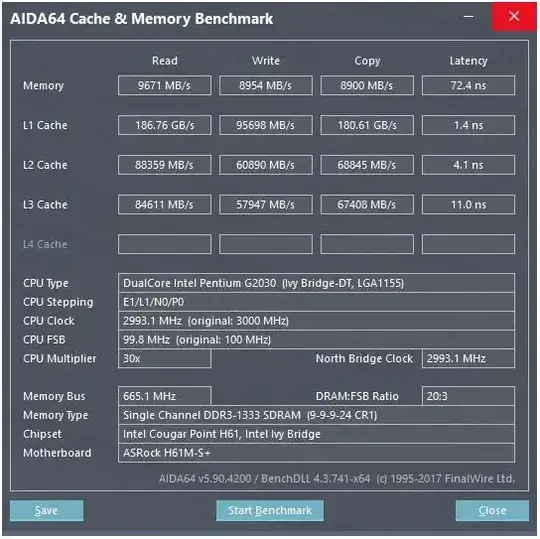

@peufeu's answer points out that these are system-wide aggregate bandwidths. L1 and L2 are private per-core caches in Intel Sandybridge-family, so the numbers are 2x what a single core can do. But that still leaves us with an impressively high bandwidth, and low latency.

L1D cache is built right into the CPU core, and is very tightly coupled with the load execution units (and the store buffer). Similarly, the L1I cache is right next to the instruction fetch/decode part of the core. (I actually haven't looked at a Sandybridge silicon floorplan, so this might not be literally true. The issue/rename part of the front-end is probably closer to the "L0" decoded uop cache, which saves power and has better bandwidth than the decoders.)

But with L1 cache, even if we could read at every cycle ...

Why stop there? Intel since Sandybridge and AMD since K8 can execute 2 loads per cycle. Multi-port caches and TLBs are a thing.

David Kanter's Sandybridge microarchitecture write-up has a nice diagram (which applies to your IvyBridge CPU as well):

(The "unified scheduler" holds ALU and memory uops waiting for their inputs to be ready, and/or waiting for their execution port. For example, vmovdqa ymm0, [rdi] decodes to a load uop that has to wait for rdi if a previous add rdi,32 hasn't executed yet. Intel schedules uops to ports at issue/rename time. This diagram only shows the execution ports for memory uops, but un-executed ALU uops compete for scheduler space, too. The issue/rename stage adds uops to the ROB and scheduler. They stay in the ROB until retirement, but in the scheduler only until dispatch to an execution port. This is Intel terminology; other people use issue and dispatch differently. AMD uses separate schedulers for integer / FP, but addressing modes always use integer registers.)

As that shows, there are only 2 AGU ports (address-generation units, which take an addressing mode like [rdi + rdx*4 + 1024] and produce a linear address). It can execute 2 memory ops per clock (of 128b / 16 bytes each), up to one of them being a store.
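As a rough illustration of what an AGU computes, here is a minimal sketch of x86-style address generation. The function name and the register values are hypothetical; only the base + index*scale + displacement form comes from the addressing mode above.

```python
MASK64 = (1 << 64) - 1  # addresses wrap around at 64 bits

def effective_address(base, index=0, scale=1, disp=0):
    """Model [base + index*scale + disp] the way an AGU would add it up."""
    if scale not in (1, 2, 4, 8):
        raise ValueError("x86 scale factor must be 1, 2, 4, or 8")
    return (base + index * scale + disp) & MASK64

# [rdi + rdx*4 + 1024] with made-up register values rdi=0x7FFF0000, rdx=3
addr = effective_address(base=0x7FFF0000, index=3, scale=4, disp=1024)
print(hex(addr))  # 0x7fff040c
```

The result is only a linear (virtual) address; translation to a physical address is a separate step.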

But it has a trick up its sleeve: SnB/IvB run 256b AVX loads/stores as a single uop that takes 2 cycles in a load/store port, but only needs the AGU in the first cycle. That lets a store-address uop run on the AGU on port 2/3 during that second cycle without losing any load throughput. So with AVX (which Intel Pentium/Celeron CPUs don't support :/), SnB/IvB can (in theory) sustain 2 loads and 1 store per cycle.

Your IvyBridge CPU is the die-shrink of Sandybridge (with some microarchitectural improvements, like mov-elimination, ERMSB (memcpy/memset), and next-page hardware prefetching). The generation after that (Haswell) doubled per-clock L1D bandwidth by widening the data paths from execution units to L1 from 128b to 256b so AVX 256b loads can sustain 2 per clock. It also added an extra store-AGU port for simple addressing modes.

Haswell/Skylake's peak throughput is 96 bytes loaded + stored per clock, but Intel's optimization manual suggests that Skylake's sustained average throughput (still assuming no L1D or TLB misses) is ~81B per cycle. (A scalar integer loop can sustain 2 loads + 1 store per clock according to my testing on SKL, executing 7 (unfused-domain) uops per clock from 4 fused-domain uops. But it slows down somewhat with 64-bit operands instead of 32-bit, so apparently there's some microarchitectural resource limit and it's not just an issue of scheduling store-address uops to port 2/3 and stealing cycles from loads.)
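The peak numbers are just ops per clock times data-path width. A minimal sketch of that arithmetic, assuming the figures quoted above (2 loads + 1 store per clock, 16-byte paths on SnB/IvB and 32-byte paths on Haswell/Skylake); the function name is made up for illustration:

```python
def peak_l1d_bytes_per_clock(loads_per_clock, stores_per_clock, width_bytes):
    """Peak bytes moved between the core and L1D per clock cycle."""
    return (loads_per_clock + stores_per_clock) * width_bytes

snb_peak = peak_l1d_bytes_per_clock(2, 1, 16)  # 128b data paths
hsw_peak = peak_l1d_bytes_per_clock(2, 1, 32)  # 256b data paths

print(snb_peak)       # 48
print(hsw_peak)       # 96
print(81 / hsw_peak)  # 0.84375, the ~81B sustained figure as a fraction of peak
```

This only gives the theoretical peak; the sustained figure has to come from measurement or from the vendor's manual.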

How do we calculate the throughput of a cache from its parameters?

You can't, unless the parameters include practical throughput numbers. As noted above, even Skylake's L1D can't quite keep up with its load/store execution units for 256b vectors. Although it's close, and it can for 32-bit integers. (It wouldn't make sense to have more load units than the cache had read ports, or vice versa. You'd just leave out hardware that could never be fully utilized. Note that L1D might have extra ports to send/receive lines to/from other cores, as well as for reads/writes from within the core.)

Just looking at data bus widths and clocks doesn't give you the whole story.

L2 and L3 (and memory) bandwidth can be limited by the number of outstanding misses that L1 or L2 can track. Bandwidth can't exceed max_concurrency / latency (Little's law), and chips with higher latency L3 (like a many-core Xeon) have much less single-core L3 bandwidth than a dual/quad core CPU of the same microarchitecture. See the "latency-bound platforms" section of this SO answer. Sandybridge-family CPUs have 10 line-fill buffers to track L1D misses (also used by NT stores).

(The aggregate L3/memory bandwidth with many cores active is huge on a big Xeon, but single-threaded code sees worse bandwidth than on a quad core at the same clock speed because more cores means more stops on the ring bus, and thus higher latency L3.)
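The concurrency limit follows from Little's law: outstanding requests = latency × throughput. A minimal sketch, using the 10 line-fill buffers and 64-byte lines mentioned here; the two latency values are hypothetical, picked only to show the trend, not measurements of any real chip:

```python
def max_bandwidth_gb_per_s(outstanding_lines, line_bytes, latency_ns):
    """Upper bound on single-core bandwidth when limited by miss concurrency.

    Bytes per nanosecond is numerically equal to GB/s (1e9 bytes per second).
    """
    return outstanding_lines * line_bytes / latency_ns

LFBS = 10        # line-fill buffers on Sandybridge-family
LINE_BYTES = 64  # cache line size

# Hypothetical latencies: a lower-latency client chip vs a many-core Xeon
print(max_bandwidth_gb_per_s(LFBS, LINE_BYTES, 40.0))  # 16.0
print(max_bandwidth_gb_per_s(LFBS, LINE_BYTES, 80.0))  # 8.0
```

Doubling the latency halves the bound, which is why a single thread on a big Xeon can see less bandwidth than on a quad-core desktop. Hardware prefetch into L2 can raise the effective concurrency, so treat this as a simplified model.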

Cache latency

How is such a speed even achieved?

The 4 cycle load-use latency of L1D cache is impressive, but only applies to the special case of pointer chasing (when it's most important). In other cases it's 5 cycles, which is still impressive considering that it has to start with an addressing mode like [rsi + rdi * 4 + 32], so it has to do address-generation before it even has a virtual address. Then it has to translate that to physical to check the cache tags for a match.

(See "Is there a penalty when base+offset is in a different page than the base?" for more about the [base + 0-2047] special case when the base reg comes from a previous load; it seems Intel optimistically probes the TLB based on the base address in parallel with the addition, and has to retry the uop in the load port if it doesn't work out. Great for list / tree nodes with pointers early in the node.

See also Intel's optimization manual, Sandybridge section 2.3.5.2 L1 DCache. This also assumes no segment override, and a segment base address of 0, which is normal; those could make it worse than 5 cycles)

The load port also has to probe the store buffer to see if the load overlaps with any earlier stores. And it has to figure this out even if an earlier (in program order) store-address uop hasn't executed yet, so the store-address isn't known (in that case it's dynamically predicted; mispredicts cause memory-order pipeline nukes). But presumably this can happen in parallel with checking for an L1D hit. If it turns out the L1D data wasn't needed because store-forwarding can provide the data from the store buffer, then that's no loss.
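To make the store-buffer probe concrete, here is a toy model. It is only a sketch: the data layout, function name, and result labels are my own assumptions, every store address is assumed to be already known, and real hardware also has to predict around unknown store addresses and handle partial overlaps in ways this ignores.

```python
def probe_store_buffer(store_buffer, load_addr, load_size):
    """Search earlier stores, youngest first, for data the load needs.

    store_buffer: list of (address, data_bytes) tuples, oldest store first.
    Returns (status, data), where data is only set when forwarding works.
    """
    load_end = load_addr + load_size
    for st_addr, st_data in reversed(store_buffer):
        st_end = st_addr + len(st_data)
        if st_addr <= load_addr and load_end <= st_end:
            # The store fully covers the load: forward from the store buffer.
            off = load_addr - st_addr
            return "forward", st_data[off:off + load_size]
        if load_addr < st_end and st_addr < load_end:
            # Partial overlap: this toy model just reports it.
            return "partial-overlap", None
    return "no-overlap", None  # the load takes its data from L1D

# Hypothetical buffer contents: an 8-byte store, then a younger 2-byte store
buf = [(0x100, bytes([1, 2, 3, 4, 5, 6, 7, 8])), (0x200, bytes([0xAA, 0xBB]))]
print(probe_store_buffer(buf, 0x102, 4))  # ('forward', b'\x03\x04\x05\x06')
```

In this model the L1D access and the probe are independent, which matches the idea that both can be started in parallel and the L1D result discarded if forwarding wins.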

Intel uses VIPT (Virtually Indexed Physically Tagged) caches like almost everyone else, using the standard trick of having the cache small enough and with high enough associativity that it behaves like a PIPT cache (no aliasing) with the speed of VIPT (can index in parallel with the TLB virtual->physical lookup).

Intel's L1 caches are 32kiB, 8-way associative. The page size is 4kiB.

This means the "index" bits (which select which set of 8 ways can cache any given line) all fall within the page-offset bits; i.e. those address bits are the offset into a page, and are always the same in the virtual and physical address.
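The arithmetic behind that can be checked with a short sketch. The function name is hypothetical; the 32kiB / 8-way / 4kiB-page parameters are the ones given above, and the 64-byte line size is the usual x86 value.

```python
def vipt_index_bits(cache_bytes, ways, line_bytes, page_bytes):
    """Return (sets, lowest index bit, highest index bit, alias_free).

    alias_free is True when every index bit lies inside the page offset,
    so indexing with the virtual address picks the same set as the
    physical address would. All sizes are assumed to be powers of two.
    """
    sets = cache_bytes // (ways * line_bytes)
    line_offset_bits = line_bytes.bit_length() - 1   # log2(line size)
    index_bits = sets.bit_length() - 1               # log2(number of sets)
    page_offset_bits = page_bytes.bit_length() - 1   # log2(page size)
    low = line_offset_bits
    high = line_offset_bits + index_bits - 1
    return sets, low, high, high < page_offset_bits

print(vipt_index_bits(32 * 1024, 8, 64, 4096))  # (64, 6, 11, True)
print(vipt_index_bits(64 * 1024, 8, 64, 4096))  # (128, 6, 12, False)
```

With 64 sets, the index is address bits 6 to 11, all below bit 12 where the page number starts. Doubling the size at the same associativity would push one index bit into the translated part of the address.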

For more about that, and about why small / fast caches are useful/possible (and work well when paired with larger slower caches), see my answer on why is L1D smaller/faster than L2.

Small caches can do things that would be too power-expensive in larger caches, like fetch the data arrays from a set at the same time as fetching tags. So once a comparator finds which tag matches, it just has to mux one of the eight 64-byte cache lines that were already fetched from SRAM.

(It's not really that simple: Sandybridge / Ivybridge use a banked L1D cache, with eight banks of 16-byte chunks. You can get cache-bank conflicts if two accesses to the same bank in different cache lines try to execute in the same cycle. With 8 banks, this can happen with addresses a multiple of 128 bytes apart, i.e. 2 cache lines.)
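A simplified model of that bank-conflict rule, taking the eight 16-byte banks described here at face value and assuming each access fits inside a single 16-byte chunk (names are made up for illustration):

```python
BANK_BYTES = 16   # each bank holds a 16-byte chunk
NUM_BANKS = 8     # 8 banks * 16 bytes = 128 bytes before banks repeat
LINE_BYTES = 64

def bank(addr):
    """Bank selected by the address bits just above the 16-byte offset."""
    return (addr // BANK_BYTES) % NUM_BANKS

def cache_line(addr):
    return addr // LINE_BYTES

def bank_conflict(addr_a, addr_b):
    """True if two same-cycle accesses hit the same bank in different lines."""
    return bank(addr_a) == bank(addr_b) and cache_line(addr_a) != cache_line(addr_b)

print(bank_conflict(0x1000, 0x1080))  # True: 128 bytes apart
print(bank_conflict(0x1000, 0x1040))  # False: adjacent lines, different banks
print(bank_conflict(0x1000, 0x1008))  # False: same bank, same cache line
```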

IvyBridge also has no penalty for unaligned access as long as it doesn't cross a 64B cache-line boundary. I guess it figures out which bank(s) to fetch based on the low address bits, and sets up whatever shifting will need to happen to get the correct 1 to 16 bytes of data.

On cache-line splits, it's still only a single uop, but does multiple cache accesses. The penalty is still small, except on 4k-splits. Skylake makes even 4k splits fairly cheap, with latency about 11 cycles, same as a normal cache-line split with a complex addressing mode. But 4k-split throughput is significantly worse than for a cache-line split that doesn't cross a page boundary.
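Whether a given access is a split depends only on its address and size. A minimal sketch, assuming 64-byte lines and 4kiB pages (the function name and labels are my own):

```python
LINE_BYTES = 64
PAGE_BYTES = 4096

def classify_access(addr, size):
    """Classify a load/store by the boundaries it crosses."""
    if (addr % PAGE_BYTES) + size > PAGE_BYTES:
        return "4k-split"          # also crosses a cache-line boundary
    if (addr % LINE_BYTES) + size > LINE_BYTES:
        return "cache-line split"
    return "no split"

print(classify_access(0x1030, 16))  # no split: ends exactly at the line boundary
print(classify_access(0x103C, 8))   # cache-line split
print(classify_access(0x1FFC, 8))   # 4k-split
```

A check like this is handy for picking test addresses when you want to measure each case separately.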

Sources: