I want to sample an AC signal in the 5 kHz to 20 kHz range with the STM32L432KC microcontroller. This microcontroller has a hardware oversampling feature, i.e. it can average several ADC samples without CPU overhead, which increases the effective number of bits.

The ADC runs at full speed (5.33 MS/s) in oversampling mode. With an oversampling ratio of 64x I should get 15 bits of resolution at 83 kS/s, even though the ADC itself is only 12 bits.
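As a sanity check on these numbers, here is a small Python sketch of the arithmetic. It assumes an 80 MHz ADC clock with 2.5 sampling cycles plus 12.5 conversion cycles per sample (15 cycles, which gives the 5.33 MS/s figure), and models the oversampler as accumulate-then-right-shift. The one-extra-bit-per-factor-of-4 rule only holds if the input carries enough uncorrelated noise (roughly 1 LSB or more) to dither the quantizer.

```python
def oversampling_summary(adc_clk_hz, cycles_per_conversion, ratio, adc_bits):
    """Return (ADC rate, output rate, accumulator bits, right shift, result bits).

    Models an accumulate-and-shift oversampler. Assumes `ratio` is a power
    of two and that input noise is large enough for averaging to add
    resolution (one extra bit per factor of 4 in the ratio).
    """
    if ratio < 1 or ratio & (ratio - 1):
        raise ValueError("ratio must be a power of two")
    n = ratio.bit_length() - 1            # ratio == 2**n
    fs_adc = adc_clk_hz / cycles_per_conversion
    out_rate = fs_adc / ratio             # one result per `ratio` conversions
    sum_bits = adc_bits + n               # width of the raw sum of `ratio` samples
    extra_bits = n // 2                   # log4(ratio), rounded down
    shift = n - extra_bits                # shift that keeps adc_bits + extra_bits
    return fs_adc, out_rate, sum_bits, shift, adc_bits + extra_bits


# Assumed configuration: 80 MHz ADC clock, 2.5 + 12.5 = 15 cycles per conversion.
fs, out, acc, sh, bits = oversampling_summary(80e6, 15, 64, 12)
print(f"ADC rate {fs/1e6:.2f} MS/s, output {out/1e3:.1f} kS/s")
print(f"{acc}-bit sum, shift right by {sh} -> {bits}-bit result")
```

On the STM32L4 the ratio and shift correspond to the OVSR and OVSS fields of ADC_CFGR2.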

My questions about designing the ADC driver are:

Should I design the ADC driver to handle the full 5.33 MS/s sampling rate even though my signal is only 20 kHz? I ask because the ADC is a SAR type, and its internal sampling capacitor must charge fast enough during each sampling window.
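To put a number on "fast enough", here is a rough Python estimate of the settling requirement. It assumes a single-pole RC model in which the sampling capacitor must settle from a full-scale step to within 1/2 LSB during the sampling window, which takes at least (N+1)·ln 2 time constants. The 80 MHz ADC clock, the 12.5-cycle conversion time and the sampling-time settings listed are assumptions for illustration; the model ignores any external charge-reservoir capacitor, so it is pessimistic.

```python
import math

ADC_CLK_HZ = 80e6          # assumed ADC clock
CONVERSION_CYCLES = 12.5   # assumed 12-bit conversion time in ADC clock cycles


def settling_budget(sample_cycles, bits, adc_clk_hz=ADC_CLK_HZ):
    """Sampling window, max RC time constant and min -3 dB bandwidth.

    Single-pole model: error after t is FS*exp(-t/tau); require it to be
    at most 1/2 LSB = FS / 2**(bits + 1).
    """
    t_s = sample_cycles / adc_clk_hz
    n_tau = (bits + 1) * math.log(2)       # time constants needed
    tau_max = t_s / n_tau
    f3db_min = 1 / (2 * math.pi * tau_max)
    return t_s, tau_max, f3db_min


for cycles in (2.5, 12.5, 24.5):
    t_s, tau, f3db = settling_budget(cycles, bits=12)
    fs = ADC_CLK_HZ / (cycles + CONVERSION_CYCLES)
    print(f"{cycles:5} cycles: window {t_s*1e9:6.2f} ns, tau <= {tau*1e9:5.2f} ns, "
          f"bandwidth >= {f3db/1e6:5.1f} MHz, {fs/1e6:.2f} MS/s")
```

Lengthening the sampling time relaxes the driver requirement roughly in proportion, at the cost of a lower conversion rate and therefore a lower oversampled output rate.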

Should I design the ADC driver for 15-bit accuracy even though the ADC only has 12 bits?
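Under a single-pole, full-scale-step settling assumption (settle to within 1/2 LSB), the extra margin for a 15-bit target instead of 12 bits can be estimated as below. This is an illustrative sketch only; the 31.25 ns window assumes the shortest sampling time (2.5 cycles) at an assumed 80 MHz ADC clock.

```python
import math

T_SAMPLE = 2.5 / 80e6  # assumed sampling window: 2.5 cycles at 80 MHz


def time_constants_needed(bits):
    """Time constants for a full-scale step to settle within 1/2 LSB."""
    return (bits + 1) * math.log(2)


for bits in (12, 15):
    n_tau = time_constants_needed(bits)
    print(f"{bits} bits: {n_tau:.2f} tau, tau <= {T_SAMPLE / n_tau * 1e9:.2f} ns")

ratio = time_constants_needed(15) / time_constants_needed(12)
print(f"15-bit target needs {ratio:.2f}x faster settling than 12-bit")
```

The difference is only about 23 % in required speed, because each extra bit adds a fixed ln 2 ≈ 0.69 time constants.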

EDIT:

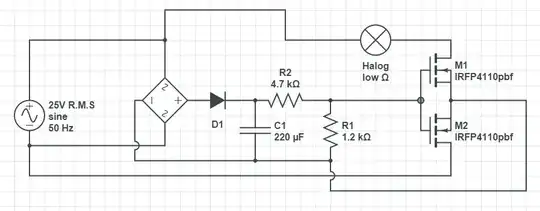

I attached a snippet from the datasheet and a schematic of an ADC driver. My main question is how fast the ADC driver needs to be, given that the signal changes much more slowly than the ADC samples.
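To quantify how slowly the signal moves relative to the conversions, here is a short Python estimate of the largest change of a full-scale sine between two consecutive conversions, using its peak slope 2πfA with A = FS/2. The 5.33 MS/s rate (80 MHz / 15 cycles) is carried over as an assumption, and the result is an upper bound because it uses the peak slope.

```python
import math

FS_ADC = 80e6 / 15   # assumed conversion rate, about 5.33 MS/s


def max_step_lsb(f_signal_hz, fs_adc_hz=FS_ADC, adc_bits=12):
    """Upper bound on the change of a full-scale sine between conversions, in LSB.

    Full-scale sine: A = FS/2, so the peak slope is 2*pi*f*A = pi*f*FS and
    the change over one period 1/fs is at most pi*f/fs of full scale.
    """
    return math.pi * f_signal_hz / fs_adc_hz * 2 ** adc_bits


for f in (5e3, 20e3):
    print(f"{f/1e3:4.0f} kHz: at most {max_step_lsb(f):.1f} LSB per conversion")
```

This compares the signal's own movement with the per-conversion timescale; it says nothing about the charge drawn by the sampling capacitor itself, which is a separate load on the driver.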