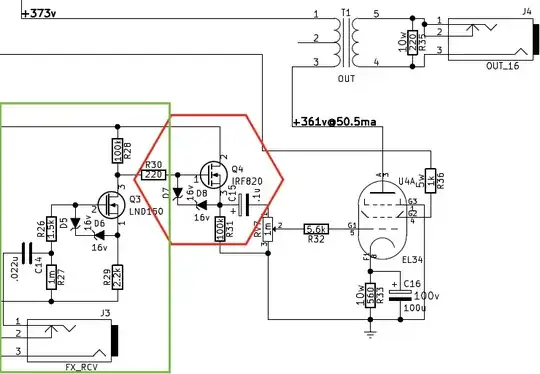

I'm learning as I go once again. Consider this output stage segment from a guitar amplifier I physically built:

The LND150 is a "depletion mode" MOSFET, whereas the IRF820 is a normal "enhancement mode" MOSFET. Their drains are connected to a 360 VDC source (not shown).

The green section amplifies the line-level input from FX_RCV to ~30 VAC. This is often called an "effects loop recovery" stage.

The red section forms a solid-state "DC-coupled cathode follower" so that it can supply large amounts of current to the output stage. This helps reduce the tonality changes from using the RV7 master volume.

Guitar amplifiers sound incredible when the output stage is overdriven. There are several limits to how far you can push a stage, though, and one of them is blocking distortion. In this circuit, blocking distortion can be produced by the C15 capacitor discharging.
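As I understand it, how long a stage stays "blocked" is set roughly by the RC time constant of the coupling capacitor and whatever resistance it has to discharge through. Here is a minimal sketch of that arithmetic. The component values are hypothetical placeholders, not the ones on my schematic, and the function name is just something I made up for illustration:

```python
import math

def recovery_time(r_ohms, c_farads, fraction_remaining=0.05):
    """Time for an RC discharge to decay to `fraction_remaining`
    of its initial excess voltage: t = -R * C * ln(fraction)."""
    tau = r_ohms * c_farads
    return -tau * math.log(fraction_remaining)

# Hypothetical values, for illustration only (not taken from the schematic).
r_discharge = 220e3   # ohms, assumed discharge path resistance
c_coupling = 22e-9    # farads, assumed coupling capacitor value

tau = r_discharge * c_coupling
print(f"time constant: {tau * 1e3:.2f} ms")
print(f"time to decay to 5%: {recovery_time(r_discharge, c_coupling) * 1e3:.2f} ms")
```

A larger capacitor or a larger discharge resistance means a longer recovery, which is part of why I would like to remove the coupling capacitor altogether.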

I'd like to eliminate the red section. Then, I'd like to have a transistor (or a pair of transistors) directly drive the grid of the output tube, without needing coupling capacitors.

I'm pretty new at analog design, and I'm asking this question because I don't know what I don't know! If I can get a bump in the right direction, that'd be very useful.

Thank you very much!

EDIT: The red section is actually in the amplifier to try to -prevent- blocking distortion, but eliminating the cap completely would be the ideal solution!

EDIT: sstobbe pointed out I got my terminology messed up on enhancement/depletion mode