Today, electronic systems use constant-voltage power sources. Current consumption varies as power consumption varies. Why can't electronic systems be designed the other way round, using a constant-current power source and varying the voltage as power consumption varies? What are the pros and cons of each approach? Why have electronic systems evolved to use constant-voltage power sources?

-

Voltage and time are only indirectly related, whereas current and time are directly related. – Ignacio Vazquez-Abrams Apr 09 '17 at 12:39

-

Most circuits require a varying amount of current (e.g. an audio amp). It is much easier to supply a voltage and then take just the current required than to supply a current (which would have to equal the maximum value required) and then dump the excess current as wasted power. Besides, most sources of power are 'constant' voltage, e.g. a battery. – JIm Dearden Apr 09 '17 at 12:41

-

This is very closely related to your http://electronics.stackexchange.com/questions/298564/why-do-leds-require-a-constant-current-source-and-not-a-constant-voltage-source question - please don't ask a succession of similar questions. – TonyM Apr 09 '17 at 12:55

-

Work through the examples yourself and it becomes clear enough. A CCS outlet must be shorted when unused or the voltage will rise to infinity (or system max). Multiple devices on a single output must be placed in series. Power will be lost in the feed circuits at all times at the same level regardless of load, due to I^2R losses. If voltage is limited for safety or other reasons, the constant current must be increased for higher-power circuits. While available outlet max power rises linearly with I, constant losses rise with I^2. | Overall it IS possible but gets nasty. – Russell McMahon Apr 09 '17 at 14:10

-

@IgnacioVazquez-Abrams voltage and time are just as directly related as current and time if you work fully through to the dual system, i.e. inductance for capacitance: inductors charged with current are current sources and have a maximum volt-second product, just as capacitors charged with voltage are voltage sources and have a maximum current-second product. – Neil_UK Apr 09 '17 at 15:10

-

For most practical electronic circuits, "changing the supply voltage" has the same effect as "injecting an unwanted signal into the circuit". So for any "circuit" more complicated than a fixed resistor, this is unlikely to work at all. – alephzero Apr 09 '17 at 16:17

-

Then all loads sharing the same power supply need to be connected in series? – user3528438 Apr 09 '17 at 18:18

-

Why don't plumbing pipes supply constant volume and only vary the pressure? Firehose filling a bathtub, river filling a teacup, no problem! – Apr 09 '17 at 19:39

-

@JimDearden well that's because they were designed that way; the question is effectively asking why they were designed that way – user253751 Apr 10 '17 at 01:16

-

There is so much misinformation in some of these answers... – user253751 Apr 10 '17 at 01:19

-

While not an answer, it is sometimes instructive to compare electrical systems to water pipes, with the voltage analogous to the water pressure in the pipes, and current analogous to water flow. An ideal water system would have constant pressure, but how would a water system with constant flow even work? And what happens when you try to close the tap? (At least the analogy seems good to me, with my vaguely remembered 30 year old electro courses.) – Thomas Padron-McCarthy Apr 10 '17 at 06:26

-

You'd find it rather inconvenient if mains power was supplied as a constant-current source. To plug something in the wall socket, you'd first have to remove a strap shorting both live and neutral wires. But between the time you remove the strap and plug your device, everything else in your house would be unpowered. – dim Apr 10 '17 at 13:15

-

@dim You're applying constant-V thinking to a constant-I situation. You FIRST plug in, THEN remove the shunt. Also, "live" and "neutral" are voltage potential terms, not useful in a series connection where the voltage jumps as loads vary their resistance. – Agent_L Apr 10 '17 at 14:28

7 Answers

While there's no reason why you couldn't design electronics with constant current sources, there are a few good reasons why we don't. Batteries and mains power behave more like constant voltage sources than constant current sources, so it's simply more convenient to use what we have. The other reason is that a constant current source is always burning power. To shut a device off, you would have to short-circuit the power supply. Since all practical wires have resistance, you'd constantly be wasting power.
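To put rough numbers on the wasted-power point, here is a minimal Python sketch. The 0.5 Ω wiring resistance, the 10 V and 10 A supply figures, and the function names are all illustrative assumptions, not values from this answer.

```python
def wiring_loss_constant_voltage(load_power_w, supply_v, wire_ohms):
    """I^2*R loss in the feed wiring when the supply holds voltage fixed.

    Approximation: load current is taken as P/V, ignoring the small
    voltage drop across the wire itself.
    """
    current = load_power_w / supply_v
    return current ** 2 * wire_ohms


def wiring_loss_constant_current(supply_a, wire_ohms):
    """I^2*R loss when the supply holds current fixed: independent of load."""
    return supply_a ** 2 * wire_ohms


if __name__ == "__main__":
    WIRE_OHMS = 0.5   # assumed total feed-wire resistance
    SUPPLY_V = 10.0   # assumed constant-voltage rail
    SUPPLY_A = 10.0   # assumed constant-current loop
    for load_w in (0.0, 25.0, 50.0, 100.0):
        cv = wiring_loss_constant_voltage(load_w, SUPPLY_V, WIRE_OHMS)
        cc = wiring_loss_constant_current(SUPPLY_A, WIRE_OHMS)
        print(f"load {load_w:5.1f} W: CV loss {cv:5.2f} W, CC loss {cc:5.2f} W")
```

Under these assumptions the constant-voltage wiring loss goes to zero when the load is off, while the constant-current wiring loss stays at its full-load value the whole time.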


-

For the second part, I guess it all boils down to the fact that (almost) perfect insulators are cheaper and easier to come by than (almost) perfect conductors. – Sredni Vashtar Apr 10 '17 at 03:16

Most power sources are constant voltage, not constant current. If you take the two main sources of electrical energy, which are batteries and rotating generators (regardless of size), the one thing they have in common is that their voltage is theoretically fixed at a certain value and can be controlled. For example, a standard AA dry-cell battery has a voltage of 1.5 V, which it will always produce, more or less (disregarding real-life errors). The internal chemistry of most batteries relates the internal chemical reactions to the output voltage of the battery. Similarly, a generator, for a given magnetic field strength (called excitation) and a given speed, will produce a fixed voltage at its terminals (again, only approximately, due to real-life effects).

In almost any electricity-using device, in almost all cases, a voltage is the cause and the current is the effect. Only when you apply a voltage to a device may current start flowing through it (superconductors notwithstanding). Even constant current devices monitor the current and regulate the voltage as per the load. You never hear of a 3 V flashlight battery monitoring the voltage at its terminals. This is due to basic physics, in which a change in the movement of electrons (i.e. current) is possible when an electric field (i.e. voltage) is applied.


-

"In almost any electricity-using device, in almost all cases, a voltage is the cause, and the current is the effect." - because they were designed that way. The question is asking why they were designed that way. – user253751 Apr 10 '17 at 01:19

-

Voltage and current are two faces of the same coin (even though it is sometimes easier to regard one as the cause and the other as the effect, there is no physical reason for that). Even in a battery - the exemplification of a constant voltage source - once a current is flowing ("due to the voltage"), the only way to maintain the voltage is to route charge back to the battery to re-establish the equilibrium between ions. So, that voltage is actually "due to the current". What is usually overlooked is the fact that each single little charge carries a field that would otherwise change the voltage. – Sredni Vashtar Apr 10 '17 at 03:29

-

@immibis I mentioned that in the first paragraph itself, and so did others. That the reason is that the sources we have are constant voltage, and the current produced depends on the load put on them. Current sources are hard to come by, and hard to make for power levels. So we are forced to work with constant voltage devices. Also as others have said, a constant current requires a superconductor when not in use to shunt that current. – Transistor Overlord Apr 10 '17 at 04:08

-

@SredniVashtar Electrons will not move as a whole through a resistor or any power consuming device for that matter. Instead an electric field (i.e. voltage) is needed to push them through to supply that power. That is what I meant by voltages are the cause and current is the effect – Transistor Overlord Apr 10 '17 at 04:15

-

@TransistorOverlord, point is that voltages do not appear from nowhere. They are created by displacing charges. Two sides of the same coin. Related question: http://electronics.stackexchange.com/questions/201533/does-voltage-cause-current-or-does-current-cause-voltage – Sredni Vashtar Apr 10 '17 at 04:21

-

@TransistorOverlord Indeed batteries are approximate constant-voltage devices and I've never heard of a constant-current battery. But I believe there are configurations of generators that are closer to constant-current than constant-voltage (which makes sense because motors are also inductors). Perhaps there are configurations in which chemical reactions can be made to generate a constant current too. – user253751 Apr 10 '17 at 04:25

-

@TransistorOverlord A solar cell, for example, approximates both a constant current source (up to a certain voltage limit) *and* a constant voltage source (up to a certain current limit). (In practice you use an MPPT circuit to operate it in-between, and by altering the MPPT circuit you could get either a constant-current or constant-voltage output.) – user253751 Apr 10 '17 at 04:47

-

@immibis A constant current MPPT-controlled solar cell, which supplies to real circuits, has to maintain a voltage across it, and has to monitor the current and voltage as well, bringing all the feedback, ICs and other control stuff related to it. A constant voltage doesn't need to do that if the circuit is well-produced and has one operating point. Although with modern technology and its demands and capabilities, constant voltage sources and loads will be a relic of the past except for the simplest uses – Transistor Overlord Apr 10 '17 at 15:25

-

@SredniVashtar I agree with you and the link mentioned. At the deepest level the cause-and-effect is not well-defined. However, I meant my original answer from the POV of the source and load concept, which is: to create a useful current in a load, you require a voltage from the source (i.e. electric field). – Transistor Overlord Apr 10 '17 at 15:28

-

@SredniVashtar Also this voltage produced by a source does not have to be due to charge flow. Back-emf in motors is an example. Also the charge-flow (when thinking about deeply) that does cause a voltage is not a useful flow of current from the load POV. It happens within the source. Only when the voltage is put in the circuit, does useful current flow – Transistor Overlord Apr 10 '17 at 16:05

-

@TransistorOverlord, yes, I think we can agree on the fact that it is colloquially time-saving to say "the voltage causes the current" to mean "when I apply this voltage, a current develops in the circuit". I do it all the time. All I wanted is to point out the fallacy of thinking that physics relationships provide a cause-effect link, because - unless you can put time explicitly in - that is not the case. Also, I haven't given it much thought but it is likely that back emf generators can be explained with charge displacement too, via relativity. (but that's another story...) – Sredni Vashtar Apr 10 '17 at 16:24

-

@SredniVashtar I understand your reasoning, and my error of language. Also, back-emf is ultimately due to magnetic fields, which themselves come from moving charges (which is a current after all). – Transistor Overlord Apr 10 '17 at 16:36

-

@TransistorOverlord 1) A constant voltage MPPT-controlled solar cell also has to do all that. Note that most switching converters can be adapted to either constant-voltage or constant-current by using an appropriate feedback mechanism. (You may note that a buck or boost converter with a fixed duty cycle has a constant-current output if the input is constant-current) – user253751 Apr 10 '17 at 22:52

-

@TransistorOverlord And I think everyone agrees that you need both charge-flow and voltage to supply power. There's no reason to conclude from that that the *voltage* must be constant and the *charge-flow* must vary - it also works for the charge-flow to be constant and the voltage to vary. – user253751 Apr 10 '17 at 22:52

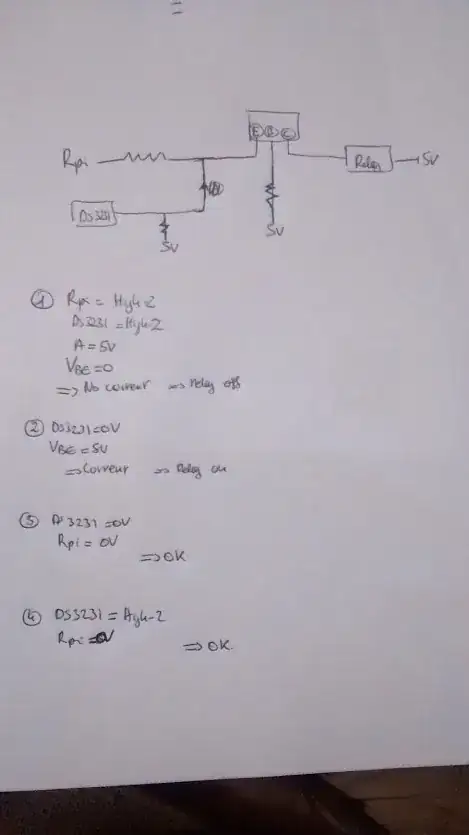

The constant voltage method provides the key to load-line design of amplifiers.

Given the vast need for amplifiers, the ability to provide stable gain for boosting long-distance phone-line signal strengths, and the need for low-distortion operation of vacuum tubes in those phone-line circuits, we may feel hobbled by the successes of Bell Labs. But then Bell provided the transistor. Here is a load-line.


Such systems are in use in LED lighting applications (LEDs natively behave exactly as your question suggests), and they were also very relevant in the last decades of widespread vacuum tube technology (1950s, 60s, 70s): 300mA and 150mA series heater designs (which, however, were usually manually adjusted with resistors instead of using a proper regulated current source) were very common in consumer equipment.


You are thinking about voltage and current as more closely related things than they actually are. Voltage is a "static" potential. Current, on the other hand, is the motion of charges due to the effect of a voltage. For a given load in a circuit they are related by Ohm's law, but they are physically different entities.

A voltage can, and does, exist when there is no current present. However, other than at absolute zero, a current cannot exist without a voltage.

That is, Voltage is the primary object, current is the secondary.

Some circuits ARE designed to be powered by a constant current source. Many transducers like motors and LEDs are driven that way because their energy conversion characteristics are dependent on current, not voltage. However, it is important to note that constant current sources are controlled by voltage effects.

However, most electrical parts have characteristics that are fundamentally affected by voltage, especially capacitors and the conductance of semi-conductors. As such it is a lot easier to implement complex circuitry using a constant voltage supply and allow the current consumed to vary as it may.

Were you to try to build the same sort of circuitry using a constant current power supply, then when one part of the system demanded more power and its resistance dropped, the voltage would need to fall system-wide to maintain the same current output from the regulator. This would change the references for all the remaining circuitry and affect how the capacitances and semiconductors in it behave. If it fell too low, some semiconductors would stop working.

As such, your constant current source would need to be designed to be at least your maximum system load.

Now you have the opposite problem. If that load dropped to a much lower level because you turned something off, your circuit resistance would be much larger, and so your system voltage would have to grow to a much larger value. All your components would need to be designed to deal with those larger voltages. If it goes too high your semiconductors again may suddenly stop working.

Further, if you had an ideal constant current source, as your load approaches a very high resistance, the voltage starts to approach infinity. At that point physics takes over, insulators fail, and everything would self-destruct. In reality, what would actually happen is that the regulator would no longer be able to supply the current, because it does not have the voltage to draw from to drive the output that high.
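The saturation behaviour described here can be sketched in a few lines of Python. This is only an idealised model: the `compliance_v` ceiling, the function name and the example values are assumptions for illustration, and a real regulator would roll off less abruptly.

```python
def source_output(set_current_a, load_ohms, compliance_v):
    """Return (voltage, current) delivered into a resistive load.

    Models a current source that regulates to set_current_a until the
    voltage it would need exceeds compliance_v (its available headroom).
    """
    wanted_v = set_current_a * load_ohms
    if wanted_v <= compliance_v:
        # Enough headroom: the source holds the programmed current.
        return wanted_v, set_current_a
    # Out of headroom: output sits at the ceiling and the current sags.
    return compliance_v, compliance_v / load_ohms


if __name__ == "__main__":
    # Assumed example: 1 A source with 12 V of headroom.
    for r in (1.0, 5.0, 12.0, 100.0, 10_000.0):
        v, i = source_output(1.0, r, 12.0)
        print(f"R = {r:8.1f} ohm -> V = {v:5.2f} V, I = {i:7.4f} A")
```

As the load resistance climbs past the point where the required voltage exceeds the headroom, the "constant current" source in this model simply turns into a constant voltage source at its ceiling.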

The alternative to that would be not to turn things on and off but to redirect the current elsewhere instead, which, as well as being a waste of energy, is also extremely difficult to do while maintaining a constant total current. Further, every little logic gate and amplifier would need to do the same thing.

In a constant voltage system each circuit contained within it is, for the most part, immune to what other parts of the system are doing. If a circuit on the right side of the board suddenly draws more current, and the regulator can supply it, and the board is designed correctly, the circuit on the left side is blissfully unaware of it. (Well, almost.)

The bottom line is, in almost all cases, voltage, being the prime mover, is the thing that makes most sense to regulate and is a lot easier to control.


-

And *you* are thinking about voltage and current as *less* closely related than they actually are. Most circuits can be converted into an equivalent circuit with current and voltage interchanged. – user253751 Apr 10 '17 at 01:17

-

@immibis no I mean, drawing more current does not mean you need more voltage as the OP's original question suggests. – Trevor_G Apr 10 '17 at 01:21

-

To get more power from a constant-current power supply, you don't draw more current (you can't, because the current is constant). Instead you develop more voltage, which increases the total power delivered since the current stays the same. – user253751 Apr 10 '17 at 01:23

-

@Trevor, I believe you are not considering one aspect when you say "A voltage can exist without a current present", i.e. the fact that you must have displaced charge to produce that voltage in the first place. Also, if you are willing to consider perfect insulators (that allow for any value of V to be associated with the particular value I=0), you should also consider perfect conductors (that allow for any value of I to be associated with the particular value V=0). Granted, it is easier to think that a gradient of potential energy is causing a small mass to move in a given direction; but... – Sredni Vashtar Apr 10 '17 at 04:46

-

...but that mass carries a gravitational field that will change the potential profile (the "slope") if you do not carry it back. So, if you want a definite potential to stay the same, you need to bring that mass back up the hill. Otherwise, atom by atom, you'll end up with a new planet. :-) – Sredni Vashtar Apr 10 '17 at 04:48

-

I think all of the problems mentioned in this answer have analogs in constant-voltage systems. Such as the fact that your power supply will fail if it can't supply the needed amount of power. – user253751 Apr 10 '17 at 04:49

-

@SredniVashtar you do not need to have displaced charge to have a voltage. Voltages displace charges... not the other way around. As for perfect conductors, as I said, other than at absolute zero. – Trevor_G Apr 10 '17 at 04:56

-

@immibis instead of criticizing everyone else's answers.... add your own. – Trevor_G Apr 10 '17 at 04:57

-

@Trevor, how do you produce voltage if not by displacing (or having displaced, or using a system where there has been displacement of) charge? You might want to start from the simplest possible charge configuration: one positive and one negative charge. Either they are already displaced or you need some means to displace them if you want to see a field, and a potential. – Sredni Vashtar Apr 10 '17 at 05:07

-

@SredniVashtar and how exactly do you displace the charge? With a voltage. Voltages can and do exist in the vacuum of space with no matter at all. If an atom happens to pass through that field it may ionize, and the electron move to the plus side and the ion to the other, but its presence is not required. – Trevor_G Apr 10 '17 at 05:10

-

@Trevor: "how do you displace a charge? with voltage". Well not necessarily but in an electrical system I'd say that that is my point: voltage causes current that causes voltage that causes current... It's a chicken and egg problem; that's why I say they are two sides of the same medal. As for voltages existing in empty space - you are not looking at the big picture: outside of that limited empty portion of space you are considering there must be the charge that is producing the field associated to your voltage. – Sredni Vashtar Apr 10 '17 at 05:19

-

@SredniVashtar Sorry mate, I'm not going to drag out this argument since you are obviously set in your ways. If you want to believe you need current to make voltages, I am sure you have some other explanation for how generators and transformers and radio antennas work too. But, like I said, I am too old and too tired to argue with you. – Trevor_G Apr 10 '17 at 05:32

-

No, I do not believe I need current to make voltages. You misread me. But you might want to add your answer explaining how voltage cause current here: http://electronics.stackexchange.com/questions/201533/does-voltage-cause-current-or-does-current-cause-voltage – Sredni Vashtar Apr 10 '17 at 05:36

-

@Agent_L I'm sure you have reason for your opinion and you are of course entitled to voice it. However, comments like that provide zero information other than, "I disagree" and as such are uninformative to the reader and pointless. – Trevor_G Apr 10 '17 at 15:23

Let's imagine two outlets on a common house plug, fed by a constant current source somewhere further up the line; let's assume it's a 10A constant current source. When not in use the outlet needs to be shorted so that the voltage is zero (let's assume ideal wires for now). When you plug something into one outlet, you now need to un-short it and allow the voltage to rise. Let's assume you have a 10V 10A -> 100W lightbulb plugged in, and the act of plugging it in moves the short-circuit system out of the outlet. Now what happens when you plug in a second 100W lightbulb (10A, 10V)? The lightbulb now needs to function at 5A 20V, so it needs to raise its own resistance, and so does the other one. But by how much? Well, it depends on the other device that was plugged in. The two basically need to stabilize, which, while possible, is a very complicated system for a device as basic as a lightbulb, not to mention the special socket that would be needed to short closed when all devices are removed. This sort of current-voltage balancing would need to be done for every splitter, leading to some complicated systems that need to balance themselves.
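As a rough check of the numbers, here is a small Python sketch of the two-bulb case on a 10A source. It treats each bulb as a fixed 1 Ω resistor (10V / 10A), which is an assumption made for illustration; real filaments change resistance with temperature, and the function names are hypothetical.

```python
def parallel_on_current_source(source_a, resistances):
    """Per-load (volts, amps, watts) for resistors in parallel on a current source."""
    total_conductance = sum(1.0 / r for r in resistances)
    v = source_a / total_conductance  # all parallel loads share one voltage
    return [(v, v / r, v * v / r) for r in resistances]


def series_on_current_source(source_a, resistances):
    """Per-load (volts, amps, watts) for resistors in series on a current source."""
    # Every series load carries the full source current.
    return [(source_a * r, source_a, source_a ** 2 * r) for r in resistances]


if __name__ == "__main__":
    bulbs = [1.0, 1.0]  # two bulbs, each assumed to be a fixed 1 ohm
    print("parallel:", parallel_on_current_source(10.0, bulbs))
    print("series:  ", series_on_current_source(10.0, bulbs))
```

With fixed-resistance bulbs, the parallel arrangement splits the 10A and each bulb drops to a quarter of its rated power, which is why the bulbs would have to adjust themselves; in series each bulb keeps its full 10A and the supply voltage doubles instead.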

Now, I was ignoring wire resistance, but let's bring it back - you will always be running your wires at their maximum (since wires are rated by amps running through them for the most part) - this is not ideal, as using something 24x7 at its maximum is generally not good for its lifespan, so you will need to increase the size of all the wiring to support this. Beyond the wiring you will also need to be able to quickly and reliably switch at 10A currents - 10A is a fair amount of current, which ends up causing sparks / arcing, thus wearing out switches fairly quickly, which brings me to another point.

Right now most mains power is AC, in that it has a zero-crossing - but you won't be able to instantly change 10A of current into -10A due to wire (and device) inductance. Large appliances can adjust their "power on" time to the frequency of the mains power, such that their switches (or relays / MOSFETs / whatever) turn on when the voltage is at or near 0, in order to prevent surges into, say, a capacitor bank that then feeds the system during the next 0V cycle. While I won't say that this is impossible, it would be quite difficult at best.


-

No, the two lightbulbs would be connected in series, and both would operate at 10A 10V (the supply from the outlet now being 10A 20V). – user253751 Apr 10 '17 at 01:17

-

You can't instantly change 10V of voltage into -10V due to wire (and device) capacitance, but that doesn't mean we don't use AC voltage. – user253751 Apr 10 '17 at 01:18

-

@immibis connecting the devices in series adds its own complexity, meaning each device needs to handle the power of all other possible devices, or they go out like Christmas lights - I suppose there could be a sine wave of current, but with devices already modulating their resistance to get their desired power I am not sure how effective it would be – user2813274 Apr 10 '17 at 02:07

-

Yet you don't see a problem with a sine wave of voltage and devices modulating their resistance to get the desired power? – user253751 Apr 10 '17 at 02:09

-

And with a constant current supply, connecting devices in series is the *only* way to sanely connect multiple devices. Just like with a constant voltage supply you have to connect them in parallel. You've already alluded to this with the fact that powerpoints would have to be shorted when not in use. – user253751 Apr 10 '17 at 02:15

-

@immibis I disagree with connecting devices in series being the only way possible - I don't think either is a particularly great solution, but shorting an unused outlet is possible, and I think it's arguably better than having all the devices in series – user2813274 Apr 10 '17 at 02:49

-

I would like to see a circuit diagram of how you could possibly short unused outlets without having all the outlets (and therefore their attached devices) in series. – user253751 Apr 10 '17 at 02:52

-

No, no, no. You plug in FIRST and THEN un-short the socket. Possibly all in one swift move due to clever construction of the socket. Then it works just like ours. Also, insulators in our installations work at 100% capacity (full voltage and leak current) 24/7/365 - no problems with that! – Agent_L Apr 10 '17 at 14:35

-

@immibis I was making a distinction between the power distribution units, which would need to be connected in series to prevent extra wires everywhere (and also at the max rating), and the devices themselves, where it wouldn't make sense to have a phone charger capable of running enough power through it to also run a microwave – user2813274 Apr 11 '17 at 05:00

-

@user2813274 Power doesn't run through things. Power is the product of current (which is through things) and voltage (which is across things). – user253751 Apr 11 '17 at 22:48

I think most other answers point out the real issue only rather tangentially. It would work but be rather thoroughly impractical for very basic reasons, even ignoring the whole problem of generation and distribution when the end-users would be constant-current loads with compliance of thousands of Volts for home users, and good fractions of a megaVolt for larger users such as shopping malls, etc.

Why can't electronic systems use constant current power source and vary the voltage as power consumption varies?

They can, but the reciprocal relationships between related quantities make it totally impractical for power distribution, even at the level of a house. Also: it solves no practical problems whatsoever. No improvement of any kind. In fact, even with the most careful design, the system would be extremely expensive to implement, even at the scale of a household.

Constant voltage power can supply multiple loads in parallel, like you do with typical household electrical wiring. When a load is turned off, the conductance drops to zero, and no more current flows. The capacity of a supply, at a constant voltage, is given in Amperes.

Constant current power supply would require all loads to be connected in series. When a load is turned off, the resistance drops to zero, and the current bypasses the load. The closed switch maintains a short on the input. The capacity of a supply, at a constant current, is given in Volts.

With constant-current supply, the house wiring would be one big series circuit, and each outlet would need to bypass the contacts with a short whenever the load was not plugged in. All loads would need to conduct the full current consumed by the household, since all the power switches turned into the "off" position would have to have zero (very low) resistance so as not to dissipate power.

Since the capacity is equivalent to maximum voltage, upgrading a house supply capacity would mean getting higher maximum voltage fed to the outlets. Let's say that a practical constant current for household use would be 15A. A rather standard 24kW household supply - US 240V/100A house service - would become a "medium" voltage 1.6kV supply. A 200A service for larger homes would take 3.2kV line voltage.
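The conversion above is just capacity divided by loop current. A minimal Python sketch, assuming the 15A loop current proposed here (the function names are made up for illustration):

```python
def service_capacity_w(volts, amps):
    """Power capacity of a conventional constant-voltage service."""
    return volts * amps


def required_supply_voltage(capacity_w, loop_current_a):
    """Maximum voltage a constant-current supply must reach for the same capacity."""
    return capacity_w / loop_current_a


if __name__ == "__main__":
    LOOP_CURRENT_A = 15.0  # the household loop current assumed in this answer
    for service_a in (100.0, 200.0):
        capacity = service_capacity_w(240.0, service_a)
        volts = required_supply_voltage(capacity, LOOP_CURRENT_A)
        print(f"240V/{service_a:.0f}A service = {capacity / 1000:.0f} kW "
              f"-> {volts / 1000:.1f} kV at {LOOP_CURRENT_A:.0f}A")
```

Doubling the service capacity doubles the voltage the wiring and every outlet must withstand, since the current is the quantity being held fixed.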

Also, the static idle dissipation of a household would be proportional to the number of outlets, and to the square of the current, since all the wiring and outlets would have some resistance, and \$P=I^2R\$.

So, if you'd "turn off" everything in the house, the electricity meter would not stop. To actually stop the electric consumption, you'd have to short the supply side of the electric meter.

The only "cool" aspect of such a supply, ignoring the insane risk of electrocution, would be that short circuits would be completely benign. A hard short circuit in an appliance would be equivalent to turning it off :)

Also, "fuses" in appliances would need to monitor both voltage and current. So, you'd have a regular 25A fuse, and behind it the equivalent of a crowbar circuit that shorts the input if the voltage rises above a threshold. The crowbar would need to use a 25A, 5kV-rated relay to cover the expected "household" power delivery ranges.

Now, the relays would need to have a very small interrupting capacity - and so would the fuses - since the supply is inherently current-limiting. But they'd need to support insane standoff voltages.

The tiny catastrophic protection fuse (or fusible resistor) in a phone charger would instead consist of a $50 fuse, and a $100 relay...

Don't know about you, but I wouldn't be happy to pay for this, even with all the "cool engineering beans" factor.
