Let me simplify and expand my previous comments and connect the dots for those who seem to need it.

Is design still done at a (sub)logic gate level?

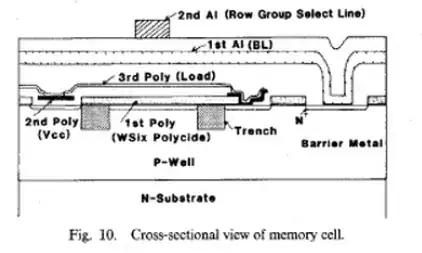

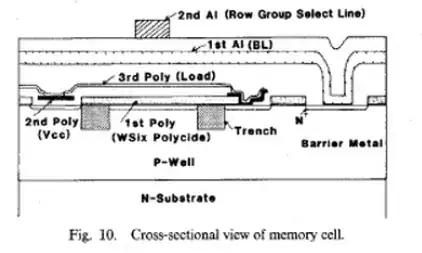

Design is done at many levels, and the sub-logic level is different every time. Each fabrication shrink demands the most brilliant physics, chemistry, and lithographic process experience, because the structure of a transistor changes, and its geometry changes too, to compensate for trade-offs as it shrinks toward atomic dimensions. Each binary step down in size costs on the order of billions of dollars. Achieving 14nm geometry is a massive undertaking in R&D, process control, and management, and that is still an understatement!

For example, the job skills required to do this include:

- "FET, cell, and block-level custom layouts, FUB-level floor plans, abstract view generation, RC extraction, and schematic-to-layout verification and debug using phases of physical design development including parasitic extraction, static timing, wire load models, clock generation, custom polygon editing, auto-place and route algorithms, floor planning, full-chip assembly, packaging, and verification."

- is there not much innovation in that area anymore?

- WRONG

- There is significant, heavily funded innovation in semiconductor physics. Judging by Moore's Law and the number of patents, it will never stop. The savings in power and heat, and thus the quadrupling in capability, pay off each time.

- have we moved on to a higher level of abstraction?

- It never stopped moving.

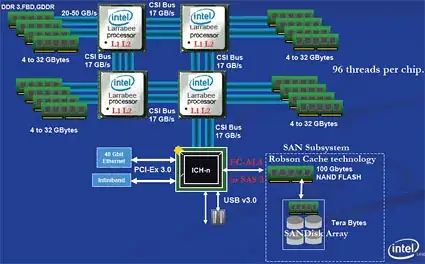

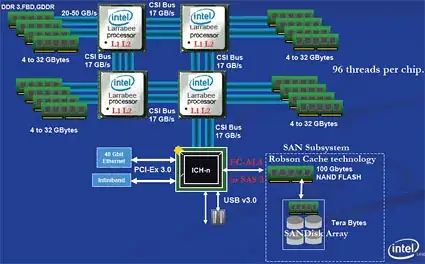

- Demand keeps growing for more cores, for doing more per instruction (as in ARM RISC CPUs), for more powerful embedded µCs or MCUs, and for smarter RAM such as DDR4, which adds error-detection features (write CRC and command/address parity, with full ECC depending on the module) and sectors like flash with priority bits for urgent memory fetches.

- The CPU evolution and architectural changes will never stop.

Let me give you a hint. Go do a job search at Intel, AMD, TI or AD for Engineers and see the job descriptions.

- Where do the designs for the billions of transistors come from?

- They came from adding more 64-bit blocks of hardware. But now, with failures showing up at nanotube scale, thinking has to change from the top-down approach of blocks to the bottom-up approach of nanotubes to make it work.

- Are they mostly auto generated by software? with tongue firmly planted in cheek...

Actually, they are still extracting designs from spaceships at Area 51 and have a way to go... until we are fully nano-nanotube compliant. An engineer goes into the library and says, "nVidia, we would like you to join us over here on this chip," and that design becomes a part, which goes into a macro-block. The layout can be replicated like the ants in Toy Story, but all connections still need explicit control: they must be manually routed and checked, with DRC and auto-routing used for comparison. Yes, automation tools are constantly being upgraded to remove duplication and wasted time.

- is there still a lot of manual optimization?

- Considering one airline saved enough money to pay for your salary by removing just 1 olive from the dinner in First Class, Intel will be looking at ways to remove as many atoms as possible within the time-frame. Any excess capacitance means wasted heat and lost performance and, oops, more noise too, so not so fast...
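To put a rough number on the capacitance point, here is a minimal sketch using the standard first-order CMOS switching-power relation, P = α·C·V²·f. The function name and all the values are hypothetical and chosen only for illustration; real power analysis uses extracted parasitics and also accounts for leakage and short-circuit current.

```python
def dynamic_power_watts(activity, capacitance_f, vdd, freq_hz):
    """First-order CMOS switching power: P = activity * C * Vdd^2 * f."""
    return activity * capacitance_f * vdd ** 2 * freq_hz

# Hypothetical values: 10% switching activity, 1 nF of total switched
# capacitance, 1.0 V supply, 3 GHz clock.
base = dynamic_power_watts(0.1, 1.0e-9, 1.0, 3.0e9)

# The same block after layout optimization trims 10% of the capacitance.
trimmed = dynamic_power_watts(0.1, 0.9e-9, 1.0, 3.0e9)

print(f"baseline: {base:.3f} W, after trimming: {trimmed:.3f} W")
```

In this model power scales linearly with capacitance, so every bit of capacitance removed is a proportional saving in switching power at the same voltage and clock.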

But really, CPUs grow like Tokyo: it didn't happen overnight, but tens of millions live there now, with steady improvement. I didn't learn how to design at university, but by reading and trying to understand how things work, I was able to get up to speed in industry pretty fast. I got 10 years of experience in my first 5 years: aerospace, nuclear instrument design, SCADA design, process monitoring, antenna design, automated weather station design and debug, OCXOs, PLLs, VLF receivers, 2-way remote control of Black Brant rockets... and that was just my first job. I had no idea what I could do.

Don't worry about billions of transistors or be afraid of what to learn or how much you need to know. Just follow your passion and read trade journals in between your sleep, then you won't look so green on the job and it doesn't feel like work anymore.

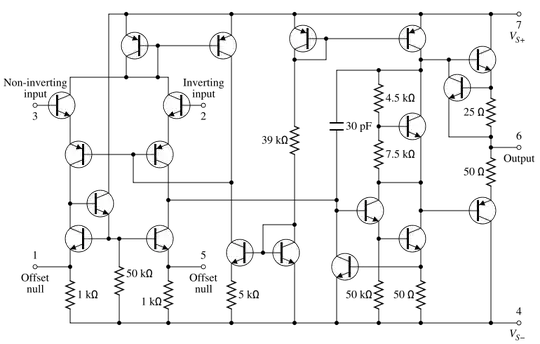

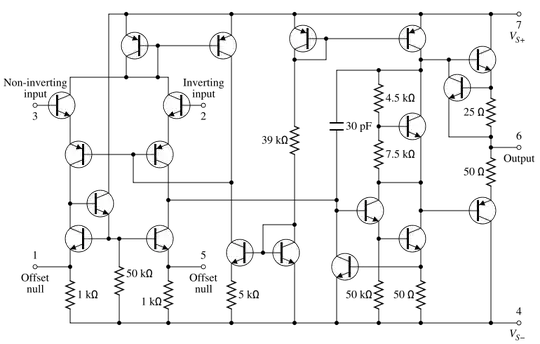

I remember having to design a 741-like op amp as part of an exam one time, in 20 minutes. I have never really used it, but I can tell the good designs from the great ones. But then it only had 20 transistors.

But designing a CPU must start with a spec, namely:

Why design this CPU, and which measurable benchmarks must it achieve? For example:

- Million instructions per second (MIPS), which matters more than CPU clock. For example:

- Intel's Itanium chip is based on what they call an Explicitly Parallel Instruction Computing (EPIC) design.

- Transmeta patented CPU designs based on very long instruction word (VLIW) code-morphing microprocessors. They sued Intel in 2006, closed shop, and settled for ~$200 million in 2007.

- Performance per watt (PPW), which matters when power costs exceed the cost of the chip (as for servers).

- Floating-point operations per second (FLOPS), for math performance.

There are many more metrics, but never base a CPU's design quality on its GHz speed (see the megahertz myth).
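A quick sketch of why clock speed alone misleads: instruction throughput is roughly clock rate times average instructions per cycle (IPC). The two CPUs below are made up, and the `Cpu` class and its fields are hypothetical names used only for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Cpu:
    name: str
    clock_hz: float  # clock frequency in Hz
    ipc: float       # average instructions retired per cycle (assumed)
    watts: float     # power draw under load (assumed)

    def mips(self) -> float:
        """Million instructions per second = clock * IPC / 1e6."""
        return self.clock_hz * self.ipc / 1e6

    def mips_per_watt(self) -> float:
        """A simple performance-per-watt figure."""
        return self.mips() / self.watts


# Hypothetical parts: A has the higher clock, B does more work per cycle.
fast_clock = Cpu("A (hypothetical)", clock_hz=4.0e9, ipc=1.0, watts=100.0)
wide_core = Cpu("B (hypothetical)", clock_hz=3.0e9, ipc=2.0, watts=60.0)

for cpu in (fast_clock, wide_core):
    print(f"{cpu.name}: {cpu.mips():.0f} MIPS, "
          f"{cpu.mips_per_watt():.0f} MIPS/W")
```

With these assumed numbers, the 3 GHz part beats the 4 GHz part on both throughput and performance per watt, which is the whole point of not judging by GHz.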

So what tools du jour are needed to design CPUs? The list would not fit on this page. It runs from atomic-level physics design, to dynamic-mesh physical EM/RF design for EMC, to the role of Front End Design Verification Test Engineer, where the skills required include:

- Front-end RTL Simulation

- Knowledge of IA (Intel Architecture), computer architecture, and system-level design

- Logic verification and logic simulation using either VHDL or Verilog.

- Object-oriented programming and various CPU, bus/interconnect, coherency protocols.
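To give a flavor of what logic verification means, here is a toy sketch in Python rather than VHDL or Verilog: a gate-level model of a 1-bit full adder is checked exhaustively against a behavioral reference. All function names are hypothetical, and real front-end verification works on RTL in a simulator with far larger state spaces, so treat this only as an illustration of the compare-against-a-reference idea.

```python
from itertools import product


def full_adder_gates(a, b, cin):
    """Gate-level model: two XORs, two ANDs, one OR."""
    p = a ^ b                    # propagate
    s = p ^ cin                  # sum bit
    cout = (a & b) | (p & cin)   # carry out
    return s, cout


def full_adder_reference(a, b, cin):
    """Behavioral reference: plain integer addition."""
    total = a + b + cin
    return total & 1, total >> 1


def verify():
    """Simulate every input combination and collect any mismatches."""
    mismatches = []
    for a, b, cin in product((0, 1), repeat=3):
        if full_adder_gates(a, b, cin) != full_adder_reference(a, b, cin):
            mismatches.append((a, b, cin))
    return mismatches


print("mismatches:", verify())
```

Exhaustive simulation works here because there are only 8 input combinations; at CPU scale that is impossible, which is why verification engineers lean on constrained-random tests, coverage metrics, and formal tools.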