You'll have to make some assumptions. A typical one is that the user's dragging speed doesn't change very abruptly in the middle of a barcode.

Since barcodes contain regions of fixed, known width, it's easy to calculate the dragging speed from them. Your algorithm simply waits for the first strong "bright-dark-bright" transition and bases its timing on that.
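A minimal sketch of that first step, assuming a fixed sampling rate and a plain brightness threshold you pick yourself (the names `run_lengths` and `samples_per_module` are hypothetical). It turns raw samples into alternating bright/dark runs, then takes the first dark run framed by bright runs as the timing reference:

```python
from itertools import groupby


def run_lengths(samples, threshold):
    """Turn raw brightness samples into a list of (is_dark, length) runs.

    Assumption: a sample below `threshold` means "dark" (bar),
    anything else means "bright" (space).
    """
    dark = (s < threshold for s in samples)
    return [(is_dark, sum(1 for _ in group)) for is_dark, group in groupby(dark)]


def samples_per_module(runs):
    """Length in samples of the first dark run with bright runs on both
    sides (the first bright-dark-bright), or None if there is none."""
    for i in range(1, len(runs) - 1):
        if runs[i][0] and not runs[i - 1][0] and not runs[i + 1][0]:
            return runs[i][1]
    return None


runs = run_lengths([200, 200, 200, 40, 40, 210, 210, 35, 35, 220, 220, 220], 128)
print(runs)                      # [(False, 3), (True, 2), (False, 2), (True, 2), (False, 3)]
print(samples_per_module(runs))  # 2
```

A fixed threshold does not check that a transition is "strong"; in practice you would probably add hysteresis or a minimum-contrast check before trusting the first dark run.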

For example, consider the ubiquitous EAN-13 barcodes (really, read the excellent Wikipedia article, from which the picture below was taken):

They begin and end with two fixed-width stripes. So you first watch for "bright-dark-bright", measure the duration of this "dark", and then expect the following "bright" and the next "dark" to be as long as the first dark. If you find that, i.e., an equidistant "dark-bright-dark", you've

- found the beginning of an EAN barcode, and

- found out the "timing" of a thin stripe at the same time.
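The check described above could look roughly like this. It is a sketch, not a complete decoder: the input is assumed to be a list of alternating `(is_dark, length_in_samples)` runs, and the function name `find_start_guard`, the 50% tolerance `tol` and the lenient `min_quiet` quiet-zone width are all hypothetical choices you would tune:

```python
def find_start_guard(runs, tol=0.5, min_quiet=5.0):
    """Look for a bright quiet zone followed by an equidistant
    dark-bright-dark (the EAN start guard).

    runs: alternating (is_dark, length_in_samples) tuples.
    tol: allowed relative deviation of the space and second bar from
         the first bar (assumed value).
    min_quiet: minimum quiet-zone width in modules (assumed, lenient).
    Returns (index_of_first_guard_bar, samples_per_module) or None.
    """
    for i in range(1, len(runs) - 2):
        quiet, bar1, space, bar2 = runs[i - 1], runs[i], runs[i + 1], runs[i + 2]
        if quiet[0] or not bar1[0]:
            continue  # need a bright run followed by a dark run
        width = bar1[1]
        equidistant = all(abs(r[1] - width) <= tol * width for r in (space, bar2))
        if equidistant and quiet[1] >= min_quiet * width:
            # Average the three runs for a slightly less noisy module estimate.
            module = (bar1[1] + space[1] + bar2[1]) / 3
            return i, module
    return None


print(find_start_guard([(False, 30), (True, 3), (False, 3), (True, 3), (False, 6)]))
# (1, 3.0)
```

Averaging the three guard runs instead of using only the first "dark" is a small, optional refinement; it reduces the effect of a single mis-sampled edge.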

Based on the length of that "dark", you can then scale your interpretation of all the following bar and space widths. Even slow microcontrollers can do that; these barcodes were designed for 1970s electronics, after all, and became popular at a time when they were trivial to decode with 1980s electronics.
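One way to apply that timing, sketched under an extra assumption: instead of relying only on the start-guard width, this hypothetical `digit_widths` helper re-normalizes every digit by its own length. Each EAN digit spans exactly 7 modules, split over two bars and two spaces of 1 to 4 modules each, so dividing a digit's total length by 7 gives a fresh module estimate and tolerates slow drift in dragging speed:

```python
def digit_widths(four_runs):
    """Convert the four run lengths (in samples) of one EAN digit into
    module counts, e.g. (3, 2, 1, 1).

    The module width is re-estimated per digit as total_length / 7,
    which absorbs slow changes in dragging speed.
    Returns None if the runs cannot form a valid 7-module digit.
    """
    if len(four_runs) != 4:
        return None
    total = sum(four_runs)
    if total <= 0:
        return None
    module = total / 7
    widths = tuple(round(r / module) for r in four_runs)
    if sum(widths) != 7 or not all(1 <= w <= 4 for w in widths):
        return None
    return widths


print(digit_widths([9, 6, 3, 3]))   # (3, 2, 1, 1)
print(digit_widths([10, 7, 3, 4]))  # (3, 2, 1, 1), despite uneven sampling
```

`(3, 2, 1, 1)` is the left-hand "L" pattern `0001101` of digit 0; mapping width tuples to digits (and handling the parity patterns) would be the next step.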

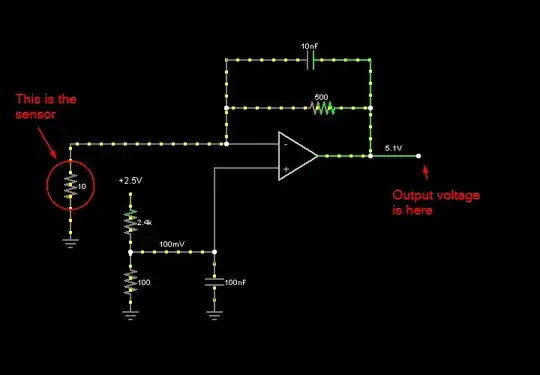

A note on using an ADC: yes, if your microcontroller has an ADC, feel free to use it. You don't have to, though. Classically, barcode readers are implemented using analog high-pass filters that only detect the edges between bright and dark (and then emit a 1 whenever there's such an edge). You'd have to adjust your algorithms, but by reducing your analog input to a binary input, you potentially save a lot of CPU power.
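If you do sample with an ADC, a crude software stand-in for that edge-only front end is a first difference (acting as the high-pass filter) followed by a threshold. This is a sketch with assumed names (`detect_edges`, `widths_from_edges`) and an assumed `threshold` you would tune; it also shows that, once you only have edge positions, the bar and space widths are simply the distances between consecutive edges:

```python
def detect_edges(samples, threshold):
    """Return (index, rising) for each brightness edge.

    rising=True means dark -> bright (leaving a bar),
    rising=False means bright -> dark (entering a bar).
    Consecutive steps of the same polarity are merged, so a slow
    edge spread over several samples is reported only once.
    """
    edges = []
    last_rising = None
    for i in range(1, len(samples)):
        diff = samples[i] - samples[i - 1]  # first difference = crude high-pass
        if abs(diff) < threshold:
            continue
        rising = diff > 0
        if rising != last_rising:
            edges.append((i, rising))
            last_rising = rising
    return edges


def widths_from_edges(edges):
    """Distances between consecutive edges are the bar/space widths."""
    return [b[0] - a[0] for a, b in zip(edges, edges[1:])]


edges = detect_edges([200, 200, 40, 40, 40, 210, 210, 35, 35, 220], 80)
print(edges)                     # [(2, False), (5, True), (7, False), (9, True)]
print(widths_from_edges(edges))  # [3, 2, 2]
```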