Are built-in ADC converters in MCUs as reliable and accurate as their standalone counterparts?

Why shouldn't they be? You know, every device you buy has its specs, and its manufacturer will strive to meet them, because if they don't, they risk their company's good name or worse.

… comment by ${some Person on the Internet}

I ${ranting about something} as far as I ${totally unverifiable problem}

Well. Yes. There's someone on the internet voicing a negative opinion without backing it with facts. That's not really new[citation needed] – but anyway, I think it's safe to simply ignore this.

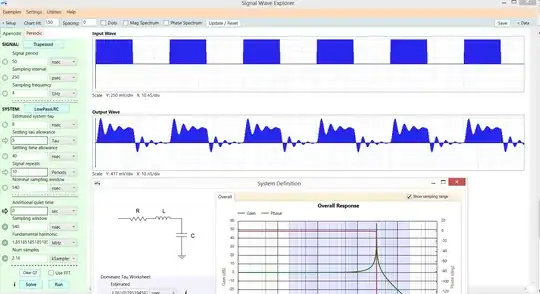

Look at whatever you want to digitize, and make sure you filter it properly. How high is your noise after that? You don't need an ADC that does better than the dynamic range between that average noise amplitude (\$\sigma\$ for noise that follows a zero-mean Gaussian distribution) and the maximum signal amplitude. Figure out that dynamic range, and from it the Effective Number of Bits (ENOB) you need.

Often, your requirements are even lower – the bathroom scale example is very good. To be honest, a bathroom scale more accurate than 200 g is senseless: you simply can't tell whether a person drank a glass of water or gained weight. Let the total range be 1 kg – 200 kg; then you need a resolution of only \$\frac 1{1000}\$ of the maximum value. In other words, a 10 bit converter will happily do. People still tend to buy the devices that say "\$\pm 10\text{ g}\$", so you'd need another factor of 20 to make marketing happy – that's about 4.3 bits (\$\log_2 20\$). So, with an ADC that has 14 bits, whose ideal steps over 200 kg are about 12 g wide (a quantization error of roughly \$\pm 6\text{ g}\$), you'd be better than any customer needs, and marketing wouldn't even have to lie¹.
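To make the bathroom scale arithmetic concrete, here is a minimal Python sketch. The function names are made up for illustration, and it assumes an ideal converter whose steps are spread evenly over the full range; a real ADC's ENOB will be lower than its raw bit count. The `dynamic_range_bits` helper is only a rough rule of thumb (base-2 log of the amplitude ratio).

```python
import math


def bits_for_step(full_scale: float, step: float) -> int:
    """Smallest bit count whose ideal step size over full_scale is <= step."""
    return math.ceil(math.log2(full_scale / step))


def lsb_size(full_scale: float, bits: int) -> float:
    """Width of one ideal quantization step; the error is at most half of it."""
    return full_scale / 2 ** bits


def dynamic_range_bits(max_amplitude: float, noise_sigma: float) -> float:
    """Rough dynamic range in bits: log2 of max signal amplitude over noise sigma."""
    return math.log2(max_amplitude / noise_sigma)


FULL_SCALE_G = 200_000  # 200 kg in grams, from the bathroom scale example

print(bits_for_step(FULL_SCALE_G, 200))      # 200 g wide steps -> 10
print(bits_for_step(FULL_SCALE_G, 20))       # +-10 g, i.e. 20 g wide steps -> 14
print(round(lsb_size(FULL_SCALE_G, 14), 1))  # ideal 14-bit step in grams -> 12.2
```

Treating "\$\pm 10\text{ g}\$" as a 20 g wide step is an interpretation on my part; if you insist on 10 g wide steps instead, the same function gives 15 bits.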

Note your signal's bandwidth, and derive a minimum sampling rate from that (typically \$f_\text{sample} > 2\cdot f_\text{bandwidth}\$).
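As a quick illustration of why that factor of two matters, the sketch below computes the minimum rate and shows where a tone ends up if you sample too slowly. The function names and the `margin` parameter are my own additions for illustration, not anything from a datasheet.

```python
def min_sample_rate(bandwidth_hz: float, margin: float = 1.0) -> float:
    """Sampling rate bound 2 * bandwidth, scaled by an optional safety margin."""
    return 2.0 * bandwidth_hz * margin


def alias_frequency(f_hz: float, fs_hz: float) -> float:
    """Frequency at which a tone at f_hz shows up after sampling at fs_hz."""
    # Fold the tone back to the nearest multiple of the sample rate.
    return abs(f_hz - round(f_hz / fs_hz) * fs_hz)


print(min_sample_rate(10.0))         # 10 Hz of bandwidth needs more than 20 Hz
print(alias_frequency(30.0, 100.0))  # below fs/2: stays at 30 Hz
print(alias_frequency(60.0, 100.0))  # above fs/2: folds down to 40 Hz
```

In practice you'd pick a `margin` above 1 so that your anti-aliasing filter has room to roll off before half the sample rate.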

Your ADC's datasheet, whether it's for a built-in or a separate one, will clearly state what it can do. It will state an ENOB or noise power, and it will state the raw number of bits you get (which is larger than the ENOB because of the ADC's own noise).
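If the datasheet gives you a SINAD figure in dB rather than an ENOB, the usual conversion is ENOB = (SINAD − 1.76 dB) / 6.02 dB, which assumes a full-scale sine wave input. A minimal sketch (function names are mine, and the numbers are example values, not taken from any particular part):

```python
def enob_from_sinad(sinad_db: float) -> float:
    """Effective number of bits for a given SINAD, full-scale sine assumed."""
    return (sinad_db - 1.76) / 6.02


def sinad_from_enob(enob: float) -> float:
    """Inverse relation: SINAD in dB that corresponds to a given ENOB."""
    return 6.02 * enob + 1.76


# Example: what SINAD would you look for to get 10 effective bits?
needed_db = sinad_from_enob(10)
print(f"{needed_db:.2f} dB")
print(f"{enob_from_sinad(needed_db):.2f} bits")
```

Compare the result against the ENOB you worked out from your own signal's dynamic range, not against the raw bit count on the front page.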

Don't let trolls on the internet troll you. The market has a clear indication for you: There are so many engineers who wanted ADCs integrated in their microcontroller that basically every microcontroller family has at least one variant with a built-in ADC. That might mean having those is actually useful.

Sure, they're not designed to give you 14 effective bits at 200 MS/s, or 20 bits with a bias current of 10 pA at 1 MS/s – but that's not your use case, is it?

¹ they usually don't give a damn about that, though, for hard-to-verify senseless features in consumer products. Buy a consumer stereo and read the claimed sound output power. Use at full volume until batteries are empty. Patent that technology of glorious energy creation as perpetuum mobile.

Marketing is basically the opposite of that random person you quoted: they go out and make positive remarks based not on any facts, but on a random aspect of the system they don't really understand.