There is no cure for metastability. Period. End of.

Metastability is a problem in clocked systems when the output of a latch whose input timing constraints have been violated gets read twice, either in two different places or at two different times. With a good signal, the two reads will always agree. With metastability, there's a chance they will differ.

For instance, take the interrupt synchroniser of a microprocessor (uP), which answers 'has an interrupt occurred?'. The external interrupt signal is asynchronous, so sooner or later it will violate the flip flop's setup time (tsu) or hold time (th). The resulting 'there was an interrupt this cycle' signal is read in two places. If the program counter logic thinks there was an interrupt, and the interrupt controller thinks there wasn't, crash!

While there is no cure for metastability, it can be controlled. A metastable flip flop settles exponentially with a characteristic time constant, which in a well designed system is a small fraction of the clock period. Take the worst case, where a timing violation is certain to leave the output metastable. After 1 time constant, there is a 1/e chance of it still being metastable, about 37%. After 10 time constants (e^-10), it's only about 45 in a million. That may not sound like much, but if every cycle of a 10 MHz clock produced a violation, that's about 450 failing events per second, far too many. After 20 time constants, it's about 2 in a billion, or one failure every 50 seconds or so. I wouldn't want to use a PC that crashed that often.
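The arithmetic above can be checked in a few lines. This is a minimal sketch assuming the simple exponential settling model described here (chance of still being metastable after n time constants is e^-n) and the worst case of one timing violation per clock cycle; the function names are hypothetical.

```python
import math


def p_still_metastable(n_tau: float) -> float:
    """Chance a metastable output has not resolved after n_tau time constants,
    assuming simple exponential settling."""
    return math.exp(-n_tau)


def failures_per_second(n_tau: float, events_per_second: float) -> float:
    """Expected failures per second, assuming every event is a timing violation."""
    return events_per_second * p_still_metastable(n_tau)


clock_hz = 10e6  # 10 MHz clock, worst case: a violation on every cycle
for n in (1, 10, 20):
    rate = failures_per_second(n, clock_hz)
    print(f"{n:2d} time constants: p = {p_still_metastable(n):.2e}, "
          f"{rate:.3g} failures/s, one failure every {1 / rate:.3g} s")
```

At 10 time constants this gives roughly 454 failures per second, and at 20 roughly one failure every 49 seconds.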

Now, we can get as many time constants as we like by slowing the clock down, but that costs system speed. Alternatively, we can buy special 'metastable hard' flip flops, which have much shorter time constants than regular flip flops.

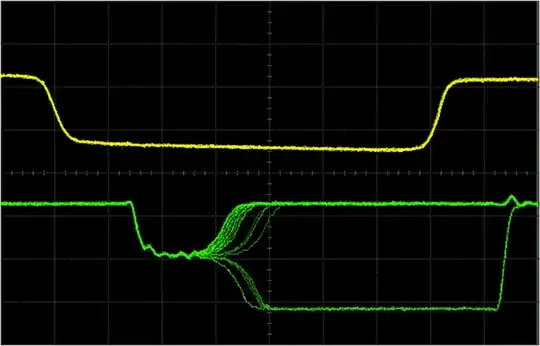

The real win comes when we use two or more flip flops in a row. Each extra stage gives a metastable output another full clock period to settle before anything else reads it. This adds latency to the signal, but it allows us to keep the clock rate up. Do I really care if the external interrupt signal takes two or three clock cycles to cause the program jump? Not at all.
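A behavioural sketch of such a chain helps show where the extra latency comes from. It is only a model under stated assumptions: a simulation like this has clean 0s and 1s, so it cannot show metastability itself, and the `Synchroniser` class and `latency` helper are hypothetical names, not any library's API.

```python
class Synchroniser:
    """Hypothetical behavioural model of an N-stage flip-flop synchroniser.

    On every clock edge the asynchronous input is sampled into the first stage
    and each later stage copies the stage before it. Only the last stage is
    read by the rest of the system, so every earlier stage gets a full clock
    period to settle.
    """

    def __init__(self, stages: int = 2) -> None:
        if stages < 1:
            raise ValueError("a synchroniser needs at least one stage")
        self.regs = [0] * stages

    def clock(self, async_in: int) -> int:
        # Shift in the new sample; the oldest value drops off the end.
        self.regs = [async_in] + self.regs[:-1]
        # The rest of the system only ever sees the final stage.
        return self.regs[-1]


def latency(stages: int) -> int:
    """Clock edges until an input that goes high appears at the output."""
    sync = Synchroniser(stages)
    edge = 0
    while True:
        edge += 1
        if sync.clock(1) == 1:
            return edge


for n in (1, 2, 3):
    print(f"{n} stage(s): interrupt seen after {latency(n)} clock edge(s)")
```

Each stage adds exactly one clock of delay, which is the price paid for the extra settling time.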

So let's say we have a flip flop with 15 time constants per clock cycle. That gives about a 0.3 in a million (e^-15) chance of failure for each metastable event. If I get 1000 interrupts per second from my disk drive, and each one violates the timing, that's a failure roughly every hour, a couple of dozen crashes a day. Still not very good. Now I cascade two flip flops, and get 30 time constants. The chance of failure is now about 1e-13 per event, or one failure every 300 years or so. This system is unlikely to fail in my lifetime. But wait, say I'm the manufacturer, and have 100 million of these systems in the field. Across the whole fleet, that's a failure somewhere about every two minutes. Now I cascade three flip flops, and the chance per event drops to about 3e-20. That's roughly one failure per decade across all 100 million systems put together.
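The figures for one system and for the whole fleet can be worked out with a short script. This sketch assumes each interrupt is a timing violation, uses e^-n exactly for the chance of still being metastable, and assumes each extra stage adds one full clock period (15 time constants) of settling; `mtbf_seconds` and the constant names are hypothetical.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600


def mtbf_seconds(n_tau: float, events_per_second: float, systems: int = 1) -> float:
    """Mean time between failures across `systems` identical units.

    Assumes every event leaves the first flip flop metastable, and that the
    chance it is still unresolved when read is e**(-n_tau).
    """
    failures_per_second = systems * events_per_second * math.exp(-n_tau)
    return 1.0 / failures_per_second


TAU_PER_STAGE = 15            # time constants per clock cycle, from the example
INTERRUPTS_PER_SECOND = 1000  # interrupt rate from the disk drive
FLEET_SIZE = 100_000_000      # systems in the field

for stages in (1, 2, 3):
    # Each extra stage is assumed to add one full clock period of settling.
    n_tau = stages * TAU_PER_STAGE
    one_unit = mtbf_seconds(n_tau, INTERRUPTS_PER_SECOND)
    whole_fleet = mtbf_seconds(n_tau, INTERRUPTS_PER_SECOND, FLEET_SIZE)
    print(f"{stages} stage(s): p = {math.exp(-n_tau):.1e} per event, "
          f"one system: {one_unit / SECONDS_PER_YEAR:.3g} years, "
          f"fleet: {whole_fleet:.3g} s ({whole_fleet / SECONDS_PER_YEAR:.3g} years)")
```

The model ignores clock-to-output and setup times, which in a real chain eat into each stage's settling time, so treat the results as optimistic order-of-magnitude estimates.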

Note the failure rate is still finite, it's not zero. It is however very very unlikely.

Picture taken from W. J. Dally,
