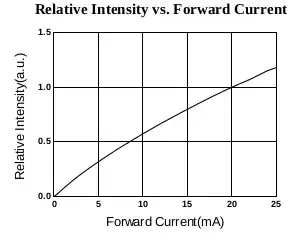

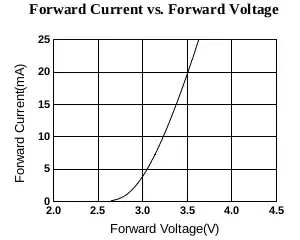

The brightness of an LED depends primarily on the current flowing through it.

A conventional incandescent bulb is effectively a resistor: it follows Ohm's law, V = I * R. If you double the voltage the current doubles and the power used goes up by a factor of 4 (not quite true, since there are some temperature-related effects, but close enough for now).
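If you want to check the factor of 4 yourself, here is a minimal Python sketch. It treats the bulb as an ideal fixed resistor (so it ignores the temperature effects mentioned above), and the 12 ohm, 6 V and 12 V figures are made-up values purely for illustration.

```python
def resistor_power(voltage, resistance):
    """Return (current in amps, power in watts) for an ideal resistor."""
    current = voltage / resistance   # Ohm's law: I = V / R
    power = voltage * current        # P = V * I
    return current, power

# Hypothetical bulb modelled as a fixed 12 ohm resistor.
i1, p1 = resistor_power(6.0, 12.0)
i2, p2 = resistor_power(12.0, 12.0)

print(i2 / i1)  # ratio of currents after doubling the voltage -> 2.0
print(p2 / p1)  # ratio of powers after doubling the voltage -> 4.0
```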

An LED, on the other hand, is a diode, and like most diodes it has a relatively fixed forward bias voltage. Below that voltage no current flows; above it the diode itself does not limit the current, but the voltage left for the rest of the circuit is reduced by the bias voltage. (This is a massive simplification but is good enough for most rough calculations.)

The exact voltage depends on the materials used, and so on the colour: typically ~1.8-2V for red, yellow or green, and ~3V for blue, white or "true green".

This voltage drop will increase with current, but only by 0.1-0.2V, so you can normally ignore this effect.

As you indicated in your question, LEDs are typically connected with a resistor in series to limit the current. Why?

Think of the LED as a fixed voltage drop: it will use up a fixed amount of voltage no matter the current. So if you connect a 2V LED directly to a 3V source there will be 1V left to be dropped over the rest of the circuit. The rest of the circuit in this case is the internal resistance of the power supply and wires. These resistances are typically fairly low (so low you normally ignore them), and so a large current will flow.

Assuming the resistances are in the region of 0.1 ohms, this would give a current of I = V/R = (3-2) / 0.1 = 10 amps.

The power dissipated in the LED would be P = I * V = 10 * 2 = 20 watts.

This would very rapidly heat the LED to the point where it is destroyed. The real world is a little more complex since the LED isn't the perfect zero resistance fixed voltage drop assumed but the end result is the same either way.

If we add a series resistor of 100 ohms in addition to the internal resistances then the current is reduced to about (3-2) / 100 = 10mA and the LED glows nicely.
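The two cases above (no resistor and a 100 ohm resistor) can be reproduced with a short Python sketch. It uses the same simplified model as the text, an LED that is a fixed 2V drop with no resistance of its own, and the 0.1 ohm internal resistance is the assumed figure from above. The function name `led_current` is just something I made up for this example.

```python
def led_current(v_supply, v_forward, r_series):
    """Current in amps through an idealised LED (fixed voltage drop)
    in series with a total resistance r_series in ohms."""
    if v_supply <= v_forward:
        return 0.0  # not enough voltage to forward bias the LED
    return (v_supply - v_forward) / r_series

R_INTERNAL = 0.1  # ohms, assumed supply and wiring resistance
V_SUPPLY = 3.0    # volts
V_LED = 2.0       # volts, idealised forward voltage

direct = led_current(V_SUPPLY, V_LED, R_INTERNAL)
limited = led_current(V_SUPPLY, V_LED, R_INTERNAL + 100.0)

print(f"no resistor: {direct:.1f} A, LED power {direct * V_LED:.1f} W")
print(f"100 ohm:     {limited * 1000:.2f} mA, LED power {limited * V_LED * 1000:.1f} mW")
```

A real LED would not reach the full 10 A because its own resistance and the rising forward voltage get in the way, but it would still be far beyond what the part can survive.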

Changing the resistor value changes the brightness. Most small LEDs are limited to about 20mA max and aren't visible much below 1mA. Going much over 10mA generally makes little visible difference (this is more due to the way eyes work than the way LEDs work).

You can also change the brightness by switching the LED on and off very quickly. This is simpler for digital systems to do and is generally more efficient for a given perceived brightness (again more due to eyes than LEDs), and it lets you change the brightness with only a single fixed resistor in the hardware.

If you are planning on using a variable resistor to set the brightness then it's good practice to also include a small fixed resistor in series, so that with the variable resistor at 0 the current is still limited to 20mA.
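As a rough sketch of the two sizing calculations this implies, the Python below works out the smallest fixed resistor that keeps the current at or under a chosen maximum, and the average current when the LED is switched on and off. It reuses the 3V supply and 2V LED from the earlier example, the function names are hypothetical, and the 25% duty cycle is an arbitrary illustration value. Note that the average current is only a crude stand-in for perceived brightness, since the eye's response is not linear.

```python
def min_fixed_resistor(v_supply, v_forward, i_max):
    """Smallest series resistance (ohms) that keeps the LED current
    at or below i_max (amps), using the fixed-voltage-drop model."""
    return (v_supply - v_forward) / i_max

def pwm_average_current(i_on, duty_cycle):
    """Average current (amps) when the LED carries i_on for a
    fraction duty_cycle of the time and is off for the rest."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return i_on * duty_cycle

# Fixed resistor to put in series with a variable resistor (20 mA limit).
r_min = min_fixed_resistor(3.0, 2.0, 0.020)
print(f"minimum fixed resistor: {r_min:.0f} ohms")

# LED run at 10 mA while on, switched with a 25% duty cycle.
i_avg = pwm_average_current(0.010, 0.25)
print(f"average current: {i_avg * 1000:.1f} mA")
```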

So what if we add two LEDs in series?

Each LED needs 2V to turn on. Two LEDs means 4V. With a 3V source we don't have sufficient voltage to forward bias the diodes and so they will block all current flow. The LEDs will be off.

If you increase the voltage and set the current limiting resistor correctly then they will both turn on. Since brightness depends upon the current through the LED and they will both have the same current they will be the same brightness (for the same type of LED).
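The series case can be sketched the same way. The helper below (a made-up name, using the same fixed-voltage-drop model) returns `None` when the supply can't forward bias the whole chain, and otherwise the resistor needed for a target current. The 5V supply and 10mA target in the second call are assumed values for illustration only.

```python
def series_resistor(v_supply, v_forward, n_leds, i_target):
    """Resistor (ohms) for n_leds identical LEDs in series at i_target amps.
    Returns None if the supply cannot forward bias the whole chain."""
    headroom = v_supply - n_leds * v_forward  # voltage left for the resistor
    if headroom <= 0:
        return None  # LEDs stay off, no resistor value will help
    return headroom / i_target

# Two 2V LEDs on a 3V supply: 4V needed, so they stay off.
print(series_resistor(3.0, 2.0, 2, 0.010))

# Same LEDs on a hypothetical 5V supply at 10 mA: 1V across the resistor.
r = series_resistor(5.0, 2.0, 2, 0.010)
print(f"{r:.0f} ohms")
```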

What if we add two LEDs in parallel?

If we add two in parallel each with their own resistor then they are effectively separate circuits. Assuming the power supply is sufficient each will act as if it's the only one.

If they share the resistor then things get more interesting. In theory this would work fine: you'd need to halve the resistor value to give the same per-LED current, but other than that you'd expect it to work.

Unfortunately no two LEDs are identical. They will all have very slightly different bias voltages, which means that more current will flow through one than the other (it would be all the current through one if it wasn't for the small increase in voltage as current increases that we normally ignore).

This means that two LEDs in parallel with a single resistor will almost never be the same brightness.
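To put some rough numbers on this, here is a small Python sketch. It extends the fixed-voltage-drop model by giving each LED a small internal resistance, which stands in for the 0.1-0.2V rise with current mentioned earlier. The 10 ohm internal resistance, the 50mV mismatch and the 50 ohm shared resistor are all assumed values chosen for illustration, not figures for any particular part. It finds the voltage at the shared node by bisection, looking for the point where the current through the resistor equals the total current through the LEDs.

```python
def shared_resistor_currents(v_supply, r_shared, v_forwards, r_dynamic):
    """Currents (amps) through parallel LEDs that share one resistor.
    Each LED is modelled as a fixed drop plus a series resistance r_dynamic."""
    def led_i(v_node, vf):
        return max(0.0, (v_node - vf) / r_dynamic)

    def imbalance(v_node):
        i_in = (v_supply - v_node) / r_shared              # through the resistor
        i_out = sum(led_i(v_node, vf) for vf in v_forwards)  # through the LEDs
        return i_in - i_out

    lo, hi = 0.0, v_supply
    for _ in range(60):  # bisection on the shared node voltage
        mid = (lo + hi) / 2
        if imbalance(mid) > 0:
            lo = mid
        else:
            hi = mid
    v_node = (lo + hi) / 2
    return [led_i(v_node, vf) for vf in v_forwards]

# Two "2V" LEDs that actually differ by 50 mV, sharing a 50 ohm resistor.
currents = shared_resistor_currents(3.0, 50.0, [2.00, 2.05], 10.0)
for vf, i in zip([2.00, 2.05], currents):
    print(f"Vf = {vf:.2f} V: {i * 1000:.1f} mA")
```

With these assumed values the LED with the lower forward voltage carries nearly twice the current of the other, even though the two differ by only 50mV.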

Generally anything which needs to drive a group of LEDs (e.g. a backlight) will use a long series chain of LEDs and boost the voltage up as high as needed (within reason) so that they are all the same brightness.