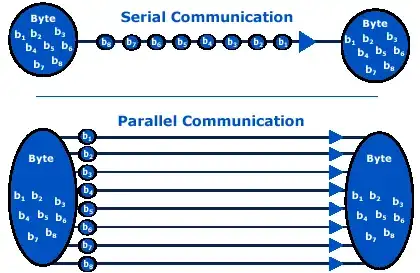

The problem is keeping the signals on a parallel bus clean and in sync at the target.

With serial, "all you have to do" is extract the clock and, from that, the data. You can help by guaranteeing lots of transitions: 8b/10b, bi-phase, or Manchester encoding; there are lots of schemes (and yes, this means you are adding even more bits). And yes, absolutely, that one serial interface has to run more than N times faster than an N-wide parallel bus in order to be "faster", but we reached that point a long time ago.
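To make the "lots of transitions" point concrete, here is a minimal sketch of Manchester encoding in Python. It assumes the IEEE 802.3 convention (a 1 is low then high, a 0 is high then low); the function names are made up for illustration, and a real receiver recovers the clock from the edges in hardware rather than being handed perfectly aligned half-bit samples.

```python
def manchester_encode(bits):
    """Turn each data bit into two half-bit line levels.

    Assumed convention (IEEE 802.3): 1 -> (0, 1), 0 -> (1, 0).
    Every bit cell therefore has a transition in the middle.
    """
    levels = []
    for bit in bits:
        levels.extend((0, 1) if bit else (1, 0))
    return levels


def manchester_decode(levels):
    """Recover data bits from half-bit levels that are already aligned."""
    if len(levels) % 2:
        raise ValueError("expected an even number of half-bit levels")
    bits = []
    for i in range(0, len(levels), 2):
        pair = (levels[i], levels[i + 1])
        if pair == (0, 1):
            bits.append(1)
        elif pair == (1, 0):
            bits.append(0)
        else:
            raise ValueError("no mid-bit transition, not valid Manchester")
    return bits


def longest_run(levels):
    """Length of the longest stretch with no transition on the line."""
    longest = run = 0
    previous = None
    for level in levels:
        run = run + 1 if level == previous else 1
        previous = level
        longest = max(longest, run)
    return longest


data = [0] * 8 + [1] * 8            # worst case for a raw line: long flat runs
encoded = manchester_encode(data)
print(longest_run(data), longest_run(encoded), len(encoded))
```

The raw data sits flat for eight bit times in a row, while the encoded line never goes more than two half-bit periods without an edge. The price is twice as many symbols on the wire, which is the "adding even more bits" trade-off.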

Interestingly, we now have parallel serial buses: your PCIe, your Ethernet (okay, 40GigE is 4 × 10 gig lanes; 100GigE is 10 × 10 gig or, the new thing coming, 4 × 25 gig lanes). Each of these lanes is an independent serial interface taking advantage of the "serial speed", but the overall data stream is split up and load balanced across the separate serial interfaces, then recombined as needed on the other side.

Obviously one serial interface can go no faster than one bit lane of a parallel bus, all other things held constant. The catch is that as speeds go up, keeping the bits parallel through the routing, cables, connectors, etc. and meeting setup and hold times at the far end becomes the problem. Without that constraint, a single serial interface can easily run N times faster. Then there is the real estate, from pins to PC board to connectors. Recently there has been a movement away from stepping up from 10 gig Ethernet to 40 gig using 4 × 10 gig lanes, and toward 25 gig per lane: one 25 gig pair, or two 25 gig pairs to get 50 gig, rather than four 10 gig pairs. That costs half-ish the copper or fiber in the cables and elsewhere. That marginal cost in server farms was enough to abandon the traditional path for industry standards and go off, whip one up on the side, and roll it out in a hurry.
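The lane arithmetic behind that is short enough to write down. A rough sketch, using only the nominal rates mentioned above and ignoring encoding overhead (`lanes_needed` is a made-up helper name):

```python
import math


def lanes_needed(target_gbps: int, lane_gbps: int) -> int:
    """Smallest number of serial lanes whose nominal rates add up to the target."""
    return math.ceil(target_gbps / lane_gbps)


for target, lane in [(40, 10), (50, 25), (100, 10), (100, 25)]:
    print(f"{target} gig over {lane} gig lanes: {lanes_needed(target, lane)} lanes")
```

Two 25 gig lanes carry more than four 10 gig lanes do, with half the lanes to route, connect, and cable.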

PCIe likewise started with one or more serial lanes with the data load balanced across them. It still uses serial lanes with the data load balanced and rejoined; each generation increases the speed per serial lane rather than adding more and more serial pairs.

SATA is the serial version of PATA, which is a direct descendant of IDE. It is not that serial was inherently faster, just that it is far easier to sync up with and extract a serial stream than it is to keep N parallel bits in sync from one end to the other. And it remains easier to transmit and extract even if the serial stream runs 16, 32, 64, or more times faster than each bit lane of the parallel bus.