I need to drive a 12 m strip of APA102 RGB LEDs (144 per meter). I use a Raspberry Pi for this job.

The driver IC in each LED (APA102) takes an SPI signal (clock and data) and hands it on to the next LED in the chain, and so on down the strip. The datasheet is here, with the circuit used on my strips shown on the last page: https://cdn-shop.adafruit.com/datasheets/APA102.pdf

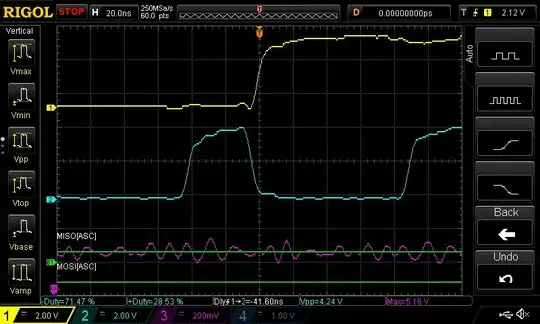
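For reference, here is a minimal sketch of the byte stream I understand the strip to expect, based on my reading of the datasheet: a 32-bit start frame of zeros, one 32-bit frame per LED (three `1` bits, 5-bit global brightness, then blue, green, red), and an end frame. The function name `apa102_frame` is made up for illustration, and the end-frame length of at least one extra clock bit per two LEDs is an assumption taken from common practice on long strips, not from the datasheet (which only specifies 32 bits).

```python
def apa102_frame(pixels, brightness=31):
    """Build the raw SPI bytes for a list of (r, g, b) tuples (hypothetical helper)."""
    if not 0 <= brightness <= 31:
        raise ValueError("brightness must be in 0..31")

    n = len(pixels)
    buf = bytearray(4)  # start frame: 32 zero bits

    for r, g, b in pixels:
        # LED frame: 0b111 + 5-bit global brightness, then blue, green, red
        buf += bytes((0xE0 | brightness, b, g, r))

    # End frame. Assumption: each LED delays the data by half a clock,
    # so send at least n/2 extra bits, and never fewer than the
    # datasheet's 32 bits (4 bytes).
    end_bytes = max(4, (n + 15) // 16)
    buf += b"\xff" * end_bytes
    return bytes(buf)


if __name__ == "__main__":
    frame = apa102_frame([(255, 0, 0)] * 1728)  # 12 m at 144 LEDs/m, all red
    print(len(frame), "bytes per frame")
```

The resulting bytes would be written to the SPI device in one transfer; how that is done depends on the SPI library in use.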

I need to drive this LED strip at a rather high clock of 8 MHz or more to get my desired frame rate. This works fine until I hit about 10 m (roughly 1440 LEDs). Beyond that, the LEDs start to show wrong colors, flicker, or do nothing at all. The setup starts working again if I lower the clock to 4 MHz, but that is too slow for my application.
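To show why the clock matters so much here, this is a rough estimate of the best-case frame rate as a function of SPI clock. It assumes 32 bits per LED plus a 32-bit start frame and an end frame of about one bit per two LEDs (the end-frame length is my assumption), and it ignores any overhead in the SPI driver or gaps between transfers. The function names are just for illustration.

```python
def frame_time_s(num_leds, clock_hz):
    """Best-case time to clock out one full frame, in seconds."""
    start_bits = 32
    led_bits = 32 * num_leds
    # Assumed end frame: at least n/2 bits, rounded up to whole bytes,
    # and never less than 32 bits.
    end_bits = max(32, 8 * ((num_leds + 15) // 16))
    return (start_bits + led_bits + end_bits) / clock_hz


def max_fps(num_leds, clock_hz):
    """Upper bound on the refresh rate for a given strip length and clock."""
    return 1.0 / frame_time_s(num_leds, clock_hz)


if __name__ == "__main__":
    leds = 12 * 144  # 1728 LEDs on the 12 m strip
    for clock in (4_000_000, 8_000_000):
        print(f"{clock / 1e6:.0f} MHz: {max_fps(leds, clock):.1f} fps max")
```

Under these assumptions the 12 m strip tops out at roughly 142 frames per second at 8 MHz and roughly 71 at 4 MHz, before any software overhead.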

I need to use 5V PSUs with an insane amount of current (30A for this one strip; a larger setup with strips in parallel will use multiple 120A PSUs), and they show some horrible noise (200-300mV) on the 5V output.

So I was wondering if there is anything I can do to improve my signal integrity?

My guess is that the power supply noise slowly degrades the signal, since the signal is "refreshed" every time it passes through an LED. At least that's what I gather from the schematic in the datasheet. Attaching a 220uF cap to the power input on the LED strip reduces the noise, but it doesn't seem to have any effect on the signal degradation.

I understand that this is a bit of a ridiculous setup, but unfortunately I don't have any other choice than to use this type of LED.

Edit: I feed power into the strip every meter, connecting directly to the PSU with 14AWG wire. So it's probably not a power distribution issue.

This is how clock (blue) and data (yellow) look on the scope after just ~60 LEDs.

This is after ~1500 LEDs. Purple is the 5V line.