Transformers - these would prefer both a lower frequency and a higher frequency. A lower frequency would reduce eddy current losses in the laminations. A higher frequency means fewer primary turns are needed, because the primary's inductive reactance rises with frequency (so the required magnetizing reactance is reached with fewer turns), and fewer turns means less copper loss under load.

So is 50 Hz the Goldilocks value for power transformers? No, because other parts of the world use 60 Hz. Is 100 Hz too high? Probably; it is on the verge of being too high for power transformer cost effectiveness.
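To put rough numbers on that trade-off, here is a minimal Python sketch using the standard transformer EMF equation, \$V_{rms} = 4.44\,f\,N\,A\,B_{peak}\$, and the classical thin-lamination eddy current loss per unit volume, \$P_e = \pi^2 t^2 f^2 B_{peak}^2 / (6\rho)\$. The voltage, core area, peak flux density, lamination thickness and steel resistivity are all hypothetical values picked for illustration, and the classical formula ignores hysteresis and excess losses.

```python
import math

# Hypothetical design values (assumptions for illustration only)
V_RMS = 230.0        # primary voltage, V
CORE_AREA = 0.01     # core cross-section, m^2
B_PEAK = 1.5         # peak flux density, T
LAM_T = 0.35e-3      # lamination thickness, m
RHO_STEEL = 4.7e-7   # silicon-steel resistivity, ohm*m

def primary_turns(f):
    # Transformer EMF equation: V_rms = sqrt(2)*pi * f * N * A * B_peak (sqrt(2)*pi is about 4.44)
    return V_RMS / (math.sqrt(2) * math.pi * f * CORE_AREA * B_PEAK)

def eddy_loss_density(f):
    # Classical eddy current loss for thin laminations, W/m^3 (scales with f^2 at fixed B_peak)
    return (math.pi ** 2 * LAM_T ** 2 * f ** 2 * B_PEAK ** 2) / (6 * RHO_STEEL)

for f in (50, 60, 100):
    print(f"{f:>3} Hz: N = {primary_turns(f):5.1f} turns, "
          f"eddy loss = {eddy_loss_density(f) / 1e3:5.2f} kW/m^3")
```

At a fixed peak flux density the required turns halve when the frequency doubles, while the eddy current loss per unit volume quadruples; that is the tension described above.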

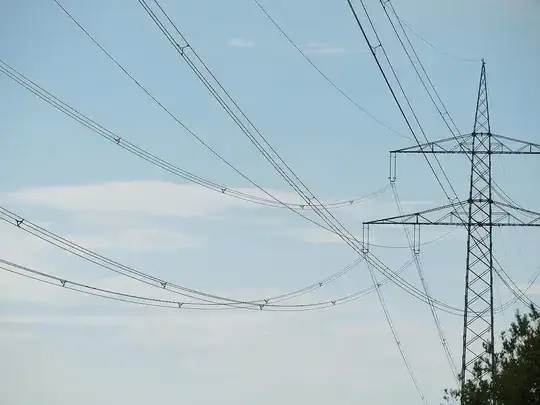

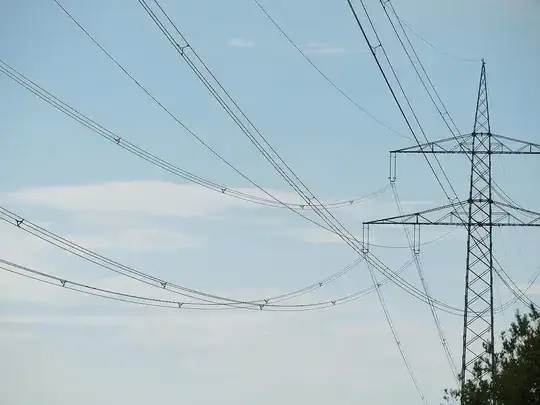

What about voltages induced by those overhead cables? The higher the frequency, the greater the voltage induced in nearby objects, and this in turn can cause problems. Faraday basically said this: -

So for a given current in an overhead conductor (for instance), the rate of change of flux increases in proportion to frequency, and so does the emf induced in objects placed close by. Would this cause a problem at (say) 100 Hz? Maybe it would; AC motors might suffer increased losses in their ironwork due to induced emfs causing eddy current flow. I guess this is pretty much the same mechanism as eddy currents in transformer laminations.
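As a rough illustration of the frequency scaling, this sketch assumes a single long straight conductor (ignoring the partial cancellation from the other phases and any ground-return current) and a hypothetical rectangular loop, such as a 100 m fence run, lying parallel to it between 10 m and 12 m away. The flux linking the loop is \$\Phi = \frac{\mu_0 I \ell}{2\pi}\ln(d_2/d_1)\$, and Faraday's law gives an rms emf of \$2\pi f \Phi_{rms}\$. The 1000 A current and the loop geometry are assumptions.

```python
import math

MU0_OVER_2PI = 2e-7  # mu_0 / (2*pi), H/m

def loop_flux_per_amp(length, d_near, d_far):
    # Flux (Wb per amp) through a rectangular loop parallel to a long straight
    # conductor, spanning distances d_near..d_far from it
    return MU0_OVER_2PI * length * math.log(d_far / d_near)

def induced_emf_rms(f, i_rms, length, d_near, d_far):
    # Faraday: e = -dPhi/dt; for sinusoidal flux the rms emf is 2*pi*f*Phi_rms
    return 2 * math.pi * f * i_rms * loop_flux_per_amp(length, d_near, d_far)

# Hypothetical case: 1000 A rms, 100 m loop spanning 10 m to 12 m from the conductor
for f in (50, 60, 100):
    print(f"{f:>3} Hz: {induced_emf_rms(f, 1000, 100, 10, 12):.2f} V rms")
```

For the same current, doubling the frequency doubles the induced emf.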

Skin effect in conductors carrying high current is also of significant importance. Wiki shows a picture of overhead cable like this: -

Note the bundles of conductors formed into a triangle. Wiki says: -

> The 3-wire bundles in this power transmission installation act as a single conductor. A single wire using the same amount of metal per kilometer would have higher losses due to the skin effect.
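A crude way to see why the bundle helps: if current is confined to a shell of thickness \$\delta\$ at the surface (area about \$2\pi r\delta\$), then splitting a fixed amount of metal into \$n\$ equal sub-conductors multiplies the total surface, and so divides the AC resistance, by \$\sqrt n\$. This sketch ignores proximity effect and stranding, and the aluminium resistivity, skin depth and total area are assumed values.

```python
import math

def thin_skin_r_ac(rho, radius, delta):
    # Per-metre AC resistance of one round conductor, assuming current flows
    # only in an outer shell of thickness delta (requires delta << radius)
    return rho / (2 * math.pi * radius * delta)

def bundle_ratio(n, total_area, rho, delta):
    # Same total metal area: one conductor vs n equal sub-conductors in parallel
    r_single = math.sqrt(total_area / math.pi)
    r_sub = math.sqrt(total_area / (n * math.pi))
    single = thin_skin_r_ac(rho, r_single, delta)
    bundle = thin_skin_r_ac(rho, r_sub, delta) / n
    return bundle / single

# Hypothetical figures: 1500 mm^2 of aluminium, skin depth about 11.6 mm at 50 Hz
print(f"bundle / single R_ac = {bundle_ratio(3, 1.5e-3, 2.65e-8, 0.0116):.3f}")
```

This gives \$1/\sqrt3 \approx 0.577\$ for a 3-wire bundle, but that is a best case: at 50 Hz the skin depth in aluminium (about 12 mm) is comparable to real sub-conductor radii, so the true ratio lies somewhere between this figure and 1.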

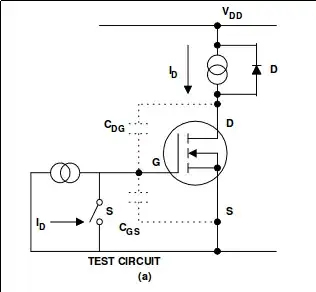

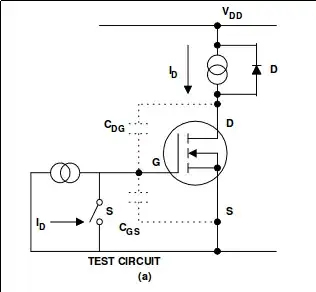

Increasing the frequency increases the skin effect, and this reduces the power delivery capability of feed cables, because AC current tends to flow in the outer part of a conductor: -
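The thickness of that outer layer is the skin depth, \$\delta = \sqrt{\rho / (\pi f \mu)}\$. A short sketch for copper, assuming a resistivity of 1.68e-8 Ω·m and a relative permeability of 1 (overhead lines are more often aluminium, which has a somewhat larger skin depth):

```python
import math

MU0 = 4e-7 * math.pi   # permeability of free space, H/m
RHO_CU = 1.68e-8       # assumed copper resistivity at about 20 C, ohm*m

def skin_depth(f, rho=RHO_CU, mu_r=1.0):
    # Depth (m) at which current density falls to 1/e of its surface value
    return math.sqrt(rho / (math.pi * f * mu_r * MU0))

for f in (50, 60, 100):
    print(f"{f:>3} Hz: skin depth = {skin_depth(f) * 1e3:.2f} mm")
```

At 50 Hz this gives roughly 9.2 mm, shrinking to about 6.5 mm at 100 Hz.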

How much will higher frequencies affect copper losses: -

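So, if the frequency doubles, the AC resistance increases by a factor of \$\sqrt2\$ (assuming the skin depth is small compared with the conductor radius, so that AC resistance scales with \$\sqrt f\$).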
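The \$\sqrt2\$ follows from the AC resistance being roughly inversely proportional to skin depth once current is confined to a thin outer shell of area about \$2\pi r\delta\$, with \$\delta \propto 1/\sqrt f\$. A minimal sketch of that thin-skin approximation, using a hypothetical 50 mm radius copper conductor so that \$\delta \ll r\$ roughly holds:

```python
import math

MU0 = 4e-7 * math.pi   # permeability of free space, H/m
RHO_CU = 1.68e-8       # assumed copper resistivity, ohm*m

def skin_depth(f, rho=RHO_CU):
    return math.sqrt(rho / (math.pi * f * MU0))

def r_ac_per_km(f, radius, rho=RHO_CU):
    # Thin-skin approximation: current flows in an outer shell of area
    # about 2*pi*r*delta; only valid when delta << radius
    return 1000 * rho / (2 * math.pi * radius * skin_depth(f, rho))

r = 0.05  # hypothetical conductor radius, m
ratio = r_ac_per_km(100, r) / r_ac_per_km(50, r)
print(f"R_ac(100 Hz) / R_ac(50 Hz) = {ratio:.4f}")
```

The ratio does not depend on the radius chosen; the absolute resistance does, and the approximation becomes poor once \$\delta\$ is comparable to \$r\$.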