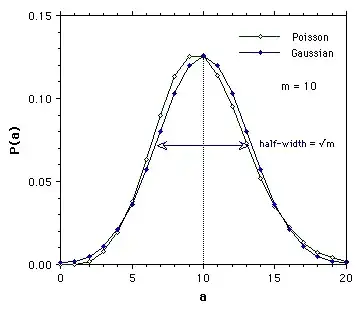

The measurement of peak-to-peak voltage on a noise source is poorly defined. In theory, the noise voltage has no upper bound, though large peaks become rapidly less likely as they get bigger (for Gaussian noise the probability falls off roughly as exp(-v²/2σ²), which is even faster than exponential).
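To put numbers on "rapidly less likely", here is a minimal sketch assuming zero-mean Gaussian noise with rms value σ. It uses the standard result P(|v| > kσ) = erfc(k/√2); the function name `prob_exceeds` is just an illustrative choice.

```python
import math

def prob_exceeds(k: float) -> float:
    """Probability that zero-mean Gaussian noise has |v| > k * sigma.

    Uses P(|v| > k*sigma) = erfc(k / sqrt(2)).
    """
    return math.erfc(k / math.sqrt(2.0))

# Each extra sigma of peak height is much rarer than the one before,
# but the probability never reaches zero, so there is no true "peak".
for k in (1, 2, 3, 4, 5, 6):
    print(f"|v| > {k} sigma: {prob_exceeds(k):.3e}")
```

The ratio between successive rows keeps shrinking, which is why a quoted peak-to-peak figure depends so heavily on how long you watch.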

That's why we use rms instead: it indicates the noise power, which is a much more consistent quantity. However, rms is not an easy measurement to make without the right equipment.
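A quick simulation shows the difference in consistency. This is a sketch assuming unit-rms Gaussian noise generated with Python's `random.gauss`; the record lengths are arbitrary. The rms estimate settles near 1 as the record gets longer, while the peak-to-peak reading just keeps growing.

```python
import math
import random

def rms(samples):
    """Root-mean-square value: square root of the mean power."""
    return math.sqrt(sum(v * v for v in samples) / len(samples))

def peak_to_peak(samples):
    """Difference between the largest and smallest sample seen."""
    return max(samples) - min(samples)

rng = random.Random(1)  # fixed seed so runs are repeatable
for n in (100, 1_000, 100_000):
    x = [rng.gauss(0.0, 1.0) for _ in range(n)]  # unit-rms Gaussian noise
    print(f"n={n:>7}: rms={rms(x):.3f}  peak-to-peak={peak_to_peak(x):.2f}")
```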

A measurement that was popular in the bad old days was 'tangential sensitivity' (google it). This was measured with an oscilloscope and a square-wave generator. The amplitude of the square wave was adjusted until a single horizontal line could be drawn through the bottom of the noise on the +ve half-cycles and the top of the noise on the -ve half-cycles. Obviously this was subject to the characteristics of the 'scope, the chosen sweep rate, and the judgement of the operator.

Any attempt to measure the peak directly with a peak detector suffers from the same problem: the response of the peak detector influences the measurement, and fast peaks will not be caught by a slow detector.
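The effect of detector speed can be illustrated with a crude, hypothetical model: an ideal diode charging a hold capacitor, where `attack` is the fraction of the gap closed per sample while charging and `decay` is the fraction of the held value lost per sample otherwise. The names and coefficient values are made up for illustration and don't describe any particular circuit.

```python
import random

def peak_detect(samples, attack, decay):
    """Hypothetical first-order peak detector model (not a specific circuit).

    attack: fraction of (input - held) closed per sample while charging (1.0 = instant)
    decay:  fraction of the held value lost per sample while not charging
    """
    held = 0.0
    trace = []
    for v in samples:
        if v > held:
            held += attack * (v - held)  # charging through a finite source resistance
        else:
            held -= decay * held         # slow bleed through the load
        trace.append(held)
    return trace

rng = random.Random(2)  # fixed seed so runs are repeatable
noise = [rng.gauss(0.0, 1.0) for _ in range(100_000)]  # unit-rms Gaussian noise
fast = peak_detect(noise, attack=1.0, decay=1e-4)
slow = peak_detect(noise, attack=0.01, decay=1e-4)
print(f"true max {max(noise):.2f}, fast detector {max(fast):.2f}, "
      f"slow detector {max(slow):.2f}")
```

The fast detector reproduces the largest sample, while the slow one never charges far enough on a brief spike, so it reads well below the true peak of the same noise.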

A mathematically better measure comes from the CCDF (complementary cumulative distribution function), though this also needs the right equipment to measure. It records the fraction of time the signal spends above any given level. It is much used now that digital communication is the norm, as it's a good predictor of how many bits will be lost to noise. For instance, for Gaussian noise, IIRC, the noise signal spends in the ballpark of 1e-6 of its time above +11 dB(rms), and 1% of its time above +5 dB(rms) (don't use those figures without checking!)
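One way to check such figures is to compute the CCDF directly. This is a sketch under an assumption about which convention is meant: it covers both complex (I/Q) Gaussian noise, whose instantaneous power relative to the mean has CCDF exp(-x), and a real noise voltage where |v| is compared with its rms. All function names are illustrative. Under the complex convention the level exceeded 1e-6 of the time comes out near +11.4 dB, agreeing with the figure above, but the 1% level comes out near +6.6 dB rather than +5 dB; for a real voltage the two levels are about +13.8 dB and +8.2 dB.

```python
import math
import random

def level_db_complex(p):
    """dB above mean power exceeded a fraction p of the time by complex
    (I/Q) Gaussian noise, whose power CCDF is exp(-x)."""
    return 10 * math.log10(-math.log(p))

def ccdf_real(level_db):
    """Fraction of time a real Gaussian voltage has |v| above level_db re rms."""
    k = 10 ** (level_db / 20)  # level as a multiple of the rms voltage
    return math.erfc(k / math.sqrt(2))

def level_db_real(p):
    """Invert ccdf_real by bisection (it decreases monotonically with level)."""
    lo, hi = -40.0, 30.0
    for _ in range(100):
        mid = (lo + hi) / 2
        if ccdf_real(mid) > p:
            lo = mid  # level exceeded too often, so the answer is higher
        else:
            hi = mid
    return (lo + hi) / 2

def empirical_ccdf(power, level_db):
    """Fraction of samples whose power exceeds the mean power by level_db."""
    threshold = sum(power) / len(power) * 10 ** (level_db / 10)
    return sum(1 for p in power if p > threshold) / len(power)

rng = random.Random(3)  # fixed seed so runs are repeatable
iq_power = [rng.gauss(0, 1) ** 2 + rng.gauss(0, 1) ** 2 for _ in range(200_000)]

for p in (1e-2, 1e-6):
    print(f"exceeded {p:g} of the time: complex {level_db_complex(p):.1f} dB, "
          f"real |v| {level_db_real(p):.1f} dB")
print(f"simulated I/Q noise above 6.6 dB: {empirical_ccdf(iq_power, 6.6):.4f}")
```

The simulated line is a sanity check that a measured CCDF of I/Q noise lands on the analytic curve.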