Fourier Transform:

$$ X(j 2 \pi f) = \mathscr{F}\Big\{x(t)\Big\} \triangleq \int\limits_{-\infty}^{+\infty} x(t) \ e^{-j 2 \pi f t} \ \text{d}t $$

Inverse Fourier Transform:

$$ x(t) = \mathscr{F}^{-1}\Big\{X(j 2 \pi f)\Big\} = \int\limits_{-\infty}^{+\infty} X(j 2 \pi f) \ e^{j 2 \pi f t} \ \text{d}f $$

Rectangular pulse function:

$$ \operatorname{rect}(u) \triangleq \begin{cases}

0 & \mbox{if } |u| > \frac{1}{2} \\

1 & \mbox{if } |u| < \frac{1}{2} \\

\end{cases} $$

"Sinc" function ("sinus cardinalis"):

$$ \operatorname{sinc}(v) \triangleq \begin{cases}

1 & \mbox{if } v = 0 \\

\frac{\sin(\pi v)}{\pi v} & \mbox{if } v \ne 0 \\

\end{cases} $$

Define the sampling frequency \$ f_\text{s} \triangleq \frac{1}{T} \$ as the reciprocal of the sampling period \$T\$.

Note that:

$$ \mathscr{F}\Big\{\operatorname{rect}\left( \tfrac{t}{T} \right) \Big\} = T \ \operatorname{sinc}(fT) = \frac{1}{f_\text{s}} \ \operatorname{sinc}\left( \frac{f}{f_\text{s}} \right)$$

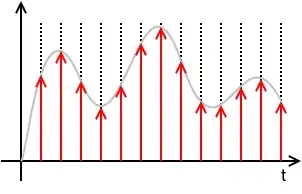
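As a sanity check on this transform pair, here is a minimal numerical sketch that evaluates the Fourier integral of \$\operatorname{rect}\left(\tfrac{t}{T}\right)\$ with a trapezoidal rule and compares it with \$T \operatorname{sinc}(fT)\$. The function names, the value of \$T\$, and the test frequencies are illustrative assumptions, not part of the derivation. NumPy's `np.sinc` uses the same normalized definition as above.

```python
import numpy as np

def rect_transform_numeric(f, T, num=20001):
    """Approximate the Fourier integral of rect(t/T) at frequency f (trapezoidal rule)."""
    t = np.linspace(-T / 2, T / 2, num)   # rect(t/T) is 1 on this interval, 0 elsewhere
    dt = t[1] - t[0]
    integrand = np.exp(-2j * np.pi * f * t)
    return dt * (integrand.sum() - 0.5 * (integrand[0] + integrand[-1]))

def rect_transform_closed_form(f, T):
    """T * sinc(fT); np.sinc(v) is the normalized sinc, sin(pi v)/(pi v)."""
    return T * np.sinc(f * T)

T = 1e-3  # assumed sampling period of 1 ms, so f_s = 1 kHz
for f in (0.0, 300.0, 1000.0, 1500.0):
    numeric = rect_transform_numeric(f, T)
    closed = rect_transform_closed_form(f, T)
    print(f, numeric.real, closed, abs(numeric - closed))
```

The two columns should agree to within the quadrature error, and the value at \$f = f_\text{s}\$ should be essentially zero, which is the first null of the sinc.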

Dirac comb (a.k.a. "sampling function" a.k.a. "Sha function"):

$$ \operatorname{III}_T(t) \triangleq \sum\limits_{n=-\infty}^{+\infty} \delta(t - nT) $$

The Dirac comb is periodic with period \$T\$, so it can be written as a Fourier series in which every coefficient equals \$\frac{1}{T}\$:

$$ \operatorname{III}_T(t) = \sum\limits_{k=-\infty}^{+\infty} \frac{1}{T} e^{j 2 \pi k f_\text{s} t} $$

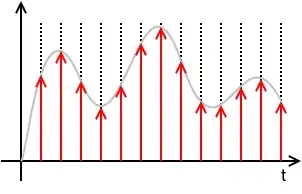

Sampled continuous-time signal:

$$ \begin{align}

x_\text{s}(t) & = x(t) \cdot \left( T \cdot \operatorname{III}_T(t) \right) \\

& = x(t) \cdot \left( T \cdot \sum\limits_{n=-\infty}^{+\infty} \delta(t - nT) \right) \\

& = T \ \sum\limits_{n=-\infty}^{+\infty} x(t) \ \delta(t - nT) \\

& = T \ \sum\limits_{n=-\infty}^{+\infty} x(nT) \ \delta(t - nT) \\

& = T \ \sum\limits_{n=-\infty}^{+\infty} x[n] \ \delta(t - nT) \\

\end{align} $$

where \$ x[n] \triangleq x(nT) \$.

This means that \$x_\text{s}(t)\$ is defined solely by the samples \$x[n]\$ and the sampling period \$T\$ and totally loses any information about the values of \$x(t)\$ at times in between sampling instants. \$x[n]\$ is a discrete sequence of numbers and is a sorta DSP shorthand notation for \$x_n\$. While it is true that \$x_\text{s}(t) = 0\$ for \$ nT < t < (n+1)T \$, the value of \$x[n]\$ for any \$n\$ that is not an integer is undefined.

N.B.: The discrete signal \$x[n]\$ and all discrete-time operations on it, like the \$\mathcal{Z}\$-Transform, the Discrete-Time Fourier Transform (DTFT), the Discrete Fourier Transform (DFT), are "agnostic" regarding the sampling frequency or the sampling period \$T\$. Once you're in the discrete-time \$x[n]\$ domain, you do not know (or care) about \$T\$. It is only with the Nyquist-Shannon Sampling and Reconstruction Theorem that \$x[n]\$ and \$T\$ are put together.

The Fourier Transform of \$x_\text{s}(t)\$ is

$$ \begin{align}

X_\text{s}(j 2 \pi f) \triangleq \mathscr{F}\{ x_\text{s}(t) \} & = \mathscr{F}\left\{x(t) \cdot \left( T \cdot \operatorname{III}_T(t) \right) \right\} \\

& = \mathscr{F}\left\{x(t) \cdot \left( T \cdot \sum\limits_{k=-\infty}^{+\infty} \frac{1}{T} e^{j 2 \pi k f_\text{s} t} \right) \right\} \\

& = \mathscr{F}\left\{ \sum\limits_{k=-\infty}^{+\infty} x(t) \ e^{j 2 \pi k f_\text{s} t} \right\} \\

& = \sum\limits_{k=-\infty}^{+\infty} \mathscr{F}\left\{ x(t) \ e^{j 2 \pi k f_\text{s} t} \right\} \\

& = \sum\limits_{k=-\infty}^{+\infty} X\left(j 2 \pi (f - k f_\text{s})\right) \\

\end{align} $$
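The equality between the transform of the impulse train and the sum of shifted images can be checked numerically. The sketch below assumes a Gaussian test signal \$x(t) = e^{-\pi t^2}\$, whose transform under the convention above is \$X(j 2 \pi f) = e^{-\pi f^2}\$. The test signal, the function names, and the truncation limits are my choices for illustration. The Gaussian is not bandlimited, so the images overlap slightly, yet the two sides still agree.

```python
import numpy as np

def spectrum_from_samples(f, T, n_max=200):
    """T * sum_n x[n] exp(-j 2 pi f n T), the transform of the scaled impulse train."""
    n = np.arange(-n_max, n_max + 1)
    x_n = np.exp(-np.pi * (n * T) ** 2)          # x[n] = x(nT) for the Gaussian
    return T * np.sum(x_n * np.exp(-2j * np.pi * f * n * T))

def spectrum_from_images(f, T, k_max=50):
    """sum_k X(j 2 pi (f - k f_s)), the sum of shifted copies of the original spectrum."""
    fs = 1.0 / T
    k = np.arange(-k_max, k_max + 1)
    return np.sum(np.exp(-np.pi * (f - k * fs) ** 2))

T = 0.5  # assumed sampling period, so f_s = 2
for f in (0.0, 0.3, 1.0, 2.7):
    lhs = spectrum_from_samples(f, T)
    rhs = spectrum_from_images(f, T)
    print(f, lhs.real, rhs, abs(lhs - rhs))
```

Note that no factor of \$\frac{1}{T}\$ appears on the image side, which is the point of including \$T\$ in the sampling function.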

Important note about scaling: The sampling function \$ T \cdot \operatorname{III}_T(t) \$ and the sampled signal \$x_\text{s}(t)\$ have a factor of \$T\$ that you will not see in nearly all textbooks. That is a pedagogical mistake of the authors of these textbooks for multiple (related) reasons:

- First, leaving out the \$T\$ changes the dimension of the sampled signal \$x_\text{s}(t)\$ from the dimension of the signal getting sampled \$x(t)\$.

- That \$T\$ factor will be needed somewhere in the signal chain. These textbooks that leave it out of the sampling function end up putting it into the reconstruction part of the Sampling Theorem, usually as the passband gain of the reconstruction filter. That is dimensionally confusing. Someone might reasonably ask: "How do I design a brickwall LPF with passband gain of \$T\$?"

- As will be seen below, leaving the \$T\$ out here results in a similar scaling error for the net transfer function and net frequency response of the Zero-order Hold (ZOH). All textbooks on digital (and hybrid) control systems that I have seen make this mistake and it is a serious pedagogical error.

Note that the DTFT of \$x[n]\$ and the Fourier Transform of the sampled signal \$x_\text{s}(t)\$ are, with proper scaling, virtually identical:

DTFT:

$$ \begin{align}

X_\mathsf{DTFT}(\omega) & \triangleq \mathcal{Z}\{x[n]\} \Bigg|_{z=e^{j\omega}} \\

& = X_\mathcal{Z}(e^{j\omega}) \\

& = \sum\limits_{n=-\infty}^{+\infty} x[n] \ e^{-j \omega n} \\

\end{align} $$

It can be shown that

$$ X_\mathsf{DTFT}(\omega) = X_\mathcal{Z}(e^{j\omega}) = \frac{1}{T} X_\text{s}(j 2 \pi f)\Bigg|_{f=\frac{\omega}{2 \pi T}} $$
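A short sketch of why this holds, using only the definitions above: transform the scaled impulse train term by term (each \$\delta(t - nT)\$ transforms to \$e^{-j 2 \pi f n T}\$), then substitute \$ f = \frac{\omega}{2 \pi T} \$.

$$ \begin{align}
X_\text{s}(j 2 \pi f) & = \mathscr{F}\left\{ T \ \sum\limits_{n=-\infty}^{+\infty} x[n] \ \delta(t - nT) \right\} \\
& = T \ \sum\limits_{n=-\infty}^{+\infty} x[n] \ e^{-j 2 \pi f n T} \\
\frac{1}{T} X_\text{s}(j 2 \pi f)\Bigg|_{f=\frac{\omega}{2 \pi T}} & = \sum\limits_{n=-\infty}^{+\infty} x[n] \ e^{-j \omega n} = X_\mathsf{DTFT}(\omega) \\
\end{align} $$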

The above math is true whether \$x(t)\$ is "properly sampled" or not. \$x(t)\$ is "properly sampled" if \$x(t)\$ can be fully recovered from the samples \$x[n]\$ and knowledge of the sampling rate or sampling period. The Sampling Theorem tells us what is necessary to recover or reconstruct \$x(t)\$ from \$x[n]\$ and \$T\$.

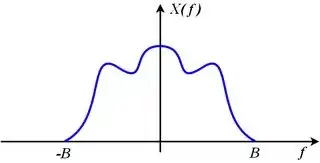

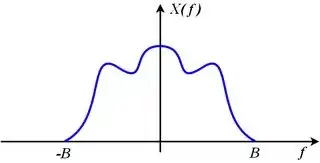

If \$x(t)\$ is bandlimited to some bandlimit \$B\$, that means

$$ X(j 2 \pi f) = 0 \quad \quad \text{for all} \quad |f| > B $$

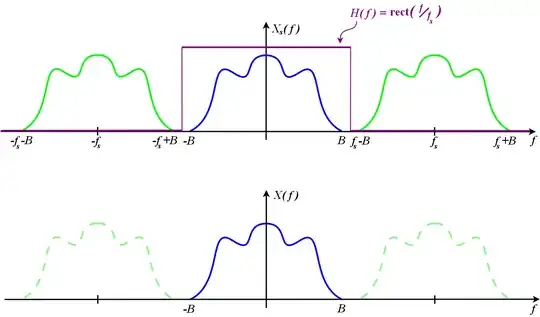

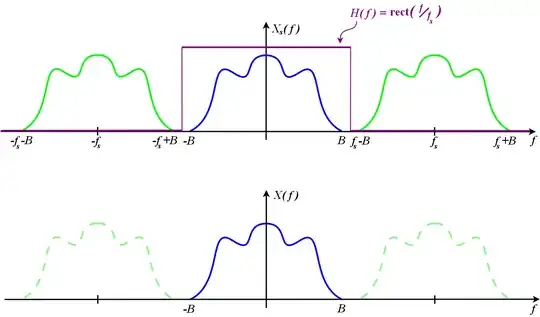

Consider the spectrum of the sampled signal made up of shifted images of the original:

$$ X_\text{s}(j 2 \pi f) = \sum\limits_{k=-\infty}^{+\infty} X\left(j 2 \pi (f - k f_\text{s})\right) $$

The original spectrum \$X(j 2 \pi f)\$ can be recovered from the sampled spectrum \$X_\text{s}(j 2 \pi f)\$ if none of the shifted images, \$X\left(j 2 \pi (f - k f_\text{s})\right)\$, overlap their adjacent neighbors. This means that the right edge of the \$k\$-th image (which is \$X\left(j 2 \pi (f - k f_\text{s})\right)\$) must be entirely to the left of the left edge of the (\$k+1\$)-th image (which is \$X\left(j 2 \pi (f - (k+1) f_\text{s})\right)\$). Restated mathematically,

$$ k f_\text{s} + B < (k+1) f_\text{s} - B $$

which is equivalent to

$$ f_\text{s} > 2B $$
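To illustrate what goes wrong when \$ f_\text{s} > 2B \$ is violated, here is a small sketch with assumed numbers: a 7 Hz cosine sampled at 10 Hz produces exactly the same samples as a 3 Hz cosine, because the image centered at \$f_\text{s}\$ lands on top of the baseband. The helper name and the frequencies are hypothetical.

```python
import numpy as np

def tone_samples(f0, fs, count=50):
    """Samples x[n] = cos(2 pi f0 n T) of a cosine at f0, taken at rate fs = 1/T."""
    n = np.arange(count)
    return np.cos(2 * np.pi * f0 * n / fs)

# Undersampled: f_s = 10 Hz is less than 2 * 7 Hz, so 7 Hz aliases to |7 - 10| = 3 Hz.
print(np.allclose(tone_samples(7.0, 10.0), tone_samples(3.0, 10.0)))

# Properly sampled: f_s = 20 Hz exceeds 2 * 7 Hz, so the two tones stay distinguishable.
print(np.allclose(tone_samples(7.0, 20.0), tone_samples(3.0, 20.0)))
```

Once the samples are identical, no reconstruction filter can tell the two signals apart.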

If we sample at a sampling rate that exceeds twice the bandwidth, none of the images overlap, and the original spectrum \$X(j 2 \pi f)\$, which is the image where \$k=0\$, can be extracted from \$X_\text{s}(j 2 \pi f)\$ with a brickwall low-pass filter that keeps the original image (where \$k=0\$) unscaled and discards all of the other images. That means it multiplies the original image by 1 and multiplies all of the other images by 0.

$$ \begin{align}

X(j 2 \pi f) & = \operatorname{rect}\left( \frac{f}{f_\text{s}} \right) \cdot X_\text{s}(j 2 \pi f) \\

& = H(j 2 \pi f) \ X_\text{s}(j 2 \pi f) \\

\end{align} $$

The reconstruction filter is

$$ H(j 2 \pi f) = \operatorname{rect}\left( \frac{f}{f_\text{s}} \right) $$

and has acausal impulse response:

$$ h(t) = \mathscr{F}^{-1} \{H(j 2 \pi f)\} = f_\text{s} \operatorname{sinc}(f_\text{s}t) $$

This filtering operation, expressed as multiplication in the frequency domain, is equivalent to convolution in the time domain:

$$ \begin{align}

x(t) & = h(t) \circledast x_\text{s}(t) \\

& = h(t) \circledast T \ \sum\limits_{n=-\infty}^{+\infty} x[n] \ \delta(t-nT) \\

& = T \ \sum\limits_{n=-\infty}^{+\infty} x[n] \ (h(t) \circledast \delta(t-nT) ) \\

& = T \ \sum\limits_{n=-\infty}^{+\infty} x[n] \ h(t-nT) \\

& = T \ \sum\limits_{n=-\infty}^{+\infty} x[n] \ \left(f_\text{s} \operatorname{sinc}(f_\text{s}(t-nT)) \right) \\

& = \sum\limits_{n=-\infty}^{+\infty} x[n] \ \operatorname{sinc}(f_\text{s}(t-nT)) \\

& = \sum\limits_{n=-\infty}^{+\infty} x[n] \ \operatorname{sinc}\left( \frac{t-nT}{T}\right) \\

\end{align} $$

That spells out explicitly how the original \$x(t)\$ is reconstructed from the samples \$x[n]\$ and knowledge of the sampling rate or sampling period.
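The reconstruction sum can be tried out directly. The following is a minimal sketch, assuming the test signal \$x(t) = \operatorname{sinc}^2(t)\$ (bandlimited to \$B = 1\$) sampled at \$f_\text{s} = 4\$. The infinite sum has to be truncated to a finite number of samples, so the result is only approximate. The function name and all numeric values are illustrative assumptions.

```python
import numpy as np

def sinc_reconstruct(x_n, n, T, t):
    """Evaluate sum_n x[n] sinc((t - nT)/T) over the finite set of samples given."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    # Rows index the output times t, columns index the sample numbers n.
    kernel = np.sinc((t[:, None] - n[None, :] * T) / T)
    return kernel @ x_n

T = 0.25                           # assumed sampling period, f_s = 4 > 2B with B = 1
n = np.arange(-2000, 2001)         # truncation of the infinite sum (an approximation)
x_n = np.sinc(n * T) ** 2          # x[n] = x(nT)

t = np.array([0.1, 0.37, 1.234])   # instants in between the sampling instants
x_hat = sinc_reconstruct(x_n, n, T, t)
print(np.max(np.abs(x_hat - np.sinc(t) ** 2)))   # truncation error, expected to be small
```

Each output value depends on samples both before and after \$t\$, which is the acausality of \$h(t)\$ noted above.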

So what is output from a practical Digital-to-Analog Converter (DAC) is neither

$$ \sum\limits_{n=-\infty}^{+\infty} x[n] \ \operatorname{sinc}\left( \frac{t-nT}{T}\right) $$

which needs no additional treatment to recover \$x(t)\$, nor

$$ x_\text{s}(t) = \sum\limits_{n=-\infty}^{+\infty} x[n] \ T \delta(t-nT) $$

which, with an ideal brickwall LPF, recovers \$x(t)\$ by isolating and retaining the baseband image and discarding all of the other images.

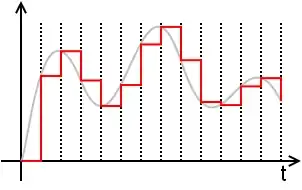

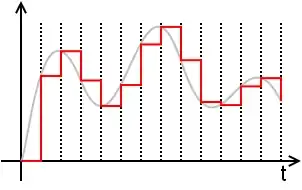

What comes out of a conventional DAC, if there is no processing or scaling done to the digitized signal, is the value \$x[n]\$ held at a constant value until the next sample is to be output. This results in a piecewise-constant function:

$$ x_\text{DAC}(t) = \sum\limits_{n=-\infty}^{+\infty} x[n] \ \operatorname{rect}\left(\frac{t-nT - \frac{T}{2}}{T} \right) $$

Note the delay of \$\frac{1}{2}\$ sample period applied to the \$\operatorname{rect}(\cdot)\$ function. This makes it causal. It means simply that

$$ x_\text{DAC}(t) = x[n] = x(nT) \quad \quad \text{when} \quad nT \le t < (n+1)T $$

Stated differently

$$ x_\text{DAC}(t) = x[n] = x(nT) \quad \quad \text{for} \quad n = \operatorname{floor}\left( \frac{t}{T} \right)$$

where \$\operatorname{floor}(u) = \lfloor u \rfloor\$ is the floor function, defined to be the largest integer not exceeding \$u\$.
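The floor-based description translates almost literally into code. This is a minimal sketch with a hypothetical helper name. It assumes a finite sample sequence starting at \$n = 0\$ and times restricted to \$0 \le t < NT\$.

```python
import numpy as np

def zoh_output(x_n, T, t):
    """Piecewise-constant DAC output x_DAC(t) = x[floor(t/T)] for 0 <= t < len(x_n) * T."""
    x_n = np.asarray(x_n)
    # Caveat: if T is not exactly representable in floating point, t/T at an exact
    # sampling instant can round just below the integer and select the previous sample.
    idx = np.floor(np.asarray(t, dtype=float) / T).astype(int)
    return x_n[idx]

x_n = [1.0, -2.0, 3.0]             # assumed sample values x[0], x[1], x[2]
T = 0.25                           # assumed sampling period (exact in binary)
t = np.array([0.0, 0.1, 0.25, 0.49, 0.5, 0.74])
print(zoh_output(x_n, T, t))       # [ 1.  1. -2. -2.  3.  3.]
```

Each sample value is held from \$t = nT\$ up to, but not including, \$t = (n+1)T\$.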

This DAC output is directly modeled as the output of a linear time-invariant (LTI) system, or filter, that accepts the ideally sampled signal \$x_\text{s}(t)\$ and, for each impulse in the ideally sampled signal, outputs this impulse response:

$$ h_\text{ZOH}(t) = \frac{1}{T} \operatorname{rect}\left(\frac{t - \frac{T}{2}}{T} \right) $$

Plugging in to check this...

$$ \begin{align}

x_\text{DAC}(t) & = h_\text{ZOH}(t) \circledast x_\text{s}(t) \\

& = h_\text{ZOH}(t) \circledast T \ \sum\limits_{n=-\infty}^{+\infty} x[n] \ \delta(t-nT) \\

& = T \ \sum\limits_{n=-\infty}^{+\infty} x[n] \ (h_\text{ZOH}(t) \circledast \delta(t-nT) ) \\

& = T \ \sum\limits_{n=-\infty}^{+\infty} x[n] \ h_\text{ZOH}(t-nT) \\

& = T \ \sum\limits_{n=-\infty}^{+\infty} x[n] \ \frac{1}{T} \operatorname{rect}\left(\frac{t - nT - \frac{T}{2}}{T} \right) \\

& = \sum\limits_{n=-\infty}^{+\infty} x[n] \ \operatorname{rect}\left(\frac{t - nT - \frac{T}{2}}{T} \right) \\

\end{align} $$

The DAC output \$x_\text{DAC}(t)\$, as the output of an LTI system with impulse response \$h_\text{ZOH}(t)\$, agrees with the piecewise-constant construction above. The input to this LTI system is the sampled signal \$x_\text{s}(t)\$, judiciously scaled so that, when there is no aliasing (\$f_\text{s} > 2B\$), the baseband image of \$x_\text{s}(t)\$ is exactly the same as the spectrum of the original signal being sampled, \$x(t)\$. That is

$$ X(j 2 \pi f) = X_\text{s}(j 2 \pi f) \quad \quad \text{for} \quad -\frac{f_\text{s}}{2} < f < +\frac{f_\text{s}}{2} $$

The original signal spectrum is the same as the sampled spectrum, but with all of the images that appeared due to sampling discarded.

The transfer function of this LTI system, which we call the Zero-order hold (ZOH), is the Laplace Transform of the impulse response:

$$ \begin{align}

H_\text{ZOH}(s) & = \mathscr{L} \{ h_\text{ZOH}(t) \} \\

& \triangleq \int\limits_{-\infty}^{+\infty} h_\text{ZOH}(t) \ e^{-s t} \ \text{d}t \\

& = \int\limits_{-\infty}^{+\infty} \frac{1}{T} \operatorname{rect}\left(\frac{t - \frac{T}{2}}{T} \right) \ e^{-s t} \ \text{d}t \\

& = \int\limits_0^T \frac{1}{T} \ e^{-s t} \ \text{d}t \\

& = \frac{1}{T} \quad \frac{1}{-s}e^{-s t}\Bigg|_0^T \\

& = \frac{1-e^{-sT}}{sT} \\

\end{align}$$

The frequency response is obtained by substituting \$ s = j 2 \pi f \$:

$$ \begin{align}

H_\text{ZOH}(j 2 \pi f) & = \frac{1-e^{-j2\pi fT}}{j2\pi fT} \\

& = e^{-j\pi fT} \frac{e^{j\pi fT}-e^{-j\pi fT}}{j2\pi fT} \\

& = e^{-j\pi fT} \frac{\sin(\pi fT)}{\pi fT} \\

& = e^{-j\pi fT} \operatorname{sinc}(fT) \\

& = e^{-j\pi fT} \operatorname{sinc}\left(\frac{f}{f_\text{s}}\right) \\

\end{align}$$

This indicates a linear phase filter with constant delay of one-half sample period, \$\frac{T}{2}\$, and with gain that decreases as frequency \$f\$ increases. This is a mild low-pass filter effect. At DC, \$f=0\$, the gain is 0 dB and at Nyquist, \$f=\frac{f_\text{s}}{2}\$ the gain is -3.9224 dB. So the baseband image has some of the high frequency components reduced a little.
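These numbers are easy to reproduce. The sketch below evaluates the ZOH frequency response and checks the gain at DC and at Nyquist, the agreement with the unsimplified form \$\frac{1-e^{-j2\pi fT}}{j2\pi fT}\$, and the half-sample delay. The sampling rate of 48 kHz and the function names are assumptions chosen for illustration.

```python
import numpy as np

def zoh_frequency_response(f, T):
    """H_ZOH(j 2 pi f) = exp(-j pi f T) * sinc(f T), with the normalized sinc."""
    return np.exp(-1j * np.pi * f * T) * np.sinc(f * T)

def gain_db(h):
    """Magnitude of a complex frequency response in dB."""
    return 20 * np.log10(np.abs(h))

fs = 48000.0                       # assumed sampling rate
T = 1.0 / fs

print(gain_db(zoh_frequency_response(0.0, T)))       # gain at DC
print(gain_db(zoh_frequency_response(fs / 2, T)))    # gain at Nyquist, about -3.9224 dB

f = 1000.0
h = zoh_frequency_response(f, T)
unsimplified = (1 - np.exp(-2j * np.pi * f * T)) / (2j * np.pi * f * T)
print(abs(h - unsimplified))                         # the two forms agree
print(-np.angle(h) / (2 * np.pi * f), T / 2)         # phase delay vs. half a sample
```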

As with the sampled signal \$x_\text{s}(t)\$, there are images in the DAC output \$x_\text{DAC}(t)\$ at integer multiples of the sampling frequency, but those images are significantly reduced in amplitude (compared to the baseband image) because \$|H_\text{ZOH}(j 2 \pi f)|\$ passes through zero when \$f = k\cdot f_\text{s}\$ for integer \$k\$ that is not 0, which is right in the middle of those images.
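To put rough numbers on that image attenuation, here is a sketch under assumed values: a 1 kHz tone with \$f_\text{s}\$ = 48 kHz, whose first images sit at \$f_\text{s} \pm 1\$ kHz. Only the magnitude \$|\operatorname{sinc}(f/f_\text{s})|\$ is needed. The function name and the frequencies are hypothetical.

```python
import numpy as np

def zoh_gain_db(f, fs):
    """ZOH magnitude response |sinc(f / fs)| expressed in dB."""
    return 20 * np.log10(np.abs(np.sinc(f / fs)))

fs = 48000.0    # assumed sampling rate
f0 = 1000.0     # assumed tone frequency inside the baseband

print("baseband:", zoh_gain_db(f0, fs))
for k in (1, 2):
    # The k-th pair of images of the tone sits just below and just above k * fs.
    print(k, zoh_gain_db(k * fs - f0, fs), zoh_gain_db(k * fs + f0, fs))

# The magnitude itself (not in dB) is numerically zero at the image centers k * fs.
print(np.abs(np.sinc(np.array([1.0, 2.0, 3.0]))))
```

The closer the signal content is to DC, the closer its images are to the nulls and the more strongly they are suppressed. Content near Nyquist has images that are attenuated far less.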

Concluding:

The Zero-order hold (ZOH) is a linear time-invariant model of the signal reconstruction done by a practical Digital-to-Analog converter (DAC) that holds the output constant at the sample value, \$x[n]\$, until updated by the next sample \$x[n+1]\$.

Contrary to a common misconception, the ZOH has nothing to do with the sample-and-hold circuit (S/H) one might find preceding an Analog-to-Digital converter (ADC). As long as the DAC holds the output to a constant value over each sampling period, the ZOH effect remains whether the ADC has an S/H or not. If the DAC outputs something other than the piecewise-constant output depicted above as \$x_\text{DAC}(t)\$ (such as a sequence of narrow pulses intended to approximate Dirac impulses), then the ZOH effect is not present (something else is, instead), whether there is an S/H circuit preceding the ADC or not.

The net transfer function of the ZOH is $$ H_\text{ZOH}(s) = \frac{1-e^{-sT}}{sT} $$ and the net frequency response of the ZOH is $$ H_\text{ZOH}(j 2 \pi f) = e^{-j\pi fT} \operatorname{sinc}(fT) $$ Many textbooks leave out the \$T\$ factor in the denominator of the transfer function and that is a mistake.

The ZOH reduces the images of the sampled signal \$x_\text{s}(t)\$ significantly, but does not eliminate them. To eliminate the images, one needs a good low-pass filter as before. Brickwall LPFs are an idealization. A practical LPF may also attenuate the baseband image (that we want to keep) at high frequencies, and that attenuation must be accounted for, as must the attenuation that results from the ZOH (which is less than 3.9224 dB for frequencies below Nyquist). The ZOH also delays the signal by one-half sample period, which may have to be taken into consideration (along with the delay of the anti-imaging LPF), particularly if the ZOH is in a feedback loop.