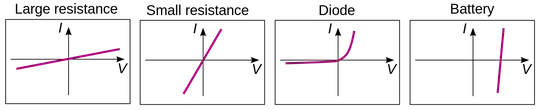

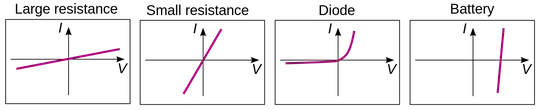

You will find your answer in the characteristic curves of the devices in question.

Consider the characteristic curves of the resistive loads. Current increases linearly with voltage. This means for any applied voltage, a certain proportional current will flow through the device. Lamps are approximately resistive loads so they behave this way. The important thing to know is that an increase in voltage produces a proportional increase in current.

Regarding your part a: if you increase the voltage across a resistive load by a factor of \$110\$, the current also increases by a factor of \$110\$. Since \$P=IV\$, the power increases by a factor of \$110^2=12100\$. This will almost certainly destroy your \$2V\$ lamp. Resistive loads don't "draw what they want"; they draw a current that is proportional to the applied voltage.
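The arithmetic above can be sketched in a few lines of Python. This assumes an ideal load with a fixed resistance, which a real lamp only approximates (filament resistance changes with temperature), and the function name is just for illustration:

```python
def scaled_resistive_load(voltage_factor):
    """Return (current_factor, power_factor) for an ideal, fixed resistance.

    Assumption: the resistance stays constant as the voltage changes.
    """
    # Ohm's law: I = V / R, so current scales by the same factor as voltage.
    current_factor = voltage_factor
    # P = I * V, so power scales by the product of both factors.
    power_factor = voltage_factor * current_factor
    return current_factor, power_factor


current_factor, power_factor = scaled_resistive_load(110)
print(f"current x{current_factor}, power x{power_factor}")
```

For a factor of 110 this gives the same 110 and 12100 as the figures above.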

Now, consider the curve for the diode. The current through the diode is an exponential function of the voltage across it, so even a small increase in voltage multiplies the current many times over. In practice, the exponential is so steep that any voltage much over \$\approx0.6V\$ (for silicon) will produce a huge current.

In theory you could run an LED without any type of resistor ballasting. You'd need to control the applied voltage very precisely, though. Even a small deviation in the applied voltage will create large changes in current due to the steepness of the exponential curve.
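To get a feel for how steep the curve is, here is a rough sketch using the ideal Shockley diode equation, \$I = I_S(e^{V/(nV_T)}-1)\$. The saturation current, ideality factor, and the \$100\Omega\$ comparison resistor are illustrative assumptions, not values from any datasheet. Real diodes and LEDs also have series resistance that flattens the curve at high current, so treat the numbers as qualitative only:

```python
import math


def diode_current(v, i_s=1e-12, n=1.0, v_t=0.02585):
    """Ideal Shockley equation: I = Is * (exp(V / (n * Vt)) - 1).

    i_s, n and v_t are assumed, illustrative values (v_t is roughly the
    thermal voltage near room temperature).
    """
    return i_s * (math.exp(v / (n * v_t)) - 1.0)


def resistor_current(v, r):
    """Ohm's law for comparison: I = V / R."""
    return v / r


v_nominal = 0.60
v_high = v_nominal * 1.10  # a 10% error in the applied voltage

diode_ratio = diode_current(v_high) / diode_current(v_nominal)
resistor_ratio = resistor_current(v_high, 100.0) / resistor_current(v_nominal, 100.0)

print(f"resistor current changes by a factor of {resistor_ratio:.2f}")
print(f"diode current changes by a factor of {diode_ratio:.1f}")
```

Under these assumptions, a 10% voltage error changes the resistor current by 10%, but changes the ideal diode current by roughly a factor of ten.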

For example, the 1N4001 diode begins to conduct at \$\approx0.6V\$. At \$1V\$, current is around \$2A\$. At \$1.4V\$ current is over \$10A\$. And this is in a device that has a maximum current of only \$1A\$!

So, unlike with a resistive load, setting the current through a diode or LED by adjusting the voltage is not a very good idea. A better way is to set the current directly. A very simple way to do this is to use a series resistor.

Regarding your part c, connecting three \$2V\$ LEDs in series to a \$12V\$ supply: the purpose of the resistor in that circuit is to limit the current, not the voltage. Consider a circuit with the \$12V\$ supply feeding a resistor and the three LEDs, all in series, which I think is what you had in mind.


Notice that across each LED, the voltage drops by approximately one diode drop (which is in this case \$2V\$). Since there are 3 LEDs, the LEDs take up \$6V\$ in total, which leaves the remaining \$6V\$ to fall across the resistor. Then, you need only choose the value of the resistor so the current through it is what you want. The resistor doesn't lower the voltage to the LEDs; its resistance limits the current.
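As a worked example of choosing that resistor, here is a minimal sketch. The \$20mA\$ target current is an assumption on my part (use the figure from your LED's datasheet), and the function name is hypothetical:

```python
def series_resistor(v_supply, v_forward, n_leds, i_target):
    """Size the current-limiting resistor for a series LED string.

    Returns (voltage across resistor, resistance in ohms, resistor power in watts).
    Treats each LED as a fixed forward drop, which is only an approximation.
    """
    v_resistor = v_supply - n_leds * v_forward
    if v_resistor <= 0:
        raise ValueError("supply voltage is too low for this LED string")
    resistance = v_resistor / i_target        # Ohm's law: R = V / I
    power = v_resistor * i_target             # P = V * I dissipated in the resistor
    return v_resistor, resistance, power


# 12 V supply, three 2 V LEDs, assumed 20 mA target current
v_r, r, p = series_resistor(v_supply=12.0, v_forward=2.0, n_leds=3, i_target=0.020)
print(f"resistor drop: {v_r:.1f} V, resistance: {r:.0f} ohm, power: {p:.2f} W")
```

With these numbers the resistor works out to about \$300\Omega\$ and dissipates about \$0.12W\$. In practice you would pick the nearest standard value at or above the calculated resistance.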