I need to power many 100 W, 32-36 V LEDs, and building loads of 32 V PSUs is costly and time-consuming.

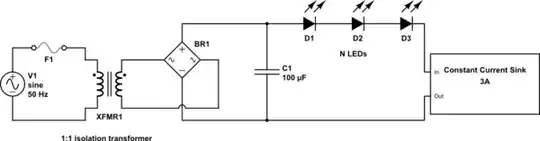

This is a bit of a crazy idea, and I'm aware that it's probably not the safest way to do this, but this circuit has just popped into my head:

The tiny detail that I'm not quite sure about is the DC voltage after the rectifier:

- Should I calculate using 240 V? Then 7 LEDs connected in series across 240 V would give about 34.3 V per LED.

- Or should I calculate using the peak (non-RMS) voltage of about 340 V (240 V × √2)? Then 10 LEDs connected in series across 340 V would give 34 V per LED.
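The arithmetic behind the two options can be sketched in a few lines of Python. This is only a sketch that reproduces the sums above under stated assumptions: a 240 V RMS sinusoidal supply, and identical LEDs that split the bus voltage equally, which is an idealisation because real LEDs are current-driven and their forward voltages vary. It does not decide which of the two bus voltages is the right one to design for. The helper names `volts_per_led` and `string_lengths_in_window` are hypothetical, introduced here for illustration.

```python
import math

# Assumption: 240 V RMS sinusoidal mains, as stated in the question.
V_RMS = 240.0
V_PEAK = V_RMS * math.sqrt(2)  # about 339.4 V, the "340 V" figure

# Per-LED forward-voltage window quoted for these 100 W LEDs.
LED_V_MIN, LED_V_MAX = 32.0, 36.0


def volts_per_led(bus_voltage: float, n_leds: int) -> float:
    """Voltage per LED, assuming n identical LEDs share the bus equally (idealised)."""
    return bus_voltage / n_leds


def string_lengths_in_window(bus_voltage: float) -> list[int]:
    """Series string lengths whose equal per-LED share lands inside 32-36 V."""
    lo = math.ceil(bus_voltage / LED_V_MAX)    # fewest LEDs so each gets <= 36 V
    hi = math.floor(bus_voltage / LED_V_MIN)   # most LEDs so each gets >= 32 V
    return list(range(lo, hi + 1))


if __name__ == "__main__":
    for label, v in (("RMS", V_RMS), ("peak", V_PEAK)):
        ns = string_lengths_in_window(v)
        shares = ", ".join(f"{n} LEDs -> {volts_per_led(v, n):.1f} V" for n in ns)
        print(f"{label} {v:.1f} V: {shares}")
```

Running it prints `RMS 240.0 V: 7 LEDs -> 34.3 V` and `peak 339.4 V: 10 LEDs -> 33.9 V`, i.e. each assumed bus voltage admits exactly one string length inside the 32-36 V window.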

N.B. I'm aware the picture shows 8 LEDs, but it's just to visualise what I'm talking about.

If anybody knows of a quicker, easier or safer way to do this, please let me know!