In theory, a silicon diode has a forward voltage drop of about 0.7V. But it's difficult, if not impossible, to manufacture every real-life diode with the same part number so that each one has exactly the same voltage drop. So every part is accompanied by a data sheet, such as this one, which typically spells out the minimum, typical, and maximum values for each parameter.

Note that this table gives no typical values. And for the 1N4148 (a very common diode), it gives only a maximum, with no minimum as it does for some of the other parts.

Plus, the value is given for only one particular current, namely 10 mA.
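A row of such a table can be thought of as a record whose minimum, typical, and maximum fields are each optional and tied to a test condition. The Python sketch below is illustrative only: the `DatasheetSpec` class and its field names are hypothetical, and the one value it encodes is the 1N4148's 1V maximum forward voltage at 10 mA.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class DatasheetSpec:
    """One row of a data sheet characteristics table (hypothetical structure)."""
    part: str
    symbol: str
    unit: str
    condition: str                   # test condition the limits apply to
    minimum: Optional[float] = None  # None: the data sheet gives no minimum
    typical: Optional[float] = None  # None: no typical value listed
    maximum: Optional[float] = None  # None: the data sheet gives no maximum

    def guaranteed_range(self) -> tuple[Optional[float], Optional[float]]:
        """Return (min, max); None means no guarantee on that side."""
        return (self.minimum, self.maximum)


# 1N4148 forward voltage: only a maximum, and only at one test current.
vf_1n4148 = DatasheetSpec(
    part="1N4148", symbol="VF", unit="V", condition="IF = 10 mA", maximum=1.0
)

print(vf_1n4148.guaranteed_range())
```

The empty fields make the point of the table explicit: a missing minimum is not zero, it is simply not guaranteed.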

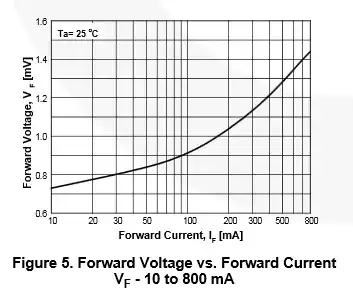

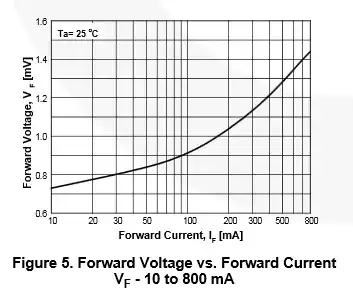

What about other current levels? That is where the graphs come in. Datasheets are typically filled with graphs. Here is one that expands on the forward voltage vs. forward current:

Unlike the table, which specifies a maximum forward voltage at 10 mA, the graphs usually show typical values. So at 10 mA the typical forward voltage is 720 mV, well below the 1V maximum. At 800 mA, the voltage rises above 1.4V, twice the 0.7V usually associated with silicon diodes.

Electrical engineers use these worst-case values, either the minimum or the maximum, together with the minimums and maximums from the data sheets of the other parts in the circuit, to compute the circuit's worst-case behavior and make sure it falls within their design specifications.
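As a minimal sketch of this kind of worst-case calculation, consider a hypothetical circuit (not taken from any particular design): a resistor in series with a forward-biased diode, where I = (Vcc - VF) / R. The supply tolerance, resistor value, diode VF limits, and the 3 mA to 5 mA current requirement are all assumptions chosen for illustration. Each extreme of the current comes from combining the extremes of the individual parts that push it in the same direction.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    """Worst-case range of a parameter."""
    lo: float
    hi: float


def with_tolerance(nominal: float, tol: float) -> Limits:
    """Range for a nominal value with a symmetric fractional tolerance (0.01 = 1%)."""
    return Limits(nominal * (1 - tol), nominal * (1 + tol))


def series_diode_current(vcc: Limits, vf: Limits, r: Limits) -> Limits:
    """Worst-case current I = (Vcc - VF) / R through a resistor and diode in series.

    The maximum needs high Vcc, low VF, and low R; the minimum needs the opposite.
    """
    return Limits((vcc.lo - vf.hi) / r.hi, (vcc.hi - vf.lo) / r.lo)


# Hypothetical design values (assumptions, not from a real data sheet):
vcc = with_tolerance(5.0, 0.05)   # 5 V supply, +/-5%
vf = Limits(0.6, 1.0)             # assumed diode VF range at this current
r = with_tolerance(1000.0, 0.01)  # 1 kOhm, 1% resistor
spec = Limits(3e-3, 5e-3)         # hypothetical requirement: 3 mA to 5 mA

i = series_diode_current(vcc, vf, r)
within_spec = spec.lo <= i.lo and i.hi <= spec.hi
print(f"I: {i.lo * 1e3:.2f} mA to {i.hi * 1e3:.2f} mA, within spec: {within_spec}")
```

Using the nominal values alone (5 V, 1 kOhm, and a single VF) would give one current and hide how far it can actually move.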

Sometimes the value of a component can be off by quite a bit without making any difference. For example, some engineers use 4.99K pullup resistors and others use 10K; both will work. So you don't really need a precise value, and you could use a 20% part (if they still existed). However, just about everyone uses 1% resistors for everything nowadays, because the price difference between 1% and 5% resistors is practically nil (typically $0.0002, or 2/100 of a cent, in production quantities).
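To put numbers on this, the short sketch below computes the resistance window each tolerance allows for the two pullup values mentioned. The helper name `tolerance_band` is hypothetical. Even the widest band, 10K at 20% (8K to 12K), spans less than the gap between 4.99K and 10K, two values engineers already treat as interchangeable.

```python
def tolerance_band(nominal_ohms: float, tolerance: float) -> tuple[float, float]:
    """Lowest and highest resistance allowed by a fractional tolerance (0.05 = 5%)."""
    return nominal_ohms * (1 - tolerance), nominal_ohms * (1 + tolerance)


for nominal in (4_990.0, 10_000.0):
    for tol in (0.20, 0.05, 0.01):
        lo, hi = tolerance_band(nominal, tol)
        print(f"{nominal:>7.0f} ohm +/-{tol:.0%}: {lo:,.0f} to {hi:,.0f} ohm")
```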

Worst-case minimum and maximum values don't just apply to analog circuits; they apply to digital ones as well. One important parameter is the minimum voltage a gate's output guarantees when driving a logic 1 (VOH min). It must be higher than the minimum input voltage guaranteed to be recognized as a 1 (VIH min) by every gate it is connected to. This is not a problem within the same logic family (they are designed to work together), but it can be an issue when mixing logic families.
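This comparison is easy to write down directly. The sketch below assumes illustrative 5 V thresholds roughly in line with 74LS (TTL) and 74HC (CMOS) data sheets; real limits depend on supply voltage and load current, so check the actual data sheets. It also applies the matching rule for a logic 0 (the driver's maximum low output, VOL max, must be below the receiver's maximum low input, VIL max), and it checks voltage levels only, not output current or fan-out. The names `LogicLevels`, `noise_margins`, and `compatible` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LogicLevels:
    """Worst-case logic thresholds for one logic family, in volts."""
    name: str
    voh_min: float  # lowest output voltage guaranteed for a 1
    vol_max: float  # highest output voltage guaranteed for a 0
    vih_min: float  # lowest input voltage guaranteed to read as a 1
    vil_max: float  # highest input voltage guaranteed to read as a 0


def noise_margins(driver: LogicLevels, receiver: LogicLevels) -> tuple[float, float]:
    """Return (high-level margin, low-level margin) in volts."""
    high = driver.voh_min - receiver.vih_min
    low = receiver.vil_max - driver.vol_max
    return high, low


def compatible(driver: LogicLevels, receiver: LogicLevels) -> bool:
    """True if both margins are positive (voltage levels only, not drive current)."""
    high, low = noise_margins(driver, receiver)
    return high > 0 and low > 0


# Illustrative 5 V thresholds, roughly in line with 74LS and 74HC data sheets.
ttl_like = LogicLevels("74LS-like", voh_min=2.7, vol_max=0.5, vih_min=2.0, vil_max=0.8)
cmos_like = LogicLevels("74HC-like", voh_min=4.4, vol_max=0.1, vih_min=3.5, vil_max=1.5)

for drv, rcv in [(ttl_like, ttl_like), (ttl_like, cmos_like), (cmos_like, ttl_like)]:
    high, low = noise_margins(drv, rcv)
    print(f"{drv.name} -> {rcv.name}: high {high:+.1f} V, low {low:+.1f} V, "
          f"ok={compatible(drv, rcv)}")
```

With these numbers, the 74LS-like output's 2.7 V minimum falls short of the 74HC-like input's 3.5 V threshold, which is exactly the kind of mixed-family mismatch described above.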

Another parameter that must be considered in logic circuits is the propagation delay: the time it takes for a change at a gate's input to appear at its output. It is usually specified in nanoseconds (ns).
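When gates are chained, a conservative first estimate of a path's delay is the sum of each gate's maximum propagation delay, the same worst-case approach used for analog values. The sketch below uses hypothetical delay values and a hypothetical timing budget, and it ignores wiring delay and flip-flop setup and hold times; the function name `worst_case_path_delay_ns` is also hypothetical.

```python
def worst_case_path_delay_ns(max_delays_ns: list[float]) -> float:
    """Conservative path delay: sum of each gate's maximum propagation delay."""
    return sum(max_delays_ns)


# Hypothetical path through three gates; tPD(max) values are illustrative, in ns.
path_ns = [15.0, 22.0, 15.0]
budget_ns = 60.0  # hypothetical time the signal is allowed to take

delay_ns = worst_case_path_delay_ns(path_ns)
print(f"worst-case delay {delay_ns:.1f} ns, meets budget: {delay_ns <= budget_ns}")
```

Summing typical delays instead would give a smaller number that some parts in production would fail to meet.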