As the existing answers by Spehro and Dave state, the limiting factor is the heat generated by the current.

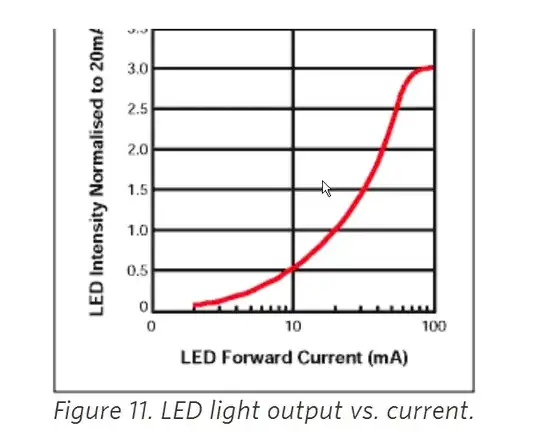

As the current increases, light output increases, but at high current the junction of the LED becomes hot. The hotter the junction, the less efficient the LED becomes. Thus you reach a point where increasing the current actually decreases light output, simply because the LED becomes less efficient at turning electricity into light.
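To make the roll-over concrete, here is a minimal sketch of a toy model. Everything in it is an assumption chosen for illustration, not data from a real part: a fixed 3 V forward voltage, a junction-to-ambient thermal resistance of 20 K/W, 30% efficiency at 25 °C, and an efficiency that falls linearly by 0.5% per kelvin. It also pessimistically treats all input power as heat.

```python
def junction_temp_c(current_a, v_f=3.0, r_th_k_per_w=20.0, t_ambient_c=25.0):
    """Junction temperature, pessimistically treating all input power as heat."""
    return t_ambient_c + r_th_k_per_w * v_f * current_a


def light_output_w(current_a, v_f=3.0, r_th_k_per_w=20.0, t_ambient_c=25.0,
                   eff_at_25c=0.3, derate_per_k=0.005):
    """Optical power of a toy LED whose efficiency falls linearly with temperature."""
    t_j = junction_temp_c(current_a, v_f, r_th_k_per_w, t_ambient_c)
    # Illustrative linear derating; clamp at zero so efficiency never goes negative.
    efficiency = max(0.0, eff_at_25c * (1.0 - derate_per_k * (t_j - 25.0)))
    return efficiency * v_f * current_a


def best_current_a(r_th_k_per_w, step_a=0.01, max_a=10.0):
    """Scan drive currents and return the one that gives the most light."""
    steps = int(max_a / step_a)
    currents = [i * step_a for i in range(1, steps + 1)]
    return max(currents, key=lambda i: light_output_w(i, r_th_k_per_w=r_th_k_per_w))


if __name__ == "__main__":
    for amps in (0.5, 1.0, 1.5, 2.0, 2.5, 3.0):
        print(f"{amps:.1f} A -> {light_output_w(amps):.3f} W of light")
    print("peak with 20 K/W:", round(best_current_a(20.0), 2), "A")
    print("peak with 10 K/W:", round(best_current_a(10.0), 2), "A")
```

In this model the output rises, peaks, and then falls as the current keeps climbing. Lowering the thermal resistance moves the peak to a higher current. Real LEDs will not follow these exact numbers; take the shape of the curve from the sketch, not the values.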

It is common practice to improve the efficiency of an LED by cooling it with a heatsink. (A few people also call these "heat plates", since some popular LEDs come pre-mounted on copper-laden PCBs.)

To get the best ratio of light output to current from an LED set-up, the general practice is to use more than one LED and under-drive each of them. By using less current per LED you are rewarded with more efficiency, at the cost of using more LEDs in the design.
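As a rough illustration of that trade-off, the sketch below splits the same total current across 1, 2, and 4 identical LEDs. It uses a toy model in which efficiency falls linearly with self-heating. All the numbers are assumed for illustration (3 V forward voltage, 20 K/W per LED, 30% cold efficiency, 0.5% loss per kelvin), and each LED is assumed to have its own thermal path.

```python
def light_output_w(current_a, v_f=3.0, r_th_k_per_w=20.0,
                   eff_cold=0.3, derate_per_k=0.005):
    """Optical power of one toy LED; efficiency drops linearly with self-heating."""
    temp_rise_k = r_th_k_per_w * v_f * current_a  # all input power treated as heat
    efficiency = max(0.0, eff_cold * (1.0 - derate_per_k * temp_rise_k))
    return efficiency * v_f * current_a


def array_output_w(total_current_a, led_count):
    """Total light when the current is split evenly across identical LEDs."""
    return led_count * light_output_w(total_current_a / led_count)


if __name__ == "__main__":
    for count in (1, 2, 4):
        print(f"{count} LED(s) sharing 1 A: {array_output_w(1.0, count):.3f} W of light")
```

Under these assumptions, each extra LED runs cooler, so the same total current yields more light, with diminishing returns as the count grows.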

LEDs can also be driven with higher current in pulses than they can handle continuously. This is used to great effect in some stage lighting equipment, as well as in other products that use high-intensity strobing effects, such as this Rescue Beacon.
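The reason pulsing helps is that the average heat scales with the duty cycle. The sketch below is back-of-the-envelope arithmetic only. It assumes a constant 3 V forward voltage (in practice it rises somewhat with current) and pulses short enough that the junction does not heat up appreciably within a single pulse. The datasheet's peak pulsed current rating still applies.

```python
def average_power_w(peak_current_a, duty_cycle, forward_voltage_v=3.0):
    """Average electrical power for a rectangular pulse train."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return peak_current_a * forward_voltage_v * duty_cycle


if __name__ == "__main__":
    continuous = average_power_w(1.0, 1.0)   # 1 A, always on
    strobed = average_power_w(3.0, 0.1)      # 3 A peak, on 10% of the time
    print(f"continuous 1 A: {continuous:.2f} W average")
    print(f"3 A at 10% duty: {strobed:.2f} W average")
```

With these illustrative values, the strobed LED sees three times the peak current yet less than a third of the average heating.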

Overall an LED is limited in intensity by the amount of heat it generates.