In terms of behaviour of the input protection circuit, you have three choices:

1. Clamp: if the voltage goes too high, it is held at the clamp voltage, which is equal to or less than the ADC's maximum input voltage.

2. Limiter: an amplifier whose gain is reduced as the input voltage increases, so that its output never exceeds the ADC's maximum input voltage.

3. Input disconnection: this option has been covered by another poster, so I won't cover it here.

The problem with option 2 is that the signal you want to measure passes through the amplifier and is therefore modified by the varying gain.

In theory, you could use option 2 if you only activated the variable gain feature when the signal of interest got very close to the ADC's maximum input, so that over most of the ADC's input range the gain of the variable gain amplifier would be 1 (\$V_{out} = V_{in}\$).

You'd have to use a comparator circuit to trigger the variable gain feature. The gain of an op-amp circuit is set by its resistors (for a simple inverting amplifier, gain = \$-R_f/R_{in}\$), so you'd need a FET or other transistor in the feedback path of the op-amp to allow the value of \$R_f\$ to change.
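To make the resistor arithmetic concrete, here is a minimal Python sketch. It assumes an ideal op-amp and models the FET as a switch that, when on, places an extra resistor (the hypothetical `r_shunt`, plus an assumed on-resistance `r_ds_on`) in parallel with \$R_f\$. The function names and component values are illustrative only.

```python
def parallel(r1: float, r2: float) -> float:
    """Equivalent resistance (ohms) of two resistors in parallel."""
    return r1 * r2 / (r1 + r2)


def inverting_gain(r_f: float, r_in: float) -> float:
    """Gain of an ideal inverting op-amp stage: -Rf / Rin."""
    return -r_f / r_in


def switched_feedback_gain(r_in: float, r_f: float, r_shunt: float,
                           fet_on: bool, r_ds_on: float = 0.0) -> float:
    """Gain when a FET switch can put (r_shunt + r_ds_on) in parallel with Rf.

    Assumes an ideal op-amp and a FET that is either fully off (open
    circuit) or fully on with resistance r_ds_on.
    """
    if fet_on:
        # The switched branch lowers the effective feedback resistance.
        r_eff = parallel(r_f, r_shunt + r_ds_on)
    else:
        # FET off: only Rf sets the gain.
        r_eff = r_f
    return inverting_gain(r_eff, r_in)


# Rin = Rf = 10 kOhm gives unity (inverting) gain; switching in a further
# 10 kOhm halves the effective Rf, and therefore the gain.
print(switched_feedback_gain(10e3, 10e3, 10e3, fet_on=False))  # -1.0
print(switched_feedback_gain(10e3, 10e3, 10e3, fet_on=True))   # -0.5
```

A real FET's on-resistance depends on its gate drive and on the signal voltage, which is one more way this approach can end up modifying the signal.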

For example, design a comparator using an op-amp (easy enough) that trips at, say, 4.9 volts and switches the FET in the amplifier's feedback path, changing the resistance in the op-amp's feedback loop. From 0 to 4.9 volts, the amplifier has unity gain and the input signal passes unchanged to the ADC input.
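The behaviour of the whole chain (comparator, switched-gain inverting amplifier, then a unity-gain inverter to put the signal the right way up) can be sketched in a few lines of Python. This assumes ideal op-amps and an ideal comparator with no hysteresis; the 4.9 V threshold comes from the example above, while the reduced gain of 0.5 and the name `limiter_chain` are hypothetical.

```python
THRESHOLD_V = 4.9      # comparator trip point from the example above
REDUCED_GAIN = 0.5     # hypothetical gain magnitude once the FET switches


def limiter_chain(v_in: float,
                  threshold: float = THRESHOLD_V,
                  reduced_gain: float = REDUCED_GAIN) -> float:
    """Output of comparator + switched-gain inverting amp + unity inverter.

    Assumes ideal op-amps and an ideal comparator with no hysteresis.
    """
    # Stage 1: the comparator decides whether the FET is switched on.
    fet_on = v_in >= threshold
    # Stage 2: inverting amplifier with gain -1 or -reduced_gain.
    gain = -reduced_gain if fet_on else -1.0
    v_stage2 = gain * v_in
    # Stage 3: unity-gain inverter restores the original polarity.
    return -v_stage2


for v in (1.0, 4.8, 4.9, 6.0, 9.8):
    print(f"{v:4.1f} V in -> {limiter_chain(v):.2f} V out")
```

Below 4.9 V the output equals the input, but at the threshold the output steps down from 4.9 V to about 2.45 V. That jump is exactly the kind of signal modification described above, and it is one reason practical audio limiters usually change their gain gradually rather than in a single step.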

In theory this can be done, but to be honest, it's getting complicated.

I reckon you'd need three operational amplifiers: one for the comparator, one for the variable gain amplifier, and possibly a third to invert the signal so it's the right way up for the ADC input. This kind of approach is used in the audio business to limit the output level: as the input audio level rises, the gain of the amplifier circuit is reduced so that the output doesn't go too high.

Option 1: Clamp

Diodes and Zeners are often used to protect the logic inputs of digital chips from high-voltage static electricity.

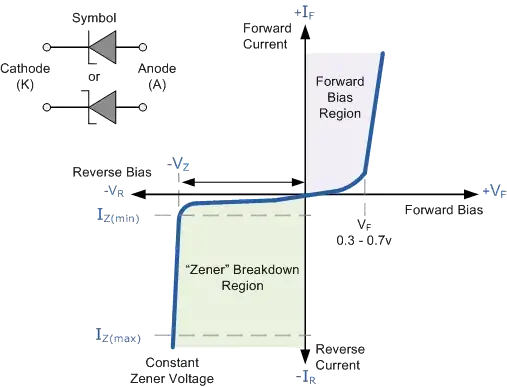

I'm not sure which circuits you are looking at that you think modify the input voltage. A Zener diode is just a diode that has been designed to break down at a low, well-defined voltage when it is reverse-biased.

You need a resistor R in series with the signal to limit the current, with the Zener connected across the ADC input (from the ADC input to 0 V).

Zeners come in standard voltages, so you might find one that breaks down at, say, 4.6 volts, a bit less than 5 volts.

When the input to the ADC is less than 4.6 volts, the Zener is not conducting. Its resistance is very high, so almost no current is drawn.

    Vin ----[ R ]----+----> Vadc (ADC input)
                     |
                     Z   (cathode at the Vadc node, so Vz = Vadc)
                     |
    0V --------------+

Under this circumstance, \$V_{adc} = V_{in}\$, because Z has a very high resistance.

(Which is why I'm puzzled that you think such a circuit affects \$V_{in}\$, the input signal. At high frequencies the Zener's capacitance might come into play and affect the signal, but I'm assuming your signals aren't that high in frequency.)
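To put a number on "almost no current", here is a rough Python estimate of the error caused by the Zener's small reverse leakage below breakdown. It assumes R sits in series between \$V_{in}\$ and the ADC input, so any leakage through Z also flows through R and drops a little voltage there. The 1 µA leakage, 1 kΩ resistor and 10-bit ADC with a 5 V reference are hypothetical values, not taken from any datasheet.

```python
def leakage_error_v(i_leak_a: float, r_series_ohm: float) -> float:
    """Voltage dropped across the series resistor by the Zener's leakage."""
    return i_leak_a * r_series_ohm


def adc_lsb_v(v_ref: float, bits: int) -> float:
    """Size of one code step (LSB) for an ideal ADC."""
    return v_ref / (2 ** bits)


# Hypothetical values: 1 uA leakage just below breakdown, R = 1 kOhm,
# 10-bit ADC with a 5 V reference.
err = leakage_error_v(1e-6, 1e3)
lsb = adc_lsb_v(5.0, 10)
print(f"error = {err * 1e3:.2f} mV, LSB = {lsb * 1e3:.2f} mV, "
      f"error = {err / lsb:.2f} LSB")
```

With these assumed numbers the error is about 1 mV, roughly a fifth of an LSB. Check the leakage figure for the Zener you actually choose; it usually rises as the voltage approaches breakdown, so the error is largest near the top of the range.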

Now when \$V_{in}\$ goes too high, say 4.7 volts (you need 4.6 volts across the Zener, plus a little across the resistor), the Zener breaks down and conducts, keeping the voltage across it at about 4.6 volts however high \$V_{in}\$ goes. \$V_{in}\$ can go to 5 volts and higher, but the conducting Zener holds \$V_{adc}\$ at about 4.6 to 4.7 volts.
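The clamping action can be sketched with a simple piecewise-linear Zener model in Python. This assumes R in series between \$V_{in}\$ and the ADC input, the Zener across the ADC input, an ADC input that draws no current, and a Zener that is an open circuit below \$V_z\$ and a voltage source \$V_z\$ in series with a small dynamic resistance once it conducts. The 1 kΩ resistor and 10 Ω dynamic resistance are hypothetical values.

```python
from typing import Tuple


def zener_clamp(v_in: float, r_series: float, v_z: float,
                r_z: float = 0.0) -> Tuple[float, float]:
    """Return (v_adc, zener_current) for a series-R / shunt-Zener clamp.

    Piecewise-linear model: the Zener is open below v_z and, once it
    conducts, behaves as v_z in series with its dynamic resistance r_z.
    The ADC input is assumed to draw no current.
    """
    if v_in <= v_z:
        # Zener off: no current flows through R, so the ADC sees v_in.
        return v_in, 0.0
    # Zener on: the excess voltage drives current through R and r_z.
    i_z = (v_in - v_z) / (r_series + r_z)
    return v_z + i_z * r_z, i_z


for v in (3.0, 4.6, 5.0, 10.0):
    v_adc, i_z = zener_clamp(v, r_series=1e3, v_z=4.6, r_z=10.0)
    print(f"Vin = {v:5.2f} V -> Vadc = {v_adc:.3f} V, Iz = {i_z * 1e3:.2f} mA")
```

With these assumed values, even a 10 V input leaves the ADC at only about 4.65 V, while inputs below 4.6 V pass through unchanged. The model ignores the Zener's soft knee and leakage, so it is optimistic right around the breakdown voltage.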

Current now flows through the conducting Zener, but the resistor R limits it to prevent a) burnout of the Zener and b) damage to the output stage of whatever is supplying the signal \$V_{in}\$.

When the Zener is conducting, it needs a certain current through it (its operating current) in order to sit at its specified breakdown voltage. Some Zeners require a few milliamps, but low-current Zeners are available that operate at much less. Choosing the right Zener and calculating R correctly are important to ensure that a) the Zener clamps at the voltage you expect (a Zener only reaches its nominal voltage at its specified operating current), and b) the current is limited to a safe value for both the Zener and the output of the previous stage that is supplying \$V_{in}\$.
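The current-limiting part of that calculation (point b) can be sketched in Python. The worst case is the highest input voltage you expect, with the Zener holding \$V_z\$ and the excess dropped across R. The 12 V worst-case input, 4.6 V Zener, 5 mA current limit and 1.5 kΩ chosen value are all hypothetical design numbers.

```python
def min_series_resistance(v_in_max: float, v_z: float, i_max: float) -> float:
    """Smallest R (ohms) that keeps the clamp current at or below i_max."""
    # Worst case: the excess (v_in_max - v_z) is dropped across R.
    return (v_in_max - v_z) / i_max


def zener_power(v_z: float, i_z: float) -> float:
    """Power (watts) dissipated in the Zener at current i_z."""
    return v_z * i_z


def resistor_power(v_in_max: float, v_z: float, r: float) -> float:
    """Power (watts) dissipated in R at the worst-case input."""
    return (v_in_max - v_z) ** 2 / r


# Hypothetical design: worst-case input 12 V, 4.6 V Zener, and a 5 mA
# limit assumed to suit both the Zener and the driving stage.
r_min = min_series_resistance(12.0, 4.6, 5e-3)
print(f"R >= {r_min:.0f} ohm")
r = 1.5e3  # next convenient standard value above r_min
i = (12.0 - 4.6) / r
print(f"I = {i * 1e3:.2f} mA, Pz = {zener_power(4.6, i) * 1e3:.1f} mW, "
      f"PR = {resistor_power(12.0, 4.6, r) * 1e3:.1f} mW")
```

Point a) is harder to capture in one formula: just above breakdown the clamp current is close to zero, and it only reaches the Zener's operating current when \$V_{in}\$ is well above \$V_z\$. That is why a low-current Zener helps.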

I think you need to look at the Zener circuits again. Can you explain why you think there's a voltage loss? I understand that the accuracy of \$V_{in}\$ at the ADC is important.

See that from \$-V_z\$ to \$V_f\$ it is not linear; I think it must have an effect on the input signal to the ADC.