Phase is just a way of describing time differences in periodic signals and events. Remember that, from a mathematical standpoint, a true periodic signal is eternal:

$$f(t + T) = f(t), \ \ \ -\infty < t < \infty$$

If you have two (ideal) sinusoidal signals, it doesn't make sense to say that one happens before or after the other. Neither of them really "happens" at all -- they're not distinct events, they're spread out over all time. This is most obvious in a Fourier series, where the coefficients contain no time variable at all, yet the signal is still completely defined.

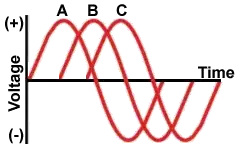

Since absolute time is meaningless for periodic signals, the only thing that matters is the relative time between two signals, which we call phase. But a relative time longer than the period doesn't really make sense, since shifting a periodic signal by \$\Delta t \pm T\$ gives exactly the same result as shifting it by \$\Delta t\$. So the relative time can always be reduced to a number between zero and the period, regardless of what the period is. Instead of messing around with physical units of time, it's more convenient to measure time in fractions of a period. For various historical and mathematical reasons, we've settled on two common fractions:

$$\frac{T}{360} \to 1^\circ$$

$$\frac{T}{2 \pi} \to 1\ \mathrm{radian}$$
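In other words, a time offset \$\Delta t\$ corresponds to a phase of \$360^\circ \cdot \Delta t / T\$, or \$2\pi \cdot \Delta t / T\$ radians. Here's a minimal Python sketch of that conversion, including the wrap-around to a single period. The function name `delay_to_phase` and the example numbers are my own, just for illustration:

```python
import math

def delay_to_phase(delta_t, period):
    """Convert a time offset to phase, wrapped into a single period.

    Returns (degrees, radians). Offsets that differ by a whole number
    of periods give (up to rounding) the same phase.
    """
    # Python's % takes the sign of the divisor, so negative offsets
    # (a signal that "leads") also wrap into the range 0..period.
    fraction = (delta_t % period) / period
    return 360.0 * fraction, 2.0 * math.pi * fraction

# Example: a 5 ms offset on a 50 Hz signal (T = 20 ms) is a quarter period.
print(delay_to_phase(0.005, 0.020))
# Adding a full period to the offset doesn't change the phase.
print(delay_to_phase(0.025, 0.020))
# A -5 ms offset is the same as a +15 ms offset, i.e. three quarters of a period.
print(delay_to_phase(-0.005, 0.020))
```

Note that the result depends only on the ratio \$\Delta t / T\$, which is the whole point of measuring in fractions of a period.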

So why is this useful? In electricity, it often happens that we have a power source that produces a sinusoidal voltage. Sinusoids are related to rotation, so you can get them from a generator rotating at a constant speed, for instance. Phase shows up in two places:

In capacitive and inductive devices, the voltage and current have the same frequency but different phases. In a circuit with several such devices, the voltages at different nodes can be out of phase with each other.

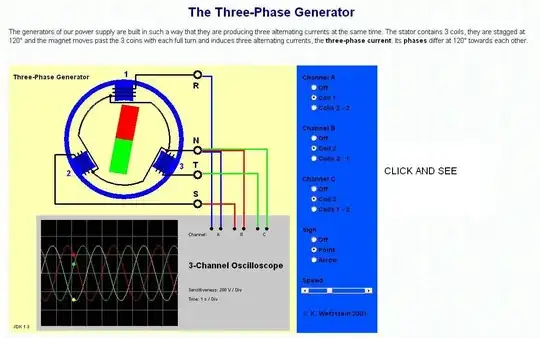

You can make a more powerful motor by using several voltages that are out of phase. The principle behind this is called polyphase power.
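To make both points a bit more concrete, here's a rough Python sketch. The first function computes the phase of the current relative to the source voltage in a series RC circuit, using the standard complex impedance \$R + 1/(j\omega C)\$. The second generates three sinusoids spaced 120° apart, which is the usual three-phase arrangement. The circuit, the component values, and the function names are assumptions I picked for illustration; they aren't taken from the figures above.

```python
import cmath
import math

def rc_current_phase_deg(freq_hz, r_ohm, c_farad):
    """Phase of the current relative to the source voltage, series RC circuit."""
    omega = 2 * math.pi * freq_hz
    z = r_ohm + 1 / (1j * omega * c_farad)   # complex impedance R + 1/(jwC)
    current = 1.0 / z                        # phasor current for a 1 V, 0-degree source
    return math.degrees(cmath.phase(current))

def three_phase_voltages(t, freq_hz, amplitude=1.0):
    """Instantaneous values of three sinusoids spaced 120 degrees apart."""
    omega = 2 * math.pi * freq_hz
    return [amplitude * math.sin(omega * t - k * 2 * math.pi / 3) for k in range(3)]

# Hypothetical values: 50 Hz source, 1 kOhm resistor, 1 uF capacitor.
# The result is positive, meaning the current leads the source voltage.
print(rc_current_phase_deg(50.0, 1000.0, 1e-6))

# At any instant the three voltages sum to (numerically) zero, and the
# total power into three equal 1-ohm resistors is constant at about 1.5.
for t in (0.0, 0.003, 0.007):
    v = three_phase_voltages(t, 50.0)
    total_power = sum(x * x for x in v)
    print(sum(v), total_power)
```

The constant total power is one reason polyphase systems are attractive for motors: a single sinusoid delivers power that pulses at twice the line frequency, while a balanced set of three delivers it steadily.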

In communications, sinusoidal signals are used as carriers to allow multiple signals to be transmitted over the same medium at the same time. When working with such signals, it's often necessary to talk about phase, as in #1 above.

Mathematically, a lot of things can be described using the Fourier transform, which represents a signal as a sum of sinusoids at different frequencies, each with its own amplitude and phase. This is helpful because things like electrical circuits respond differently to different frequencies.
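As a small illustration of "each with its own amplitude and phase", here's a sketch that evaluates one coefficient of a discrete Fourier transform in plain Python and reads the amplitude and phase back out of it. The test signal (64 samples, 3 cycles, amplitude 2, phase 60°) is made up for the example, and the phase is measured relative to a cosine, which is one common convention among several.

```python
import cmath
import math

def dft_bin(samples, k):
    """DFT coefficient X[k] = sum over n of x[n] * exp(-j*2*pi*k*n/N)."""
    n_total = len(samples)
    return sum(x * cmath.exp(-2j * math.pi * k * n / n_total)
               for n, x in enumerate(samples))

# Made-up test signal: 64 samples spanning exactly 3 cycles,
# amplitude 2, phase 60 degrees relative to a cosine.
N, cycles, amp, phase_deg = 64, 3, 2.0, 60.0
signal = [amp * math.cos(2 * math.pi * cycles * n / N + math.radians(phase_deg))
          for n in range(N)]

coeff = dft_bin(signal, cycles)
print(2 * abs(coeff) / N)                # recovered amplitude, close to 2.0
print(math.degrees(cmath.phase(coeff)))  # recovered phase, close to 60.0 degrees
```

The factor of 2/N is there because a real sinusoid splits its energy between a positive-frequency and a negative-frequency coefficient, each of magnitude N·A/2. In practice you'd use an FFT library rather than a direct sum like this, but the quantity being computed is the same.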

(Of course, no signal or process is really perfectly, eternally periodic. But ideal periodicity is a very useful mathematical approximation.)