This question is about obtaining a true RMS voltage reading from a running sampling ADC. Does this give me a true RMS reading, or where did I make a mistake?

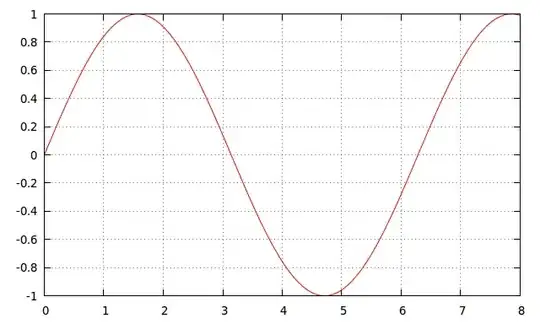

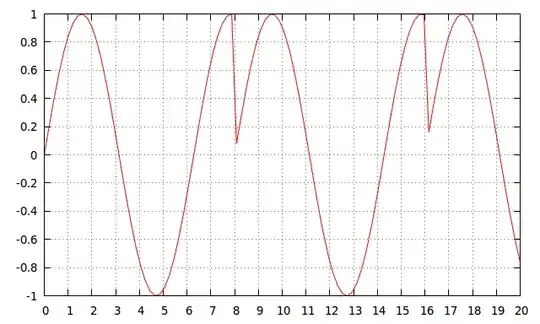

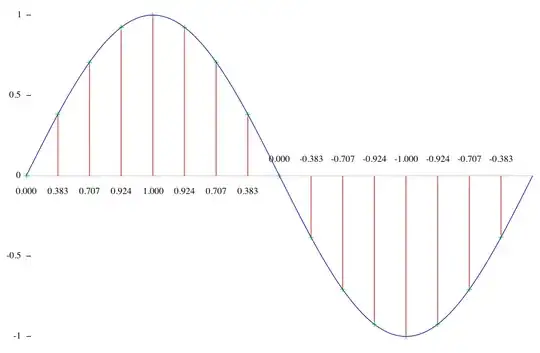

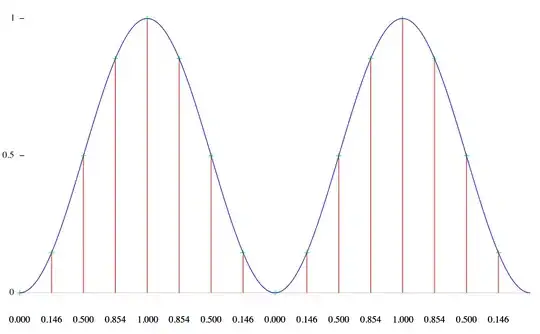

Construct a ring buffer of a large enough size and start writing ADC readings into it. The RMS of the signal is then approximately the RMS of all the numbers currently in that ring buffer.
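To make the ring buffer idea concrete, here is a minimal sketch in Python. The class name `RingBufferRMS` and the buffer size are placeholders chosen for illustration, and it assumes samples arrive one at a time as plain numbers. It recomputes the sum of squares on every call, which is simple rather than fast.

```python
import math
from collections import deque


class RingBufferRMS:
    """Hypothetical helper: RMS over the most recent `size` samples."""

    def __init__(self, size):
        # deque with maxlen drops the oldest sample automatically when full
        self.buf = deque(maxlen=size)

    def push(self, sample):
        self.buf.append(sample)

    def rms(self):
        if not self.buf:
            return 0.0
        # mean of squares over whatever is currently in the buffer
        mean_sq = sum(x * x for x in self.buf) / len(self.buf)
        return math.sqrt(mean_sq)


# Usage: a unit-amplitude sine, 10 full periods in 1000 samples
meter = RingBufferRMS(size=1000)
for i in range(1000):
    meter.push(math.sin(2 * math.pi * 10 * i / 1000))
sine_rms = meter.rms()
```

For a sine the result should come out close to amplitude divided by \$\sqrt{2}\$, provided the buffer spans a whole number of periods; with a partial period in the buffer the reading will wobble slightly.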

-- EDIT --

Or is this a good idea:

Keep a variable, \$n\$, holding a running average of the squares. Whenever a new sample \$k_i\$ arrives, it is updated as:

$$ n_{i+1} = \frac{2n_i + k_i^2}{2} $$

The RMS is then calculated by taking the square root of this number. (Taking a square root is more expensive than squaring, even on a 12-core 2.5 GHz Xeon.)
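One thing worth checking about the update: as written it simplifies to \$n_{i+1} = n_i + k_i^2/2\$, which keeps growing instead of settling to an average. The sketch below shows that, next to an exponentially weighted mean of squares. The latter is a swapped-in technique, not the formula above, and the function names and the smoothing factor `alpha` are assumptions made for illustration.

```python
import math


def literal_update(samples):
    """Apply n <- (2n + k^2) / 2 exactly as written in the formula."""
    n = 0.0
    for k in samples:
        n = (2 * n + k * k) / 2
    return n


def ewma_rms(samples, alpha=0.01):
    """Exponentially weighted mean of squares, then square root.

    alpha is an assumed smoothing factor (0 < alpha <= 1); a smaller
    value averages over more samples but responds more slowly.
    """
    mean_sq = 0.0
    for k in samples:
        # move the running mean a fraction alpha toward the new square
        mean_sq += alpha * (k * k - mean_sq)
    return math.sqrt(mean_sq)


# A constant input of 1.0: the literal formula accumulates 0.5 per sample
grown = literal_update([1.0] * 10)

# A constant input of 2.0: the weighted mean settles near 2.0
settled = ewma_rms([2.0] * 5000)
```

The weighted version needs no buffer and only one multiply-add per sample, at the cost of weighting recent samples more heavily than a plain window would.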

Clarification

I am not talking about whether the ADC is RMS or not - actually my ADC captures instantaneous values. I am talking about how to take this instantaneous value and apply an algorithm to it, to approximate an RMS measurement.

I am also not talking about embedded programming with limited resources. The system itself is not relevant to this question, but it has a powerful server in it that can (and will) be used to crunch most, if not all, of the numbers.