If I use a power source rated at 2.5 V and 1 A to power a standard red LED, what will happen to the LED? Will it burn out because of the high current rating or not?

5 Answers

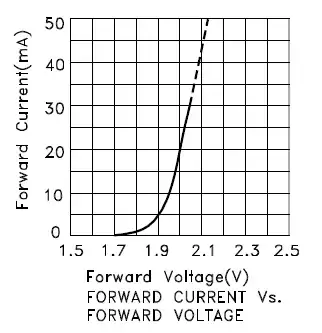

The relationship between voltage and current in a LED looks like an exponential curve. The picture below shows the relationship for a LED with a 2.0V forward voltage @ 20mA.

If this was connected to a 2.5V power source with no current limiting resistor, the amount of current flowing would be very high, much higher than the LED's rated peak forward current. The LED would fail catastrophically, probably with a pop and some smoke.*

If the power supply were rated at 2.5 V and, say, 10 mA (a very small current rating that you probably wouldn't find in practice), the LED would try to draw more current at 2.5 V than the supply could deliver, and the supply's output voltage would drop.

*When I was a kid I had a 200-in-1 electronics project kit. As the batteries wore down I kept reducing the LED resistors until eventually I had no resistor at all. Then one day I changed the batteries and every LED blew. I was devastated.
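To make the two scenarios in this answer concrete, here is a minimal Python sketch. It assumes an ideal exponential (Shockley-style) LED model calibrated to the 2.0 V at 20 mA point from the curve, an assumed ideality factor of 2, and a supply that behaves as an ideal constant-voltage source with a hard current limit. That supply model is hypothetical: real bench supplies vary, and cheap adapters may simply sag or overheat. The ideal LED model also ignores internal series resistance and self-heating, so the current it would predict at a full 2.5 V is far higher than a real LED would draw; the point is only how steep the curve is.

```python
import math

# Assumed ideal exponential LED model: I = I_REF * exp((V - V_REF) / (N * V_T)).
# Calibrated to 20 mA at 2.0 V; N = 2 is an assumed ideality factor, not a datasheet value.
# Series resistance and self-heating are ignored.
V_T = 0.02585               # thermal voltage near room temperature, volts
N = 2.0                     # assumed ideality factor
V_REF, I_REF = 2.0, 0.020   # calibration point: 20 mA at 2.0 V

def led_current(v: float) -> float:
    """LED current (A) at terminal voltage v (V) in the ideal model."""
    return I_REF * math.exp((v - V_REF) / (N * V_T))

def led_voltage(i: float) -> float:
    """Inverse of led_current: the voltage (V) at which the LED draws i (A)."""
    return V_REF + N * V_T * math.log(i / I_REF)

def operating_point(v_set: float, i_limit: float) -> tuple[float, float]:
    """(voltage, current) for an LED connected directly across a supply modeled
    as a constant voltage v_set with a hard current limit i_limit (hypothetical)."""
    i_demand = led_current(v_set)
    if i_demand <= i_limit:
        return v_set, i_demand             # supply holds its set voltage
    return led_voltage(i_limit), i_limit   # supply limits; its voltage drops

for limit in (1.0, 0.010):
    v, i = operating_point(2.5, limit)
    print(f"{limit * 1000:6.0f} mA supply -> {v:.3f} V across LED, {i * 1000:.1f} mA")
```

In this model the 1 A supply cannot hold 2.5 V and ends up pushing its full 1 A through the LED at about 2.2 V, far beyond a 20 mA rating, while the 10 mA supply sags to just under 2.0 V and the LED survives.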


The 20 mA frequently suggested may be a "recommended maximum" current; LEDs will work at much lower current if you don't need maximum brightness. I recently used a green LED at only 1 mA, and it is still brighter than I want! – Peter Bennett Jan 26 '15 at 23:20

I like the anecdote! Everything is more interesting when you make a story out of it. – Keegan Jay Jan 26 '15 at 23:50

Assuming that by "standard LED" you mean an LED rated for about 20 mA at about 1.5 V, yes, it will probably burn out. The current rating of the power supply has nothing to do with it. To match the circuit to the LED, you need to place a series resistor that satisfies the LED's rating. In the given example, the voltage drop across the resistor should be \$1V\$ (2.5 V minus 1.5 V), while the current is \$20mA\$. So the resistor value should be \$1V/20mA=50\Omega\$.
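The same calculation as a small Python function, using this answer's assumed 1.5 V / 20 mA LED. The power check is an addition: the resistor also has to dissipate \$I^2R\$, which here is only about 20 mW, so any ordinary small resistor will do.

```python
def series_resistor(v_supply: float, v_led: float, i_led: float) -> float:
    """Series resistor (ohms) that drops the excess supply voltage at the target LED current."""
    if v_supply <= v_led:
        raise ValueError("supply voltage must exceed the LED forward voltage")
    return (v_supply - v_led) / i_led

# This answer's example: 2.5 V supply, LED assumed to drop 1.5 V at 20 mA.
i_led = 0.020
r = series_resistor(2.5, 1.5, i_led)
p = i_led ** 2 * r   # power dissipated in the resistor, watts
print(f"R = {r:.0f} ohm, resistor dissipates {p * 1000:.0f} mW")
```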


The 1 A rating on your power supply only means that it CAN supply up to 1 A. The current that actually flows through the circuit is determined by the components.
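A tiny Python illustration of this point, using a plain resistor as the load (125 Ω is an arbitrary example value, and the supply is treated as an ideal voltage source). Ohm's law gives the current from the supply voltage and the load alone; the supply's rating only has to be at least that large. An LED is not a resistor, but the principle is the same: its steep I-V curve sets the current, and at 2.5 V that curve can call for far more than 20 mA.

```python
def load_current(v_supply: float, r_load: float) -> float:
    """Current (A) drawn by a resistive load from an ideal voltage source.
    The supply's current rating does not appear: the load sets the current."""
    return v_supply / r_load

# Hypothetical comparison: the same 125 ohm load on supplies with different ratings.
for rating_a in (1.0, 10.0):
    i = load_current(2.5, 125.0)
    print(f"{rating_a:4.1f} A supply: load draws {i * 1000:.0f} mA "
          f"(within rating: {i <= rating_a})")
```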


I first wrote that it wouldn't get destroyed but later realised that's wrong – mischnic Jan 26 '15 at 22:34


Basically, my question was this: if the power supply can provide 1 A max and the LED needs about 20 mA, does it draw only 20 mA or 1 A? – user3437592 Jan 27 '15 at 08:06

Yes, it will (probably) burn out the LED.

I am assuming the LED is a standard red LED with a 1.8V voltage drop. Since the applied voltage is greater than this, you will get a large current. The supply maxes out at 1A, which is definitely enough to burn out a standard LED.

The right way to do this is to put in a resistor that sets the correct current given the supply voltage and the LED's voltage drop, as Eugene Sh. says.

If your supply were rated for less, say 20 mA, it would still apply 2.5 V, but once the current rose above 20 mA the supply's voltage would start to drop and the LED would be saved. So in some sense the LED burns out because of the supply's high current rating; but, as mischnic says, the fact that the supply CAN deliver 1 A does not mean it will under all conditions. The mismatch in voltage is the real problem. It is possible that your LED wants exactly 2.5 V and your supply is accurate enough to deliver exactly that, in which case you would be OK. You need more information about the LED to know that (and would need to tell us more for a more accurate answer).
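To see why relying on "exactly 2.5 V" is fragile, here is a Python sketch using an ideal exponential LED model, this time assuming a hypothetical LED that draws 20 mA at exactly 2.5 V, with an assumed ideality factor of 2. It prints the current for small supply-voltage errors. A real LED's internal series resistance softens this somewhat, so treat the numbers as illustrative only.

```python
import math

# Ideal exponential LED model (assumed), calibrated so a hypothetical
# LED draws 20 mA at exactly 2.5 V. N = 2 is an assumed ideality factor.
V_T = 0.02585               # thermal voltage near room temperature, volts
N = 2.0
V_REF, I_REF = 2.5, 0.020

def led_current(v: float) -> float:
    """LED current (A) at voltage v (V) in the ideal model."""
    return I_REF * math.exp((v - V_REF) / (N * V_T))

# Current for supply voltages a few percent off the nominal 2.5 V.
for err_pct in (-2, -1, 0, 1, 2):
    v = V_REF * (1 + err_pct / 100)
    print(f"supply {err_pct:+d}% ({v:.3f} V): {led_current(v) * 1000:6.1f} mA")
```

In this model a supply just 1% high gives roughly 1.6 times the intended current, and 2% high gives more than 2.5 times, which is why a series resistor (or a constant-current driver) is preferred over trusting the supply's accuracy.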


Short answer: do not connect LEDs directly to a power supply (unless it is a special constant-current power supply designed for LEDs). You will burn them out!

Long answer: LEDs have a fairly stable, nearly constant voltage drop and can tolerate any current below their maximum specified (datasheet) current. Most standard through-hole LEDs (3 or 5 mm diameter) can withstand only about 20 mA or less. Some (expensive) LEDs can carry 3 A, so you need to know the specs for your particular part.

In any case, you have to provide a constant current for the LED (not a constant voltage), within the range it can accept. There are several ways to do this; the simplest is a current-limiting resistor connected in series. Calculating its value is really simple:

You need to know the voltage drop and maximum current for your LED. The best way is to read the datasheet; if you don't have one, it is educated guesswork. Simple red LEDs normally have a drop of around 1.8 V. Let's say the current we want is 10 mA.

Resistor value will be

$$R = \dfrac{V_{psu}-V_d}{I}$$

where:

- \$V_{psu} = 2.5V\$ (the power supply voltage)

- \$V_d = 1.8V\$ (for standard red LED)

- \$I\$ is the current we want to have (10mA = 0.01A)

$$R = \frac{2.5-1.8}{0.01} = 70 \Omega$$

Nearest common value is 68 Ω.
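The calculation above, plus picking a standard part, as a short Python sketch. The E12 table is the standard preferred-value series; the 1.8 V drop and 10 mA target are this answer's assumptions. Choosing the nearest value (68 Ω) gives slightly more than the target current, about 10.3 mA, which is still well below a typical 20 mA rating; rounding up to 82 Ω instead would keep the current at or below 10 mA.

```python
import math

# E12 preferred resistor values for one decade, scaled by 10 (10 ohm .. 82 ohm).
E12 = (10, 12, 15, 18, 22, 27, 33, 39, 47, 56, 68, 82)

def nearest_e12(r: float) -> float:
    """Return the E12 value closest to r (ohms), compared on a log scale."""
    scale = 10 ** math.floor(math.log10(r)) / 10
    # Include the next decade too, so e.g. 95 ohm can round up to 100 ohm.
    candidates = [m * scale * k for m in E12 for k in (1, 10)]
    return min(candidates, key=lambda c: abs(math.log(c / r)))

V_PSU, V_D, I_TARGET = 2.5, 1.8, 0.010   # supply volts, LED drop volts, amps

r_exact = (V_PSU - V_D) / I_TARGET       # about 70 ohm
r_std = nearest_e12(r_exact)             # nearest standard value
i_actual = (V_PSU - V_D) / r_std         # current with the standard resistor
p_resistor = i_actual ** 2 * r_std       # power dissipated in the resistor, W

print(f"exact {r_exact:.1f} ohm -> use {r_std:g} ohm: "
      f"I = {i_actual * 1000:.2f} mA, P = {p_resistor * 1000:.1f} mW")
```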