I'm attempting to emulate the modulation and demodulation of a PAL video frame in software, but I'm having trouble understanding how the AM (luma) and QAM (UV) components of the signal are separated during the demodulation process.

Background:

The software I'm writing takes an RGB bitmap, translates it to Y'UV using the BT.601 standard, generates (modulates) a signal as a series of samples, then saves those samples to a wave file. Those samples can then be loaded back in and demodulated back into a bitmap, with the H-sync pulses honoured against the H-pos ramp generator as an old CRT TV would do, and finally translated back into an RGB bitmap.
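For reference, the colour-space step at each end of that pipeline can be sketched roughly as follows. This is only an illustration, not my actual code: it assumes gamma-corrected R'G'B' values in the range 0..1, the BT.601 luma weights, and the usual analogue PAL scaling factors for U and V. The function names `rgb_to_yuv` and `yuv_to_rgb` are made up for this example.

```python
def rgb_to_yuv(r, g, b):
    """Convert gamma-corrected R'G'B' (assumed range 0..1) to Y'UV."""
    y = 0.299 * r + 0.587 * g + 0.114 * b   # BT.601 luma weights
    u = 0.492 * (b - y)                     # scaled B' - Y' colour difference
    v = 0.877 * (r - y)                     # scaled R' - Y' colour difference
    return y, u, v


def yuv_to_rgb(y, u, v):
    """Invert rgb_to_yuv by undoing the scaling, then solving for G'."""
    r = y + v / 0.877
    b = y + u / 0.492
    g = (y - 0.299 * r - 0.114 * b) / 0.587
    return r, g, b
```

Greys (R' = G' = B') come out with U and V at zero, which is why the black-and-white mode mentioned below only needs the luma channel.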

There are two motivations behind this project: first, to create some cool-looking analog fuzz in software; and second, to better understand the TV standards and basic modulation / demodulation techniques in a domain (software) where I am more comfortable.

What I've got so far:

The code performs the modulation fine, and when the result is viewed in Audacity (with a sample rate faked at 1/1000th the real frequency) it shows a signal that I recognise as a series of PAL picture lines. The full process works if I put it in black-and-white mode, which omits the QAM signal from the modulation step and leaves only the amplitude-modulated luma channel. I've also tested the QAM modulation and demodulation code against a simple audio file, and it works great. The only bit I haven't got working is the image demodulation with both the AM luma and QAM colour components included.

The confusion:

Given a pure, untouched QAM signal, it seems relatively trivial to demodulate it back into its two original component signals, by multiplying it with the carrier's cosine and sine individually:

$$I_t = s_t \cos(2\pi f_c t)$$ $$Q_t = s_t \sin(2\pi f_c t)$$

The two components are then low-passed (with the cutoff at the carrier frequency) to remove the high-frequency terms around $2f_c$ that the multiplication produces. This seems to work great, and as I noted above my implementation of QAM demodulation works just fine.
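To make the QAM-only case concrete, here is a minimal sketch of that multiply-then-low-pass procedure. It is not my actual code. It assumes the signal was built as $s_t = I_t \cos(2\pi f_c t) + Q_t \sin(2\pi f_c t)$, uses a crude moving average as a stand-in for a proper low-pass filter, and multiplies by 2 to undo the factor of 1/2 that the mixing introduces. The names `qam_modulate`, `moving_average` and `qam_demodulate` are made up for this example.

```python
import math


def qam_modulate(i_vals, q_vals, fc, fs):
    """Assumed convention: s[n] = I[n]*cos(w*n) + Q[n]*sin(w*n), w = 2*pi*fc/fs."""
    w = 2.0 * math.pi * fc / fs
    return [i * math.cos(w * n) + q * math.sin(w * n)
            for n, (i, q) in enumerate(zip(i_vals, q_vals))]


def moving_average(x, width):
    """Crude FIR low-pass: mean of every run of `width` consecutive samples.

    Returns len(x) - width + 1 values (no padding at the edges).
    """
    out = []
    acc = 0.0
    for n, value in enumerate(x):
        acc += value
        if n >= width:
            acc -= x[n - width]      # drop the sample that left the window
        if n >= width - 1:
            out.append(acc / width)
    return out


def qam_demodulate(s, fc, fs, width):
    """Mix with cos and sin of the carrier, then low-pass each product."""
    w = 2.0 * math.pi * fc / fs
    # The factor 2 compensates for cos^2 and sin^2 averaging to 1/2.
    i_mixed = [2.0 * v * math.cos(w * n) for n, v in enumerate(s)]
    q_mixed = [2.0 * v * math.sin(w * n) for n, v in enumerate(s)]
    return moving_average(i_mixed, width), moving_average(q_mixed, width)
```

If `width` is a whole number of carrier periods in samples, the moving average cancels the $2f_c$ terms exactly for constant I and Q. A real implementation would want a better filter, and the receiver's carrier phase has to match the transmitter's.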

Similarly, I can implement simple AM demodulation, even when the signal is accompanied by other signals in separate parts of the frequency domain - it's just a case of high-pass and/or low-pass filtering until you've got the bit you want.

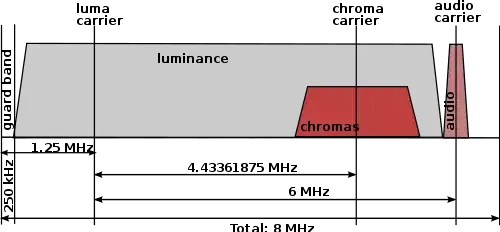

However, from what I can see, the QAM modulated UV component is added to the base AM luma signal, with both parts overlapping in the frequency domain, as the following diagram from Wikipedia appears to show:

At this point I'm a little stumped. It doesn't look like I can low-pass to get the AM luma signal due to the overlap, and I can't see how I'd subtract the QAM signal from the underlying AM.

What am I missing? What are the logical steps that should be taken to separate the luma and UV components?