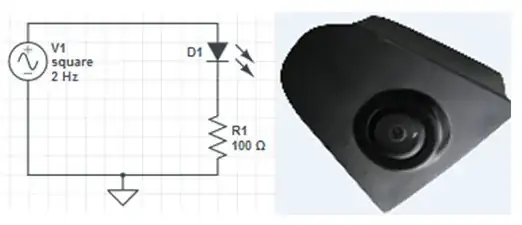

Flashing LED light captured by the camera

Displayed image detected by the photodiode

I have a 500 µs, 2 V peak-to-peak square-wave signal that drives an LED. When I measure the waveform across the resistor in series with the LED, I get a square wave. A camera takes this flashing light as its input, and I show the camera's output on a display.

I have built a photodiode circuit (a photodiode in series with a 100 kΩ resistor, powered from a 5 V supply) and placed it next to the display in a dark room. When the display shows the flashing LED captured by the camera, the photodiode is activated. The problem is that I cannot see any variation in the waveform: as soon as the photodiode activates, the output goes straight to 5 V DC. I need to see a square wave at the photodiode as well.

I want to calculate the delay between the camera input and the output on the display. How can I get a square wave across the photodiode so that I can compare its waveform with the LED's? Where am I going wrong, and what steps should I follow?
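Once both waveforms are visible, the delay can be estimated from the two captures. The sketch below is a minimal, illustrative approach, not a prescribed method. It assumes you can export both channels (LED resistor voltage and photodiode voltage) as evenly spaced samples with sample interval `dt`, for example from a two-channel scope capture. It finds rising-edge times by threshold crossing with linear interpolation, then averages the time from each LED edge to the next photodiode edge. The function names `rising_edges` and `mean_delay` and the threshold values are hypothetical.

```python
from typing import List, Sequence


def rising_edges(samples: Sequence[float], threshold: float, dt: float) -> List[float]:
    """Return the times (in seconds) at which the signal crosses `threshold` going up.

    Linear interpolation between the two samples around each crossing
    gives timing resolution finer than one sample interval `dt`.
    """
    edges = []
    for i in range(1, len(samples)):
        a, b = samples[i - 1], samples[i]
        if a < threshold <= b:  # upward crossing; b > a, so no division by zero
            frac = (threshold - a) / (b - a)
            edges.append((i - 1 + frac) * dt)
    return edges


def mean_delay(led: Sequence[float], pd: Sequence[float], dt: float,
               led_threshold: float, pd_threshold: float) -> float:
    """Average time from each LED rising edge to the next photodiode rising edge.

    Separate thresholds are used because the two channels have different
    amplitudes (about 2 V p-p for the LED, up to 5 V for the photodiode).
    """
    led_edges = rising_edges(led, led_threshold, dt)
    pd_edges = rising_edges(pd, pd_threshold, dt)
    delays = []
    j = 0
    for t in led_edges:
        # Skip photodiode edges that occur before this LED edge.
        while j < len(pd_edges) and pd_edges[j] < t:
            j += 1
        if j == len(pd_edges):
            break  # no photodiode edge left to pair with
        delays.append(pd_edges[j] - t)
    if not delays:
        raise ValueError("no matching LED/photodiode edge pairs found")
    return sum(delays) / len(delays)


# Synthetic check: 10 µs sampling, 1 ms period, photodiode lags by 30 samples.
led = [1.0 if (i % 100) < 50 else 0.0 for i in range(1000)]
pd = [5.0 if ((i - 30) % 100) < 50 else 0.0 for i in range(1000)]
print(mean_delay(led, pd, 1e-5, 0.5, 2.5))  # about 0.0003 s
```

Pairing each LED edge with the *next* photodiode edge only gives an unambiguous answer when the true delay is shorter than one period of the test signal. If the camera-to-display delay is longer than that, this method reports the delay modulo the period, so a slower test signal or a single isolated pulse would be needed.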

Photodiode info: