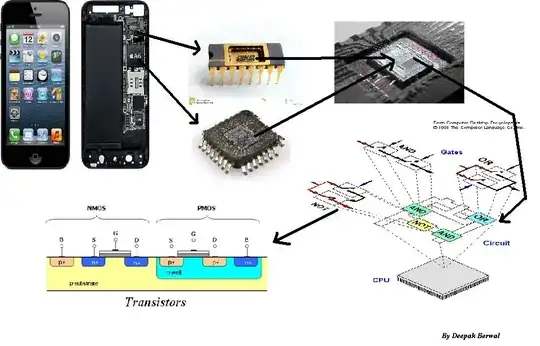

Aside from increasing the raw storage capacity of RAM, caches, and registers, as well as adding more computing cores and wider buses (32-bit vs. 64-bit, etc.), it is because the CPU is increasingly complicated.

CPUs are computing units made up of other computing units, and a CPU instruction goes through several stages. In the old days there was effectively one stage: the clock period had to be as long as the worst-case time for all the logic gates (made from transistors) to settle. Then we invented pipelining, where the CPU is broken up into stages: instruction fetch, decode, process (execute), and write result. Because each stage does only about a quarter of the work, that simple 4-stage CPU can ideally run at up to 4x the original clock speed. Each stage is separate from the others, so up to 4 instructions can be in flight ("pipelined") at once, one per stage. Once the pipeline is full, one instruction completes every (shorter) cycle, giving up to 4x the throughput. (In practice, the registers between stages and uneven stage lengths keep the gain below 4x.) However, pipelining creates "hazards": an instruction may depend on the result of the instruction just ahead of it, but that result has not been written back yet when the dependent instruction enters the process stage, because the earlier instruction is only just leaving it. Therefore, you need to add circuitry to forward (bypass) the result directly to the instruction entering the process stage. The alternative is to stall the pipeline, which decreases performance.
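
A back-of-the-envelope model makes the 4x figure concrete. This is a minimal sketch that assumes perfectly balanced stages, no overhead from the registers between stages, and a fixed number of bubble cycles per hazard that is not forwarded; the function names and the `stall_per_hazard` parameter are illustrative, not taken from any real simulator.

```python
def pipelined_cycles(n, stages, hazards=0, stall_per_hazard=0):
    """Clock cycles to finish n instructions on a simple in-order pipeline."""
    if n <= 0:
        return 0
    # The first instruction needs `stages` cycles to travel through the pipeline;
    # after that, one instruction completes every cycle. Each hazard that is not
    # forwarded inserts `stall_per_hazard` bubble cycles (an assumed, fixed cost).
    return stages + (n - 1) + hazards * stall_per_hazard


def speedup(n, stages, hazards=0, stall_per_hazard=0):
    """Throughput gain over an unpipelined CPU running the same instructions."""
    # Unpipelined: one instruction per long clock period (normalized to 1.0).
    unpipelined_time = n * 1.0
    # Pipelined: the clock period shrinks to 1/stages of the original (ideal case).
    pipelined_time = pipelined_cycles(n, stages, hazards, stall_per_hazard) / stages
    return unpipelined_time / pipelined_time


print(f"{speedup(1000, 4):.3f}")                                   # 3.988
print(f"{speedup(1000, 4, hazards=500, stall_per_hazard=1):.3f}")  # 2.661
```

With many instructions the pipeline fill time becomes negligible and the ideal speedup approaches the number of stages; every bubble pulls it back down, which is why forwarding circuitry is worth its cost.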

Each pipeline stage, and particularly the process stage, can be subdivided into more and more steps. Every extra stage means more instructions in flight at once, and therefore more inter-dependencies (hazards) to detect and resolve, so you end up building a vast amount of circuitry just to manage them.

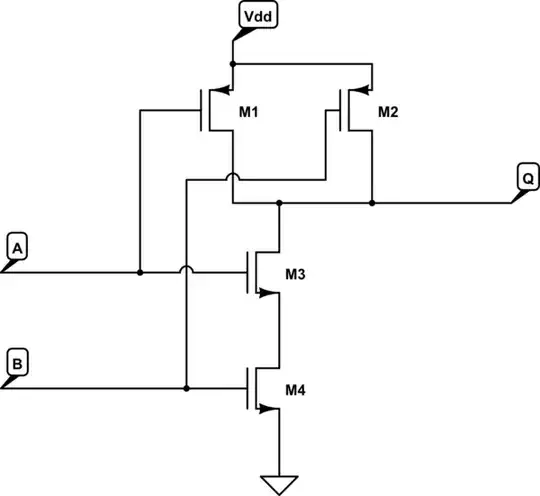

Other circuits can be enhanced as well. The simplest digital adder, the "ripple-carry" adder, is the easiest to build and the smallest, but also the slowest, because each bit must wait for the carry from the bit below it. A "carry-look-ahead" (CLA) adder computes the carries in parallel and is much faster, but its circuitry grows very quickly with the width of the adder. In my computer engineering course, my simulator ran out of memory on a 32-bit carry-look-ahead adder, so I cut it in half: two 16-bit CLA adders in a ripple-carry configuration, with the carry out of the lower half feeding the carry in of the upper half. (Even addition and subtraction are surprisingly hard to make fast in hardware; multiplication is harder still, and division is the hardest.)
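
To make the trade-off concrete, here is a bit-level sketch in Python; the function names are illustrative, not from any course or tool. `ripple_carry_add` passes the carry from bit to bit, `cla_add` evaluates every carry directly from the generate (g) and propagate (p) signals as a flat sum of products, the way a single-level look-ahead circuit would, and `split_cla_add32` chains two 16-bit CLA blocks in ripple-carry fashion. `flat_cla_literals` counts the inputs to the carry product terms as a rough proxy for circuit size; that metric is my assumption and ignores fan-in limits and gate sharing.

```python
def ripple_carry_add(a, b, width=32, carry_in=0):
    """Add bit by bit; each bit waits for the carry from the bit below it."""
    result, carry = 0, carry_in
    for i in range(width):
        ai, bi = (a >> i) & 1, (b >> i) & 1
        result |= (ai ^ bi ^ carry) << i
        carry = (ai & bi) | (carry & (ai ^ bi))
    return result, carry


def cla_add(a, b, width=16, carry_in=0):
    """Single-level carry look-ahead: every carry comes straight from g, p and carry_in."""
    g = [(a >> i) & (b >> i) & 1 for i in range(width)]    # generate: both bits are 1
    p = [((a >> i) ^ (b >> i)) & 1 for i in range(width)]  # propagate: exactly one bit is 1
    carries = [carry_in]
    for i in range(width):
        # c[i+1] = g[i] | p[i]g[i-1] | ... | p[i]...p[0]carry_in, never using c[i]
        c = 0
        for j in range(i + 1):
            term = g[j]
            for k in range(j + 1, i + 1):
                term &= p[k]
            c |= term
        term = carry_in
        for k in range(i + 1):
            term &= p[k]
        carries.append(c | term)
    result = 0
    for i in range(width):
        result |= (p[i] ^ carries[i]) << i
    return result, carries[width]


def split_cla_add32(a, b):
    """Two 16-bit CLA blocks in ripple-carry configuration."""
    low, carry16 = cla_add(a & 0xFFFF, b & 0xFFFF, 16, 0)
    high, carry32 = cla_add((a >> 16) & 0xFFFF, (b >> 16) & 0xFFFF, 16, carry16)
    return (high << 16) | low, carry32


def flat_cla_literals(width):
    """Inputs to all carry product terms: c[i+1] has terms of 1, 2, ..., i+2 inputs."""
    return sum(sum(range(1, i + 3)) for i in range(width))


print(split_cla_add32(0xFFFFFFFF, 1))                    # (0, 1)
print(flat_cla_literals(32), 2 * flat_cla_literals(16))  # 6544 1936
```

Under this rough metric a flat 32-bit look-ahead costs about 3.4 times as much as two 16-bit blocks, while the split version pays for the savings in speed: the upper block has to wait for the lower block's carry.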

A side effect of all this is that as we shrink transistors and subdivide the stages, clock frequencies can increase. The processor then switches its transistors more often and does more work per second, so it runs hotter. Also, as frequencies increase, propagation delays (the time it takes for a signal to travel through a pipeline stage's gates and wires and arrive at the other side) become more significant. No signal can travel faster than light, which covers about 1 foot (30 cm) per nanosecond, the length of one clock cycle at 1 GHz; real on-chip wires, slowed by their resistance and capacitance, are considerably slower. At 4 GHz a cycle lasts only 0.25 ns, so even a light-speed signal could cross at most about 3 inches per cycle, which makes chip layout increasingly important. So now you must start including additional buses and circuits to manage all the data moving around the chip.
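
The distance figures follow from dividing the speed of light by the clock frequency. The sketch below uses the speed of light in vacuum as the bound, so its results are optimistic upper limits; real on-chip signals cover much less ground per cycle. The constant and function names are just for illustration.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458
METERS_PER_INCH = 0.0254


def max_distance_per_cycle_inches(clock_hz):
    """Upper bound on how far any signal can travel in one clock period."""
    period_s = 1.0 / clock_hz
    return SPEED_OF_LIGHT_M_PER_S * period_s / METERS_PER_INCH


for ghz in (1, 2, 4):
    inches = max_distance_per_cycle_inches(ghz * 1e9)
    print(f"{ghz} GHz: {inches:.1f} in per cycle")  # 11.8, 5.9, 3.0
```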

We also add new instructions to chips all the time: SIMD (single instruction, multiple data) extensions, power-saving features, and so on. They all require circuitry.
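
As a small illustration of why SIMD needs its own circuitry, the sketch below models a packed 4 x 32-bit add on a 128-bit register. The lane width, lane count, and function names are assumptions chosen for illustration, not any particular instruction set's definition. The point is that a SIMD adder must cut the carry chain at every lane boundary, which an ordinary wide adder does not do.

```python
LANE_BITS = 32
LANES = 4
LANE_MASK = (1 << LANE_BITS) - 1


def pack(values):
    """Place LANES unsigned 32-bit values side by side in one 128-bit integer."""
    word = 0
    for i, value in enumerate(values):
        word |= (value & LANE_MASK) << (i * LANE_BITS)
    return word


def unpack(word):
    """Split a 128-bit integer back into its LANES 32-bit values."""
    return [(word >> (i * LANE_BITS)) & LANE_MASK for i in range(LANES)]


def packed_add(a, b):
    """Add lane by lane; each lane wraps around and its carry-out is dropped."""
    result = 0
    for i in range(LANES):
        shift = i * LANE_BITS
        lane_sum = (((a >> shift) & LANE_MASK) + ((b >> shift) & LANE_MASK)) & LANE_MASK
        result |= lane_sum << shift
    return result


a = pack([1, 2, 0xFFFFFFFF, 4])
b = pack([10, 20, 1, 40])
print(unpack(packed_add(a, b)))  # [11, 22, 0, 44]: lanes stay independent
print(unpack(a + b))             # [11, 22, 0, 45]: a plain 128-bit add leaks a carry
```

In hardware all four lane additions happen at once in a single instruction; the Python loop only models the result.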

Finally, we add more features to chips. In the old days, your CPU and your ALU (arithmetic logic unit) were separate; we combined them. The FPU (floating-point unit) was separate too, and that got combined as well. Nowadays we add USB 3.0, video acceleration, MPEG decoding, etc. We move more and more computation from software into hardware.