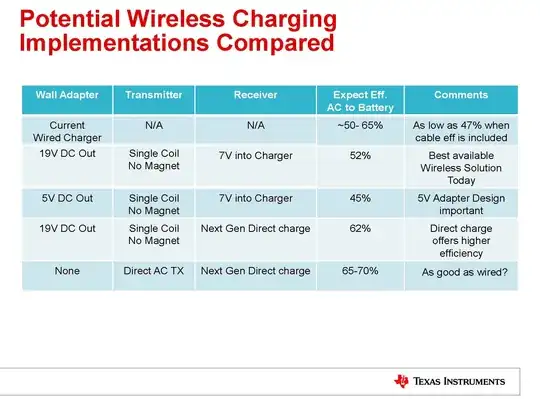

I am looking for practical data on the efficiency of wireless (inductive) power transfer compared with wired transfer. There is some information out there, but the source makes me hesitant to take it at face value.

Assuming standard US mains power (120 V AC), and charging an iPhone 5S battery (3.8 V, 5.92 W·h, 1560 mA·h) from 0% to 100%, how many watt-hours would be drawn from the wall by a standard wired charger vs. a wireless (inductive) charger?

If we take the stage efficiencies in the presentation linked above at face value and work backwards from the battery capacity, we get:

Wired (best case): 5.92 / 85% / 90% / 90% / 95% = 9.05 watt-hours (65.4% efficiency)

Wired (worst case): 5.92 / 85% / 80% / 80% / 95% = 11.46 watt-hours (51.7% efficiency)

Wireless (best case): 5.92 / 85% / 89% / 89% / 95% / 80% = 11.57 watt-hours (51.2% efficiency)

Wireless (worst case): 5.92 / 85% / 89% / 89% / 95% / 60% = 15.43 watt-hours (38.4% efficiency)
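The chain of divisions above can be checked with a short script. This is a minimal sketch that assumes, as the hand calculation does, that the stages are independent, so the overall efficiency is just the product of the per-stage efficiencies. The stage values are the ones quoted from the presentation; the names `CASES` and `wall_energy_wh` are hypothetical.

```python
from math import prod

# Battery energy: 3.8 V nominal x 1.560 Ah ~= 5.93 Wh; the question uses 5.92 Wh.
BATTERY_WH = 5.92

# Per-stage efficiencies exactly as listed in the question (taken from the
# presentation, not independently verified). The wireless cases carry one
# extra stage (80% best case, 60% worst case).
CASES = {
    "wired best": [0.85, 0.90, 0.90, 0.95],
    "wired worst": [0.85, 0.80, 0.80, 0.95],
    "wireless best": [0.85, 0.89, 0.89, 0.95, 0.80],
    "wireless worst": [0.85, 0.89, 0.89, 0.95, 0.60],
}


def wall_energy_wh(battery_wh, stage_efficiencies):
    """Return (energy drawn from the wall in Wh, overall efficiency).

    Assumes losses compound multiplicatively across the stages, so the
    wall energy is the battery energy divided by the product of the
    stage efficiencies.
    """
    overall = prod(stage_efficiencies)
    return battery_wh / overall, overall


for name, stages in CASES.items():
    energy, overall = wall_energy_wh(BATTERY_WH, stages)
    print(f"{name:15s} {energy:6.2f} Wh  ({overall:.1%} overall)")
```

With these inputs it reports about 9.05 Wh (65.4%) and 11.46 Wh (51.7%) for the wired cases, and 11.57 Wh (51.2%) and 15.43 Wh (38.4%) for the wireless cases.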

Yet the presentation concludes that wireless charging is an improvement over the standard wired charger.

That improvement seems to rest on the assumption that the device's efficiency will improve if the charger is integrated into the wireless receiver.

At the end of the day, what is the actual efficiency of a wired charger vs. a wireless charger? Can the wireless charger actually be an improvement in efficiency over a wired charger for things like charging an iPhone, or is it strictly a convenience-based change?