In the (very) old days things were slow and designers of logic circuits used discrete transistors and strange plate voltages like -15V to better confuse the vacuum tube guys (also because germanium PNP transistors were better for a period).

Then came vast (for the time) military demand for computers for navigation, and RTL (resistor-transistor logic) was developed.

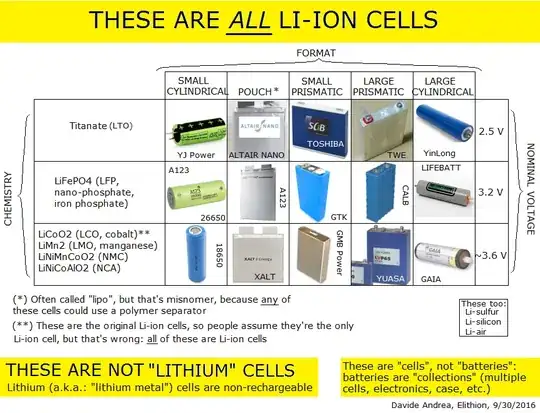

It was slow and power hungry, but it could be crammed into flat packs holding a NOR gate or a flip-flop. It used 3.6V (my memory, verified by the RTL Logic Cookbook, which says up to 4.5V but 3.6V nominal) or 4V (per schematics of the Apollo guidance computer power supply). Some other early documents indicate a 3V nominal supply.

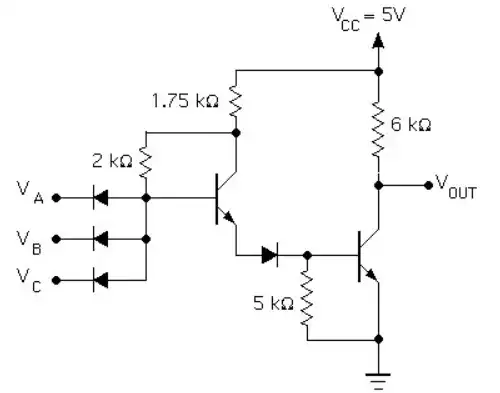

RTL begat DTL, which was the first, I believe, to use a single 5.0V nominal supply, in the form of the 930 series.

Millions of parts were being made at this point, mostly for military and similar applications, so there were lots of ceramic packages. NASA Apollo documents indicate more than 700,000 pieces of the flat pack (1.27mm lead pitch) as they were phasing out the 10-lead TO-5 packages. Then came TTL, which was backward compatible with DTL and rapidly replaced it, starting in the early-to-mid 1960s. For many years afterward, one would write "TTL/DTL compatible".

As an aside, around the same time ECL, with a -5.2V supply, went into mass production, but it was never used as widely as TTL.

Subsequent bipolar families (some more popular than others) such as 74L, 74H, 74S, 74F and 74LS all adopted the 5V supply.

Early MOS circuits used high voltages. To allow them to be used with TTL, they would have three supplies (+12V, +5V, and -5V). When early CMOS circuits were developed, a single 5V supply was a major selling point (early CMOS circuits would work at 5V, but not very well). Eventually, it became possible to make CMOS equivalents of the 74xx series that worked as well as, or better than, the originals.

Thus began a long period of relative stability during which 5V was the supply of choice for digital circuits (excepting ECL). This was fine from about 1965 to 2000 or so, but gradually it became less and less optimal as devices shrank and power consumption became more important commercially. We again have a Balkanized supply situation, with 1.8V, 2.5V, 3.3V, and 5V supplies all common. Choosing 5V for USB (and thence as a charger/power supply standard) means we'll continue to see 5V for many more years, if not decades.

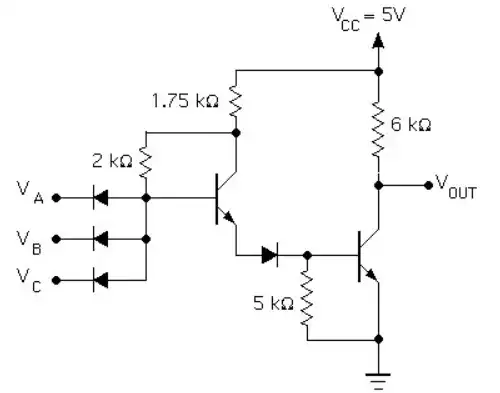

So it would seem to boil down to two things: a design decision by the folks making a logic family aimed at missiles and the like, that they would do best with another volt or so beyond what RTL commonly used, and the tremendous pull of backward compatibility with legacy requirements. Why the extra volt or volt and a half, you ask? DTL inputs had effectively three series silicon diode drops (see schematic above), and you'd want some reasonable voltage above that for the pull-up resistors to work, even with a supply 10% low and at -55°C (the military lower limit, where diode drops are at their largest). So 4V was a bit too low, 6V unnecessarily high, and 5V about right.
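To put rough numbers on that headroom argument, here is a minimal sketch. The 0.7V forward drop at 25°C and the -2mV/°C temperature coefficient are common rules of thumb for silicon junctions that I'm assuming for illustration; they are not taken from any DTL datasheet, and the function names are made up for this example.

```python
# Rule-of-thumb values, assumed for illustration only (not from a datasheet).
V_DIODE_25C = 0.70   # forward drop of a silicon junction at 25 °C, in volts
TEMPCO = -0.002      # change in forward drop per °C, in volts
N_DROPS = 3          # series junction drops at a DTL input (per the text)


def diode_drop(temp_c):
    """Approximate forward drop of one silicon junction at temp_c (°C)."""
    return V_DIODE_25C + TEMPCO * (temp_c - 25.0)


def pullup_headroom(v_nominal, temp_c=-55.0, tolerance=0.10):
    """Voltage left across the pull-up with the supply at its low limit."""
    v_min = v_nominal * (1.0 - tolerance)
    return v_min - N_DROPS * diode_drop(temp_c)


for v in (4.0, 5.0, 6.0):
    print(f"{v:.0f}V nominal: {pullup_headroom(v):.2f}V left for the pull-up")
```

Under these assumptions each junction drops about 0.86V at -55°C, so the three drops eat roughly 2.6V. A 4V supply that is 10% low leaves only about 1V across the pull-up, 5V leaves nearly 2V, and 6V nearly 3V.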

It's not quite in the same class as the claim that space shuttle booster dimensions were determined by the width of two Roman horse butts, but it's already spanning two human generations.

A 60W incandescent light bulb is 1900s technology and very inefficient, emitting only a few watts of visible light along with a whole bunch of IR, in accordance with Planck's law. An equivalent (in visible light) LED lamp uses little more than 10W. Eventually we may get down to somewhat less, perhaps 5 or 6W. A high-end PC CPU might use around the same amount of power as an incandescent bulb (as tightly regulated DC power), so it's pretty hard to cool. The cost of computing in power per FLOP tends to drop every year, as does the cost per lumen of light, but the technology changes. Incandescent lamps are a mature technology (the most recent major improvement was halogen).