I've always thought P = U × I (in watts, volts, and amperes, respectively), but I ran across this discussion on a forum:

> If you look in your panel there are 2 “hot wires coming in from the pole”. 1 is the 120V A phase and 1 is the 120V B phase. You need a hot leg from each one to make 240V. If you have a machine drawing 20 amps on 120V, it is drawing it from 1 phase. If you have the same machine drawing 10 amps on 240V, it is drawing 10 from the A phase and 10 from the B phase.
>
> The easiest motor for me to read the name plate on is a 1.5 HP 3450 rpm Baldor motor. It was on the shelf. It states at 115V it draws 13.2 amps. Next, it states at 230V it draws 6.6 amps.
>
> “amps x volts = watts”
>
> 13.2 × 115 = 1518
>
> 6.6 × 230 = 1518
>
> No matter what you do it will draw the same amperage total.
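
The nameplate arithmetic quoted above can be reproduced with a short Python sketch. It assumes only the two nameplate figures from the quote; note that volts × amps on an AC motor nameplate is apparent power in volt-amperes (VA), and the real power in watts also depends on the motor's power factor, which the quoted figures do not give.

```python
# Reproduce the nameplate arithmetic from the quoted forum post.
# Assumption: the only data are the two quoted nameplate ratings.
# Volts x amps here is apparent power (VA), not real power (W).

NAMEPLATE = {115: 13.2, 230: 6.6}  # volts -> full-load amps, from the quote


def apparent_power_va(volts: float, amps: float) -> float:
    """Apparent power S = V * I, in volt-amperes."""
    return volts * amps


for volts, amps in NAMEPLATE.items():
    print(f"{volts} V x {amps} A = {apparent_power_va(volts, amps):.0f} VA")

# For the same power draw, doubling the voltage halves the current.
s = apparent_power_va(115, 13.2)
print(f"{s:.0f} VA / 230 V = {s / 230:.1f} A")
```

Both products come out to 1518 VA, which is consistent with the current halving when the supply voltage doubles.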

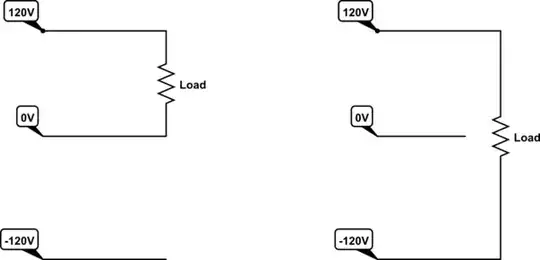

To me, this explanation contradicts itself, since it says that the motor draws both the same current and the same power at 115 V and 230 V. Is the motor actually drawing 6.6 A from each hot wire (for a total of 13.2 A), or is it drawing 3.3 A from each hot wire? If it's 6.6 A from each hot wire, the classic "current × voltage = power" equation seems misleading for 240 V "single-phase" circuits.
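
One way to check the per-wire currents is a small phasor model. This is a minimal sketch under stated assumptions, not a description of any real installation: an ideal split-phase supply with two 115 V legs 180° apart (the nameplate's nominal figures; US service is nominally 120/240 V), and the motor treated as a fixed, purely resistive impedance sized so that 230 V drives the nameplate's 6.6 A. The function name `split_phase_currents` is hypothetical.

```python
import cmath

# Assumed ideal split-phase supply: two legs measured from the neutral,
# 180 degrees apart, so the leg-to-leg voltage is 230 V.
V_A = cmath.rect(115, 0.0)        # leg A to neutral
V_B = cmath.rect(115, cmath.pi)   # leg B to neutral

# Assumed load: purely resistive, sized so 230 V drives 6.6 A.
Z = 230 / 6.6


def split_phase_currents(z: complex):
    """Hypothetical helper: return (I_A, I_B, I_N) for a single load
    connected between the two hot legs.

    Sign convention: positive current flows from the supply toward the load.
    """
    i_load = (V_A - V_B) / z      # a single current loop through both hots
    i_a = i_load                  # out on hot A...
    i_b = -i_load                 # ...and back on hot B
    i_n = -(i_a + i_b)            # Kirchhoff's current law at the load
    return i_a, i_b, i_n


i_a, i_b, i_n = split_phase_currents(Z)
print(f"|I_A| = {abs(i_a):.1f} A, |I_B| = {abs(i_b):.1f} A, |I_N| = {abs(i_n):.1f} A")

# Complex power supplied by each leg, referenced to neutral: S = V * conj(I).
s_a = V_A * i_a.conjugate()
s_b = V_B * i_b.conjugate()
print(f"leg A: {abs(s_a):.0f} VA, leg B: {abs(s_b):.0f} VA, total: {abs(s_a + s_b):.0f} VA")
print(f"230 V x 6.6 A = {230 * 6.6:.0f} VA")
```

In this model the same 6.6 A flows out on one hot wire and back on the other. Each leg supplies 115 V × 6.6 A = 759 VA relative to neutral, and the two legs together give 1518 VA, the same as 230 V × 6.6 A.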

Update: to clarify, this question is about 120 V and 240 V circuits in the US.