I want to increase the effective resolution of the ADC by oversampling and decimation. Unfortunately, the signal I'm reading is too clean, so I would like to add a bit of artificial noise (1 LSB peak to peak) to it.
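To illustrate why the clean signal is a problem, here is a minimal simulation sketch. It assumes an ideal rounding ADC, input expressed in LSB units, 2 extra bits (16 samples per result), and a zero-mean triangle dither of 1 LSB peak to peak spanning exactly one period per result. All function names are hypothetical and only serve the illustration.

```python
import math

def quantize(v_lsb):
    """Ideal ADC (assumed): round the input, given in LSB units, to the nearest code."""
    return math.floor(v_lsb + 0.5)

def triangle(i, period):
    """Zero-mean triangle wave, 1 LSB peak to peak, evaluated at sample index i."""
    phase = (i % period) / period
    ramp = 2 * phase if phase < 0.5 else 2 - 2 * phase  # 0 -> 1 -> 0
    return ramp - 0.5

def oversample(v_lsb, extra_bits, dither):
    """Sum 4**extra_bits samples, shift right by extra_bits, return the result in LSB."""
    n = 4 ** extra_bits
    total = 0
    for i in range(n):
        d = triangle(i, n) if dither else 0.0
        total += quantize(v_lsb + d)
    return (total >> extra_bits) / (2 ** extra_bits)

print(oversample(100.3, 2, dither=False))  # 100.0: every sample is the same code
print(oversample(100.3, 2, dither=True))   # 100.25: sub-LSB information recovered
```

Without dither, every sample lands on the same code, so averaging gains nothing. With the dither, the fraction of samples that cross into the next code carries the sub-LSB information.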

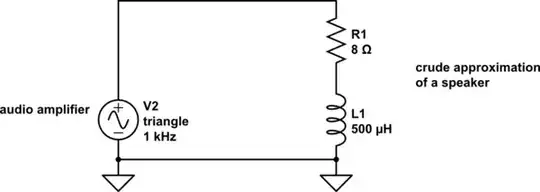

I would like to use an MCU timer to output a square wave, convert it to a triangle-like wave, and add it to the signal.
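As a rough sizing aid, the sketch below assumes the square wave is shaped by a single RC low-pass stage, which is only one possible way to get a triangle-like wave. For a 50% duty square wave swinging between 0 and `v_high`, the steady-state ripple of such a stage is `v_high * tanh(T / (4 * R * C))`. The 3.3 V supply, 12-bit ADC, 10 kHz timer frequency, and 10 ms time constant are placeholder assumptions, not values from my circuit.

```python
import math

def rc_ripple_pp(v_high, period, rc):
    """Steady-state peak-to-peak ripple of an RC low-pass driven by a
    50% duty square wave swinging between 0 and v_high (assumed topology)."""
    return v_high * math.tanh(period / (4.0 * rc))

def lsb_volts(v_ref, bits):
    """Size of one ADC LSB in volts."""
    return v_ref / (2 ** bits)

# Placeholder values (assumptions): 3.3 V logic, 12-bit ADC, 10 kHz square wave.
ripple = rc_ripple_pp(3.3, period=1e-4, rc=1e-2)
lsb = lsb_volts(3.3, 12)
print(f"ripple = {ripple * 1e3:.3f} mV, 1 LSB = {lsb * 1e3:.3f} mV")
print(f"attenuation still needed: {ripple / lsb:.1f}x")
```

When `R * C` is much larger than the period, the ripple approaches `v_high * T / (4 * R * C)` and the waveform is close to a true triangle.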

Below is my attempt, but it does not work as expected: the amount of noise added varies with the level of the analog signal.

Can someone enlighten me on this topic? How do I do this properly?

[Schematic created using CircuitLab]